🔥Building a Slack Bot with AI Capabilities - From Scratch! Part 4: Serverless with Lambda🔥

aka, I don't want to maintain infrastructure ever again

This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

These articles are supported by readers, so please consider subscribing to support me writing more of them <3 :)

This article is part of a series of articles, because 1 article would be absolutely massive.

Part 1: Covers how to build a slack bot in websocket mode and connect to it with python

Part 4 (this article): How to convert your local script to an event-driven serverless, cloud-based app in AWS Lambda

Part 7: Streaming token responses from AWS Bedrock to your AI Slack bot using converse_stream()

Part 8: ReRanking knowledge base responses to improve AI model response efficacy

Part 9: Adding a Lambda Receiver tier to reduce cost and improve Slack response time

Hey all!

Welcome to part 4 of this very large series about building an AI chatbot. Thus far, we’ve built a Slack App, and configured it to send webhooks to our ngrok instance, which is relaying requests to a local python script which constructs conversation context from slack threads, relays the requests over to Bedrock’s hosted instance of Claude Sonnet v3, and relays the responses back to a slack thread.

That’s a lot, so here’s a picture of what is going on so far:

And we’re going to update our architecture to no longer rely on a single server - we’re going to deploy our python application on an AWS Lambda serverless resource, and give it IAM permissions to do whatever it needs (no keys or static passwords on the AWS side), and then we’ll update our Slack App to send webhooks to lambda.

We’re also going to implement Bedrock Guardrails, which can police the inputs and outputs for our model to control some of the worst hallucinations the model might produce.

All of these changes will be implemented using terraform, and you can absolutely use a CI/CD solution to track changes to your application, and deploy on merge.

This entire solution is built for enterprise resiliency and deployment.

All the python, terraform, and binary ARM dependency layers are provided in the GitHub repo here:

Feel free to go check it out and deploy it all you want! This article will talk through the how and why of putting this local application into lambda.

With all that said, let’s get started building our AWS lambda, IAM, and Bedrock resources in AWS, and then we’ll go over the python changes required to support lambda.

Terraform to Build AWS Resources

This article is going to be filled with AWS resources, but taking pictures of the console is a waste of time - it just changes too much! Plus, terraform is awesome <3 so let’s use that instead.

First, let’s establish our provider. We’re going to build two (one in us-east-1 for the lambda, and one in us-west-2 for the bedrock resources) for a very silly reason: when I was building this bot, the AI services in us-east-1 weren’t working. Our AWS TAM confirmed that Bedrock in us-east-1 is spotty while they build out the services, and recommended we use us-west-2 (Uw2) for AI stuff, so I moved all AI stuff to Uw2. Since then, everything has been perfect.

Then on line 21 we call our lambda module, which is local to our repo here. Note how we’re passing the named providers down to the module so it can also reference them, and build stuff in different regions.

| # Define Terraform provider | |

| terraform { | |

| required_version = "~> 1.7" | |

| required_providers { | |

| aws = { | |

| version = "~> 5.77" | |

| source = "hashicorp/aws" | |

| } | |

| } | |

| } | |

| # Download AWS provider | |

| provider "aws" { | |

| region = "us-east-1" | |

| } | |

| # Provider in AI region | |

| provider "aws" { | |

| alias = "west2" | |

| region = "us-west-2" | |

| } | |

| # Build lambda | |

| module "devopsbot_lambda" { | |

| source = "./lambda" | |

| # Pass providers | |

| providers = { | |

| aws = aws | |

| aws.west2 = aws.west2 | |

| } | |

| } |

Bedrock Guardrail with Terraform

Now let’s build our “guardrail” - that’s a resource that’s part of the Bedrock suite that can filter requests to Bedrock and responses back from Bedrock. We’ll walk through this file. There are a few really useful things we can configure, illustrated below:

Line 7 - the content policy, with several different types. The one shown is “INSULTS”, with a medium level of strictness.

Because it’s hard to find the list in the terraform docs or the Bedrock docs, the keywords used for “type” are: INSULTS, MISCONDUCT, SEXUAL, VIOLENCE, PROMPT_ATTACK, HATE

Line 14 - you’re able to filter sensitive information from making it to the model, and also to filter any sensitive information the model might send back to your users.

It’s notable here that Bedrock models run within AWS’s control, even 3rd party models, and those 3rd parties have no ability to read your data or practices, nor does AWS.

Line 20 is more complex - the other two types use keywords that are already established in AWS. “topic_policy_config” permits you to define new categories (“topics”) that should be policed. See the example below, which includes a category name, a definition, and an example question. This can be very helpful for other types of information your model shouldn’t be providing (“should I be making more money?”, “what do you think about company xxx”, “tell me a dirty joke”, etc.)

| resource "aws_bedrock_guardrail" "guardrail" { | |

| provider = aws.west2 | |

| name = "DevOpsBotGuardrail" | |

| blocked_input_messaging = "Your input has been blocked by our content filter. Please try again. If this is an error, discuss with the DevOps team." | |

| blocked_outputs_messaging = "The output generated by our system has been blocked by our content filter. Please try again. If this is an error, discuss with the DevOps team." | |

| description = "DevOpsBot Guardrail" | |

| content_policy_config { | |

| filters_config { | |

| input_strength = "MEDIUM" | |

| output_strength = "MEDIUM" | |

| type = "INSULTS" | |

| } | |

| # ... | |

| sensitive_information_policy_config { | |

| pii_entities_config { | |

| action = "ANONYMIZE" | |

| type = "US_SOCIAL_SECURITY_NUMBER" | |

| } | |

| } | |

| topic_policy_config { | |

| topics_config { | |

| name = "investment_topic" | |

| examples = ["Where should I invest my money ?"] | |

| type = "DENY" | |

| definition = "Investment advice refers to inquiries, guidance, or recommendations regarding the management or allocation of funds or assets with the goal of generating returns." | |

| } | |

| } | |

| } |
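To see where the guardrail plugs in on the python side, here’s a minimal sketch of the arguments for Bedrock’s `converse` call with a guardrail attached. The helper name, the model ID, and the guardrail ID and version are placeholders I made up for illustration, so swap in your own values.

```python
def build_converse_kwargs(model_id, user_text, guardrail_id, guardrail_version):
    """Build keyword arguments for bedrock-runtime's converse() call.

    Hypothetical helper: the name and argument list are illustrative only.
    """
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        # Attaching the guardrail asks Bedrock to screen both input and output
        "guardrailConfig": {
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
        },
    }


kwargs = build_converse_kwargs(
    model_id="anthropic.claude-3-sonnet-20240229-v1:0",  # placeholder model ID
    user_text="Where should I invest my money?",
    guardrail_id="abc123example",  # placeholder guardrail ID
    guardrail_version="DRAFT",
)
# With boto3 installed and credentials available, this would be passed as:
#   boto3.client("bedrock-runtime", region_name="us-west-2").converse(**kwargs)
```

Building the arguments in a plain function keeps the guardrail wiring easy to unit test without touching AWS.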

Now let’s build the Lambda!

Lambda w/ Terraform

We’ll be walking through this file to build and deploy our lambda.

First, let’s call our general data sources. Line 5 will get us our AWS account ID, which we’ll use for some IAM policy docs, and line 7 will get us our current region.

| ### | |

| # General data sources | |

| ### | |

| # Current AWS account id | |

| data "aws_caller_identity" "current" {} | |

| # Region | |

| data "aws_region" "current" {} |

Next we need to fetch the ARN of the secret in secrets manager that stores our slack secrets. You’ll have already created this in an earlier article in this series, but if not, go create it with the format on line 11.

Make sure the name on line 7 matches the exact name of your secret, and the region should be the default region for our AWS resources, in this example us-east-1.

| ### | |

| # Fetch secret ARNs from Secrets Manager | |

| # We don't want to store sensitive information in our codebase (or in terraform's state file), | |

| # so we fetch it from Secrets Manager | |

| ### | |

| data "aws_secretsmanager_secret" "devopsbot_secrets_json" { | |

| name = "DEVOPSBOT_SECRETS_JSON" | |

| } | |

| /* | |

| This secret should be formatted like this: | |

| {"SLACK_BOT_TOKEN":"xoxb-xxxxxx-arOa","SLACK_SIGNING_SECRET":"2cxxxxxxxxxxxxda"} | |

| */ |

IAM For Days

Next we configure who is permitted to assume the role we’re about to create. We want the lambda service to be able to assume it, and we aren’t using any further conditions (but feel free to lock down to a particular lambda if you want to be extra secure!).

IAM is a little finicky. It’s the best and worst part of AWS. The superpower here is never using static keys again, ever. Nothing to leak, nothing to lose.

On line 11 we create the IAM role we’ll be using for our lambda’s permissions. Note no policy yet, so no permissions granted.

| data "aws_iam_policy_document" "DevOpsBotIamRole_assume_role" { | |

| statement { | |

| effect = "Allow" | |

| principals { | |

| type = "Service" | |

| identifiers = ["lambda.amazonaws.com"] | |

| } | |

| actions = ["sts:AssumeRole"] | |

| } | |

| } | |

| resource "aws_iam_role" "DevOpsBotIamRole" { | |

| name = "DevOpsBotIamRole" | |

| assume_role_policy = data.aws_iam_policy_document.DevOpsBotIamRole_assume_role.json | |

| } |

Next, some IAM policy docs. First, we’re going to grant our lambda the rights to read the secret we looked up above, which we do on line 9-20.

We also need to grant our lambda the rights to list all secrets in order to validate that our secret exists, so we grant that against “*” target.

| resource "aws_iam_role_policy" "DevOpsBotSlack_ReadSecret" { | |

| name = "ReadSecret" | |

| role = aws_iam_role.DevOpsBotIamRole.id | |

| policy = jsonencode( | |

| { | |

| "Version" : "2012-10-17", | |

| "Statement" : [ | |

| { | |

| "Effect" : "Allow", | |

| "Action" : [ | |

| "secretsmanager:GetResourcePolicy", | |

| "secretsmanager:GetSecretValue", | |

| "secretsmanager:DescribeSecret", | |

| "secretsmanager:ListSecretVersionIds" | |

| ], | |

| "Resource" : [ | |

| data.aws_secretsmanager_secret.devopsbot_secrets_json.arn, | |

| ] | |

| }, | |

| { | |

| "Effect" : "Allow", | |

| "Action" : "secretsmanager:ListSecrets", | |

| "Resource" : "*" | |

| } | |

| ] | |

| } | |

| ) | |

| } |

Next up, we need to grant our lambda the ability to trigger our Bedrock models. There are a few different kinds of permissions we need to grant. Let’s walk through them.

On line 10-14 we grant the ability to call foundation models, like Anthropic’s Claude Sonnet. You can optionally limit to specific minor versions of these models if you’d like. I trust this lambda to call any model, so I’m using a star for any foundation model in the us-west-2 region.

Next, we need to grant the ability for our lambda to call Guardrails in the API request. Again, we trust our lambda to call any guardrail, so we’re targeting a star “*” in our account in the target model region.

On line 22-29, we’re granting this lambda the rights to use knowledge bases in us-west-2 to get contextual vectors for requests from your private data sources, like Confluence… wait, that’s a preview of the next article! Pretend you didn’t read this ;)

| resource "aws_iam_role_policy" "DevOpsBotSlack_Bedrock" { | |

| name = "Bedrock" | |

| role = aws_iam_role.DevOpsBotIamRole.id | |

| policy = jsonencode( | |

| { | |

| "Version" : "2012-10-17", | |

| "Statement" : [ | |

| # Grant permission to invoke bedrock models of any type in us-west-2 region | |

| { | |

| "Effect" : "Allow", | |

| "Action" : "bedrock:InvokeModel", | |

| "Resource" : "arn:aws:bedrock:us-west-2::foundation-model/*" | |

| }, | |

| # Grant permission to invoke bedrock guardrails of any type in us-west-2 region | |

| { | |

| "Effect" : "Allow", | |

| "Action" : "bedrock:ApplyGuardrail", | |

| "Resource" : "arn:aws:bedrock:us-west-2:${data.aws_caller_identity.current.account_id}:guardrail/*" | |

| }, | |

| # Grant permissions to use knowledge bases in us-west-2 region | |

| { | |

| "Effect" : "Allow", | |

| "Action" : [ | |

| "bedrock:Retrieve", | |

| "bedrock:RetrieveAndGenerate", | |

| ], | |

| "Resource" : "arn:aws:bedrock:us-west-2:${data.aws_caller_identity.current.account_id}:knowledge-base/*" | |

| }, | |

| ] | |

| } | |

| ) | |

| } |
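The three resource strings in that policy look similar but differ in one easy-to-miss way: foundation models are owned by AWS, so the account field in their ARN is empty (the “::” in the middle), while guardrails and knowledge bases live in your account. Here’s a small sketch that assembles all three; the helper name and the account ID are made up for illustration.

```python
def bedrock_arn(region, resource, account_id=""):
    """Assemble a Bedrock ARN; account_id stays empty for AWS-owned resources."""
    return f"arn:aws:bedrock:{region}:{account_id}:{resource}"


account_id = "123456789012"  # placeholder account ID

foundation_models = bedrock_arn("us-west-2", "foundation-model/*")
guardrails = bedrock_arn("us-west-2", "guardrail/*", account_id)
knowledge_bases = bedrock_arn("us-west-2", "knowledge-base/*", account_id)

for arn in (foundation_models, guardrails, knowledge_bases):
    print(arn)
```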

Next we need a foundational lambda IAM policy - the ability to write logs! We want our lambda to be able to put logs into cloudwatch, and we have to grant that ability.

First, we grant our lambda the ability to create log groups (line 9-13), and then put logs into it (line 14-22).

| resource "aws_iam_role_policy" "DevOpsBotSlackTrigger_Cloudwatch" { | |

| name = "Cloudwatch" | |

| role = aws_iam_role.DevOpsBotIamRole.id | |

| policy = jsonencode( | |

| { | |

| "Version" : "2012-10-17", | |

| "Statement" : [ | |

| { | |

| "Effect" : "Allow", | |

| "Action" : "logs:CreateLogGroup", | |

| "Resource" : "arn:aws:logs:us-east-1:${data.aws_caller_identity.current.id}:*" | |

| }, | |

| { | |

| "Effect" : "Allow", | |

| "Action" : [ | |

| "logs:CreateLogStream", | |

| "logs:PutLogEvents" | |

| ], | |

| "Resource" : [ | |

| "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.id}:log-group:/aws/lambda/DevOpsBot:*" | |

| ] | |

| } | |

| ] | |

| } | |

| ) | |

| } |

Creating an ARM Lambda Layer for Slack Bolt

Next, we’re going to create a ZIP file of the python3.12 dependencies for slack bolt so we can create a lambda layer.

Let’s talk foundational concepts in order to understand what’s going on here.

The underlying compute that lambda runs on has lots of (usually AWS-centric) dependencies. That’s great! It means we don’t need to do anything special to get boto3, which we’ll use to talk to AWS APIs.

However, it obviously doesn’t have everything, and Slack Bolt, the dependency set we’ll use to receive and process slack webhooks, isn’t included. How should we include it?

Well, on each launch we could have our service go and get the dependency for slack bolt and install it, but that’s a wildly repetitive and data-heavy task for a lambda. Lambdas are intended to run super fast and terminate, not to go download dependencies (as much as we can avoid it!).

The clever solution the lambda engineering team established was to build “layers”. A layer is a package of software that can be applied to a lambda and is available to the lambda as soon as it starts. There are some great built-in layers from AWS that we’ll talk about shortly, but we’re going to create our own.

I’m on an Apple M3 Pro, whose CPU is ARM-based (rather than Intel). To make my life easier, I’m going to run this lambda in ARM mode (you can choose the CPU architecture of your lambda when you create it). ARM has some side benefits, like being slightly less energy intensive, and slightly faster than Intel chipsets. I’m not an expert here though, I’m doing this because it’s convenient.

You don’t need to run any of these commands if you’re using my code in github. This is a walkthrough of how I built the ZIP binary that’s in that repo, in case you don’t trust that binary and want to compile it yourself (which you should do, why do you trust me? Go make it yourself!).

On line 2 we establish a virtual environment with Python 3.12 explicitly, which is necessary since Mac prefers Python 3.11 as of this publishing.

Then we flip on the venv, on line 4.

Then we remove any old files on line 6, and build the file path we’ll need on line 7.

Then on line 8 we use pip3 to install slack bolt to a particular path (this path is annoyingly required for lambda layers, since lambda will look in this location for dependencies).

And then, commented out on line 12, we created the zip file using that command.

Again, you don’t need to do any of this. You can leave that command commented out. It’s included to show how we got this binary file (or for you to go build it yourself). I included the binary file in the github repo.

| # Create new python 3.12 venv | |

| python3.12 -m venv . | |

| # Activate new env | |

| source ./bin/activate | |

| # Remove old files and create new ones | |

| rm -rf lambda/slack_bolt | |

| mkdir -p lambda/slack_bolt/python/lib/python3.12/site-packages/ | |

| pip3 install slack_bolt -t lambda/slack_bolt/python/lib/python3.12/site-packages/. --no-cache-dir | |

| */ | |

| # Committing the zip file directly, rather than creating it, so don't have to commit huge number of files | |

| # data "archive_file" "slack_bolt" { | |

| # type = "zip" | |

| # source_dir = "${path.module}/slack_bolt" | |

| # output_path = "${path.module}/slack_bolt_layer.zip" | |

| # } |

Next we actually build the slack bolt lambda layer.

On line 2, we give it a name. We’ll see this in the console.

On line 3, we tell it to go find the binary zip file in our module path.

On line 4, we tell it to sha256 the zip file, and if it changes, update the layer (which will matter more if you’re adding other stuff to that layer).

On line 6, we establish which runtimes are compatible. Since we’re defining the python 3.12 path, it can only be python 3.12.

Then on line 7 we establish the chipsets that can use it. Some tools will be cross-compatible with different chipsets, but I tested the default build of slack bolt, and it’s not compatible in a few libraries, so this is locked to specifically what we’ll establish our lambda as using.

| resource "aws_lambda_layer_version" "slack_bolt" { | |

| layer_name = "SlackBolt" | |

| filename = "${path.module}/slack_bolt_layer.zip" | |

| source_code_hash = filesha256("${path.module}/slack_bolt_layer.zip") | |

| compatible_runtimes = ["python3.12"] | |

| compatible_architectures = ["arm64"] | |

| } |
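If you’d rather build the layer zip from python than from terraform’s archive_file, here’s a rough sketch using only the standard library. The important part is that paths inside the zip start at `python/...`, which is where lambda looks for layer dependencies. The function name and the stand-in module are mine, not from the repo.

```python
import tempfile
import zipfile
from pathlib import Path


def zip_layer(source_dir, output_path):
    """Zip source_dir so archive paths are relative to it (e.g. python/lib/...)."""
    source_dir = Path(source_dir)
    with zipfile.ZipFile(output_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source_dir.rglob("*")):
            if path.is_file():
                archive.write(path, path.relative_to(source_dir).as_posix())
    return output_path


# Demo with a throwaway directory standing in for lambda/slack_bolt
with tempfile.TemporaryDirectory() as tmp:
    site_packages = Path(tmp, "slack_bolt/python/lib/python3.12/site-packages")
    site_packages.mkdir(parents=True)
    (site_packages / "example_module.py").write_text("# stand-in for a real package\n")

    zip_path = zip_layer(Path(tmp, "slack_bolt"), Path(tmp, "slack_bolt_layer.zip"))
    with zipfile.ZipFile(zip_path) as archive:
        layer_files = archive.namelist()

print(layer_files)
```

In real use you’d point `zip_layer` at the `lambda/slack_bolt` directory that the pip3 install populated.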

Lets Build That Lambda

Next, let’s actually build our lambda. Lambda doesn’t let you pass the code directly in, it wants a zip file. And terraform lets us build a zip file, so let’s do that.

On line 4, we tell it where our python code is, and on line 5, we tell it where to put the zip file.

| # Zip up python lambda code | |

| data "archive_file" "devopsbot_slack_trigger_lambda" { | |

| type = "zip" | |

| source_file = "python/devopsbot.py" | |

| output_path = "${path.module}/devopsbot.zip" | |

| } |

Next, here’s our lambda function terraform.

On line 2, we point at the zip file we just built, and on line 3, we assign a name to our lambda.

On line 4 we assign the IAM role we built above to the lambda, to enable it to do stuff in AWS and spend some money on model calls.

On line 5, we assign a “handler”. This is basically an instruction to the underlying runtime on what file and function to launch. When the function launches, it’ll unzip the lambda zip file, which will leave a single file: devopsbot.py. This handler says to find the file called devopsbot (in the default language extension, which is .py for python) and run the function “lambda_handler”. Now, we haven’t built lambda_handler yet - we didn’t need it for previous articles, so we’ll build that next after this terraform stuff.

On line 7 we assign the memory size. Memory and CPU are assigned together, so bumping the memory gives the function more CPU.

On line 8, we assign python 3.12, and on line 9, we assign architecture arm64 only.

Line 10, “publish” asks whether to publish a new version of the lambda when a change is detected. If you have some other automation to test your code before publishing a new version, set this to false. I test locally and in dev envs, so I want to publish when we change our code.

On line 13, we assign some layers. First, on line 15, we assign version 12 of the “AWS-Parameters-and-Secrets-Lambda-Extension-Arm64” layer, which AWS provides to help fetch secrets rapidly and securely. 12 was the latest version when I wrote this.

And on line 18, we tell our lambda to use the layer we built above.

And finally, on line 21, the source code hash that tells our lambda to update when the zip file that represents our lambda code is updated. This is necessary for terraform to detect that zip file has changed, and push a new version.

| resource "aws_lambda_function" "devopsbot_slack" { | |

| filename = "${path.module}/devopsbot.zip" | |

| function_name = "DevOpsBot" | |

| role = aws_iam_role.DevOpsBotIamRole.arn | |

| handler = "devopsbot.lambda_handler" | |

| timeout = 30 | |

| memory_size = 512 | |

| runtime = "python3.12" | |

| architectures = ["arm64"] | |

| publish = true | |

| # Layers are packaged code for lambda | |

| layers = [ | |

| # This layer permits us to ingest secrets from Secrets Manager | |

| "arn:aws:lambda:us-east-1:${data.aws_caller_identity.current.id}:layer:AWS-Parameters-and-Secrets-Lambda-Extension-Arm64:12", | |

| # Slack bolt layer to support slack app | |

| aws_lambda_layer_version.slack_bolt.arn, | |

| ] | |

| source_code_hash = data.archive_file.devopsbot_slack_trigger_lambda.output_base64sha256 | |

| } |
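The `output_base64sha256` value terraform compares is just a base64-encoded SHA-256 digest of the zip file. Here’s a tiny sketch of the same calculation so you can see why any change to the code produces a different hash. The byte strings are made-up stand-ins for the real zip contents.

```python
import base64
import hashlib


def base64_sha256(data: bytes) -> str:
    """Return the base64-encoded SHA-256 digest of some bytes."""
    return base64.b64encode(hashlib.sha256(data).digest()).decode("ascii")


old_zip = b"pretend these are the bytes of devopsbot.zip"
new_zip = b"pretend these are the bytes of devopsbot.zip, after an edit"

print(base64_sha256(old_zip))
print(base64_sha256(new_zip))
```

Two different inputs give two different hashes, and that difference is what tells terraform to push a new version.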

To finish off our lambda terraform, let’s create a lambda alias called “Latest” (with a capital L, as opposed to “$LATEST”, the implicit qualifier that always points at the newest unpublished code. Yes, I suck at naming. Please rename this to something better!).

We need an alias to point at the latest published version so we can point line 8, the Function URL, at it. Function URLs are excellent - they let an inbound HTTP POST trigger the function, and the Function URL points at a particular Alias.

And finally, on line 14, we create an output to print the function URL web address. We’ll use this later when we update our slack app to send POSTs to our Lambda, instead of our local ngrok install.

| # Publish alias of new version | |

| resource "aws_lambda_alias" "devopsbot_alias" { | |

| name = "Latest" | |

| function_name = aws_lambda_function.devopsbot_slack.arn | |

| function_version = aws_lambda_function.devopsbot_slack.version | |

| } | |

| # Point lambda function url at new version | |

| resource "aws_lambda_function_url" "DevOpsBot_Slack_Trigger_FunctionUrl" { | |

| function_name = aws_lambda_function.devopsbot_slack.function_name | |

| authorization_type = "NONE" | |

| qualifier = aws_lambda_alias.devopsbot_alias.name | |

| } | |

| # Print the URL we can use to trigger the bot | |

| output "DevOpsBot_Slack_Trigger_FunctionUrl" { | |

| value = aws_lambda_function_url.DevOpsBot_Slack_Trigger_FunctionUrl.function_url | |

| } |

That’s a few different moving parts, so let’s talk about what’ll happen when you want to update this code:

You make a change to the python code and test it locally. You save your changes and are ready to publish a version.

You do a PR against the python code source with your changes, and merge it.

Terraform in your CI (or from local, if you’re feeling adventurous) will trigger. It’ll:

Hash your code, and notice there’s a change. It’ll recreate the python zip file.

The lambda will notice the SHA256 of the zip file has changed, and will publish a new version of the code.

The “Latest” alias we just created will notice the new version and will update the alias to point at the latest version.

The Function URL doesn’t change. That’s really important. It means that once you set the webhook address in slack app configuration, you don’t need to change it each time you make a change to your python code.

And that’s just awesome.

Let’s talk Python changes before we go update our slack app.

Python + Lambda == <3

You’d think we’re done, and we just need to apply our terraform, but there are some things we need to add, because Lambda and local development just work a little differently.

For one, how we fetch secrets is different - in our local version, we establish an AWS client and do a GET using the API to get the secret value. In Lambda, we use the SSM layer, which works differently (and is way faster!).

Let’s process that change before we move on to other differences.

Since we have a function call to fetch our secrets in local mode, let’s just create a slightly modified function that can fetch secrets using the SSM layer.

On line 2, we accept a secret name (notably not a region, I believe the SSM layer assumes same-region secret access and I didn’t investigate over-riding that because I didn’t need to).

On line 3 we define our SSM endpoint as “localhost” on port 2773, which looks weird at first glance. The SSM layer launches before our code runs and starts a local web listener on http/2773 to process requests. Both are default values.

On line 6 we create our headers for the fetch. This is also default behavior: the Lambda runtime sets “AWS_SESSION_TOKEN” as an env var for code to read, and the SSM layer accepts it as the auth token, so we don’t have to fetch, construct, or store an additional secret to fetch other secrets.

On line 10, we fetch the secret, and if we have an error we raise it on line 13.

If it all worked, we return the secret on line 22.

| # Get the secret using the SSM lambda layer | |

| def get_secret_ssm_layer(secret_name): | |

| secrets_extension_endpoint = "http://localhost:2773/secretsmanager/get?secretId=" + secret_name | |

| # Create headers | |

| headers = {"X-Aws-Parameters-Secrets-Token": os.environ.get('AWS_SESSION_TOKEN')} | |

| # Fetch secret | |

| try: | |

| secret = requests.get(secrets_extension_endpoint, headers=headers) | |

| except requests.exceptions.RequestException as e: | |

| print("Had an error attempting to get secret from AWS Secrets Manager:", e) | |

| raise e | |

| # Print happy joy joy | |

| print("🚀 Successfully got secret", secret_name, "from AWS Secrets Manager") | |

| # Decode secret string | |

| secret = json.loads(secret.text)["SecretString"] # load the Secrets Manager response into a Python dictionary, access the secret | |

| # Return the secret | |

| return secret |
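One thing the function above doesn’t do is check the HTTP status, so a 4xx from the extension would sail through until the json decode fails. Here’s a hedged sketch of an alternative that uses only the standard library, where `urlopen` raises on error statuses. The function names are mine, and unlike the original, this version returns the decoded dictionary rather than the raw secret string.

```python
import json
import os
import urllib.parse
import urllib.request


def build_secret_request(secret_name, session_token, port=2773):
    """Build the request for the extension's local HTTP endpoint."""
    url = (
        f"http://localhost:{port}/secretsmanager/get?secretId="
        + urllib.parse.quote(secret_name, safe="")
    )
    return urllib.request.Request(
        url, headers={"X-Aws-Parameters-Secrets-Token": session_token}
    )


def parse_secret_response(response_text):
    """Pull SecretString out of the response and decode the JSON inside it."""
    return json.loads(json.loads(response_text)["SecretString"])


def get_secret(secret_name):
    """Fetch and decode a secret; only works inside Lambda with the layer attached."""
    request = build_secret_request(secret_name, os.environ["AWS_SESSION_TOKEN"])
    # urlopen raises urllib.error.HTTPError on 4xx/5xx responses
    with urllib.request.urlopen(request, timeout=5) as response:
        return parse_secret_response(response.read().decode("utf-8"))


# Offline demo of the two pure helpers, using a made-up response body
request = build_secret_request("DEVOPSBOT_SECRETS_JSON", "example-session-token")
sample = json.dumps({"SecretString": json.dumps({"SLACK_BOT_TOKEN": "xoxb-example"})})
secrets = parse_secret_response(sample)
```

Splitting the request building and response parsing out of the network call means most of this can be tested on your laptop, with no lambda required.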

The input is received a little differently also. In local mode, we start some slack listeners that just keep running and processing messages. But in lambda-land, we receive a single inbound POST and need to process it.

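The event Lambda hands us is a python dictionary, and our slack webhook rides inside it as a json string under the “body” key. If you print the event it looks like invalid json, because the outer dictionary prints with single-quoted strings and the inner json (our slack webhook) uses double quotes. In order to decode that payload, we need to convert the body to a dictionary with a new function.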

On line 1, we receive the event. This is the outer json payload with everything in it (including headers and stuff).

On line 3, we dump it with json, and then on line 4, convert to a dictionary.

On line 7, we read the “body”, then use json.loads to format it as valid json on line 8.

Then we return the body on line 11.

| def isolate_event_body(event): | |

| # Dump the event to a string, then load it as a dict | |

| event_string = json.dumps(event, indent=2) | |

| event_dict = json.loads(event_string) | |

| # Isolate the event body from event package | |

| event_body = event_dict["body"] | |

| body = json.loads(event_body) | |

| # Return the event | |

| return body |
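Function URL events also carry an `isBase64Encoded` flag, and when it’s true the body needs a base64 decode before the json decode. I haven’t seen slack webhooks arrive that way, so treat this as a defensive sketch rather than something the bot requires. The function name is made up.

```python
import base64
import json


def decode_event_body(event):
    """Return the webhook payload from a Lambda Function URL event as a dict."""
    raw_body = event.get("body") or "{}"
    # Function URL events flag base64-encoded bodies with isBase64Encoded
    if event.get("isBase64Encoded"):
        raw_body = base64.b64decode(raw_body).decode("utf-8")
    return json.loads(raw_body)


plain_event = {"body": '{"type": "event_callback"}', "isBase64Encoded": False}
encoded_event = {
    "body": base64.b64encode(b'{"type": "event_callback"}').decode("ascii"),
    "isBase64Encoded": True,
}

print(decode_event_body(plain_event))
print(decode_event_body(encoded_event))
```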

Finally, let’s build the actual lambda_handler function, which our lambda calls directly.

On line 6, we isolate the event_body (the slack webhook payload) from the “event” (the outer payload that lambda provides to us).

On line 9, we check for duplicate events by passing our event headers and event content. If it’s a duplicate message, we return an http/200 and exit.

There’s a really long answer for why we do this, but short answer is we should use an async calling method to return a response to slack within the 3 second time-limit, which I’m not (yet) doing, so slack thinks we’ve timed out a lot and sends new messages. I believe I’m catching all these with this check, but a better engineer than me would use a front-door lambda that’d immediately return a healthy response, and then pass the information to a second-tier lambda. That’s beyond the scope of what we’re building today, but maybe in a future article!

On line 15, we have to have a special handler for the “challenge” behavior that slack does on first add of the webhook destination - it makes sure you’re listening and behaving how it expects a webhook destination to behave, so we do.

On line 22, we print the event for debugging, and on line 25 we go fetch our secrets package from the SSM lambda layer (super fast!).

| def lambda_handler(event, context): | |

| print("🚀 Lambda execution starting") | |

| # Isolate body | |

| event_body = isolate_event_body(event) | |

| # Check for duplicate event or trash messages, return 200 and exit if detected | |

| if check_for_duplicate_event(event["headers"], event_body["event"]): | |

| return generate_response( | |

| 200, "❌ Detected a re-send or edited message, exiting" | |

| ) | |

| # Special challenge event for Slack. If receive a challenge request, immediately return the challenge | |

| if "challenge" in event_body: | |

| return { | |

| "statusCode": 200, | |

| "body": json.dumps({"challenge": event_body["challenge"]}), | |

| } | |

| # Print the event | |

| print("🚀 Event:", event) | |

| # Fetch secret package | |

| secrets = get_secret_ssm_layer(bot_secret_name) |
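One ordering detail to watch in the handler above: slack’s challenge payload has a “challenge” key but no “event” key, so reading `event_body["event"]` before the challenge check would raise a KeyError on that request. Here’s a sketch that answers the challenge first and then looks for slack’s retry header. The repo’s `check_for_duplicate_event` isn’t shown in this article, so the retry check here is a hypothetical stand-in, not the author’s implementation.

```python
import json


def early_response(headers, body):
    """Return a response dict for requests needing no model call, else None."""
    # Slack's URL verification payload has "challenge" but no "event" key,
    # so answer it before anything reads body["event"]
    if "challenge" in body:
        return {"statusCode": 200, "body": json.dumps({"challenge": body["challenge"]})}

    # Slack marks re-sent events with an X-Slack-Retry-Num header
    lowered = {key.lower(): value for key, value in headers.items()}
    if "x-slack-retry-num" in lowered:
        return {"statusCode": 200, "body": "Ignoring Slack retry"}

    return None


challenge = early_response({}, {"type": "url_verification", "challenge": "abc123"})
retry = early_response({"X-Slack-Retry-Num": "1"}, {"event": {"type": "message"}})
fresh = early_response({}, {"event": {"type": "message"}})
```

A `None` result means it’s a fresh event and the handler should carry on to the secrets fetch and the model call.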

Continuing the handler, we load up the secrets and extract the token and signing_secret on lines 4-6. It’s way faster to fetch a single secret that contains lots of secrets than to fetch them individually. Fear data calls when making lambdas, they’ll slow you down.

On line 10, we register a slack app.

On line 14, we register the bedrock AI client.

On line 17 and 23, we register two handlers for different events. Either a message (DM) or app_mention (@ in slack), and on both, we trigger the handle_message_event function, which has quickly become the main core of logic for this program.

Then on line 30-31, we start up the slack app and process any events, which there should be exactly 1 of, because we only started because there’s 1, and we die immediately after processing the one.

| def lambda_handler(event, context): | |

| ... | |

| # Disambiguate the secrets with json lookups | |

| secrets_json = json.loads(secrets) | |

| token = secrets_json["SLACK_BOT_TOKEN"] | |

| signing_secret = secrets_json["SLACK_SIGNING_SECRET"] | |

| # Register the Slack handler | |

| print("🚀 Registering the Slack handler") | |

| app, bot_name = register_slack_app(token, signing_secret) | |

| # Register the AWS Bedrock AI client | |

| print("🚀 Registering the AWS Bedrock client") | |

| bedrock_client = create_bedrock_client(model_region_name) | |

| # Responds to app mentions | |

| @app.event("app_mention") | |

| def handle_app_mention_events(client, body, say): | |

| print("🚀 Handling app mention event") | |

| handle_message_event(client, event_body, say, bedrock_client, app, token, bot_name) | |

| # Respond to file share events | |

| @app.event("message") | |

| def handle_message_events(client, body, say, req): | |

| print("🚀 Handling message event") | |

| handle_message_event(client, event_body, say, bedrock_client, app, token, bot_name) | |

| # Initialize the handler | |

| print("🚀 Initializing the handler") | |

| slack_handler = SlackRequestHandler(app=app) | |

| return slack_handler.handle(event, context) |

That should be it! Apply your terraform, and then let’s go update our slack app to point at our Lambda Function URL.

Update Slack App Webhook, Test

Let’s run our terraform, and we’ll assume everything worked. If it did, it’ll output something like the below. This is a web address that can receive inbound traffic and process it with your lambda. Copy that URL down.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

Apply complete! Resources: 0 added, 0 changed, 0 destroyed.

Outputs:

lambda_arn = "https://35kxxxxxxxxxxxxxxxxxxxew.lambda-url.us-east-1.on.aws/"

Let’s head back to your Slack App configuration. You can find your slack apps at this URL:

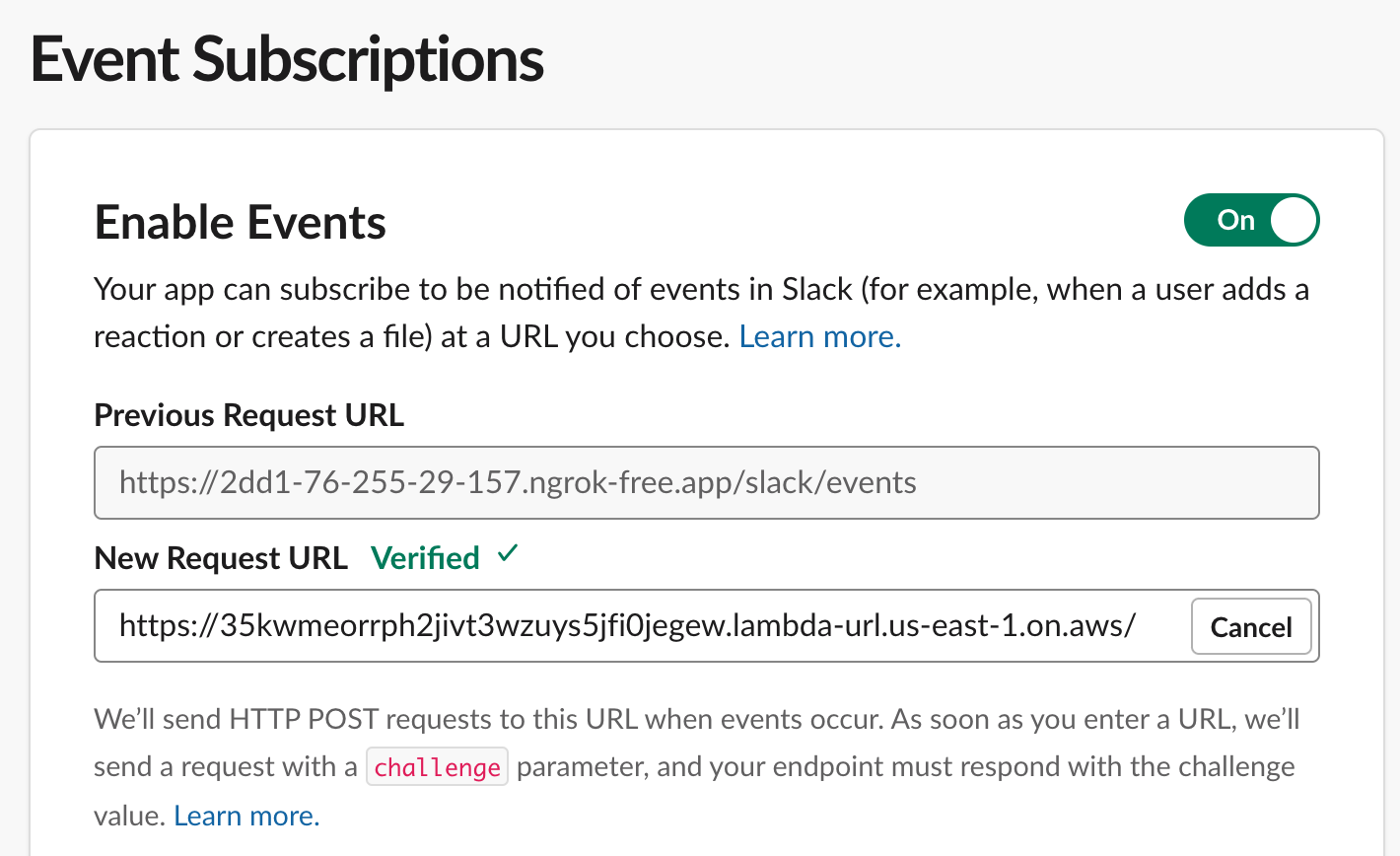

Head over to Features —> Event Subscriptions

You’ll see your ngrok target. Click “Change” on the right, and paste in the lambda URL. Click off that field, and within 3 seconds, you’ll either see “Verified” (yay!) or an error message (boo). If you see an error, go look at your lambda logs, which we’ll discuss next.

If it worked, make sure to hit save!

Let’s send a message in our Slack. I’m going to DM the bot directly.

Hopefully after a second, you see your bot responding to you. If so, OMG THAT’S SO COOL! If not, let’s go debug it.

Lambda Debugging

Let’s go look at our lambda logs. Head over to your AWS console and to the Lambda service in your region:

https://us-east-1.console.aws.amazon.com/lambda/home?region=us-east-1#/discover

Find your function, and click into it. Click on the Monitor tab, and then View CloudWatch Logs.

At the very top, you’ll see the AWS Parameters and Secrets Lambda Extension start up and begin listening on tcp/2773 localhost for secrets requests. We’ll fetch our secret in a few hundred milliseconds.

And near the bottom, you’ll see Lambda printing out the conversation content payload, as well as the conversation user ID.

Look at any messages right before the lambda exits, particularly ones that start with “failed to start listener”, which means there’s some error processing a message. The logs are pretty verbose, and will tell you exactly which permissions are missing, or if some component is missing.
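If the logs aren’t enough, it can help to feed your handler a fake event locally and look at what comes back. Here’s a rough sketch of that idea. The event shape is a pared-down guess at what a Function URL delivers (a real event has more fields), and `stub_handler` is a stand-in you’d replace with your real `lambda_handler`.

```python
import json


def make_function_url_event(payload, headers=None):
    """Build a pared-down stand-in for the event a Function URL delivers."""
    return {
        "headers": headers or {"content-type": "application/json"},
        "body": json.dumps(payload),
        "isBase64Encoded": False,
    }


def stub_handler(event, context):
    """Stand-in for lambda_handler that only answers Slack's challenge."""
    body = json.loads(event["body"])
    return {"statusCode": 200, "body": json.dumps({"challenge": body.get("challenge")})}


event = make_function_url_event({"type": "url_verification", "challenge": "abc123"})
response = stub_handler(event, None)
print(response)
```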

Further Help

Please feel free to open issues or feature requests against the MIT open source codebase:

If you’d like further help, please feel free to message me directly (Paid subscribers only), and I’d love to help!

Summary

There’s lots more to do here, but we’ll treat future features as small bites, rather than this sprawling epic of building an AI chat bot.

In this article, we implemented a Guardrail, Lambda, Lambda Alias, Lambda Function URL, and updated all our python code to support the same.

That was a ton of work!

Then we updated our slack app to point at our new lambda function URL, and tested it. In my example, it worked! (yay!). And if it didn’t work, we talked about how you can troubleshoot it.

If you implement this in your environment, I would LOVE to hear about it. Did it work? Is it awesome? Did I miss something important? Please let me know!

Thanks y’all. Good luck out there.

kyler