🔥Building a Slack Bot with AI Capabilities - Part 9, Reducing Cost and Complexity with a Lambda Receiver Pattern🔥

aka, respond "received" and then die

This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

This article is part of a series of articles, because 1 article would be absolutely massive.

Part 1: Covers how to build a Slack bot in websocket mode and connect to it with Python

Part 4: How to convert your local script to an event-driven serverless, cloud-based app in AWS Lambda

Part 7: Streaming token responses from AWS Bedrock to your AI Slack bot using converse_stream()

Part 8: ReRanking knowledge base responses to improve AI model response efficacy

Part 9 (this article!): Adding a Lambda Receiver tier to reduce cost and improve Slack response time

Hey all!

Welcome to the final entry in this comprehensive series on how to build and iteratively improve a GenAI-powered Slack bot with Lambda and Bedrock. This series has been an incredible journey, and I’m trying to close it out as well as I can by establishing a proper receiver.

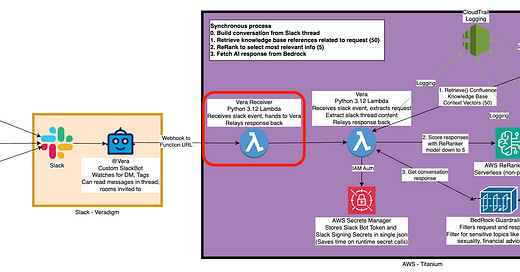

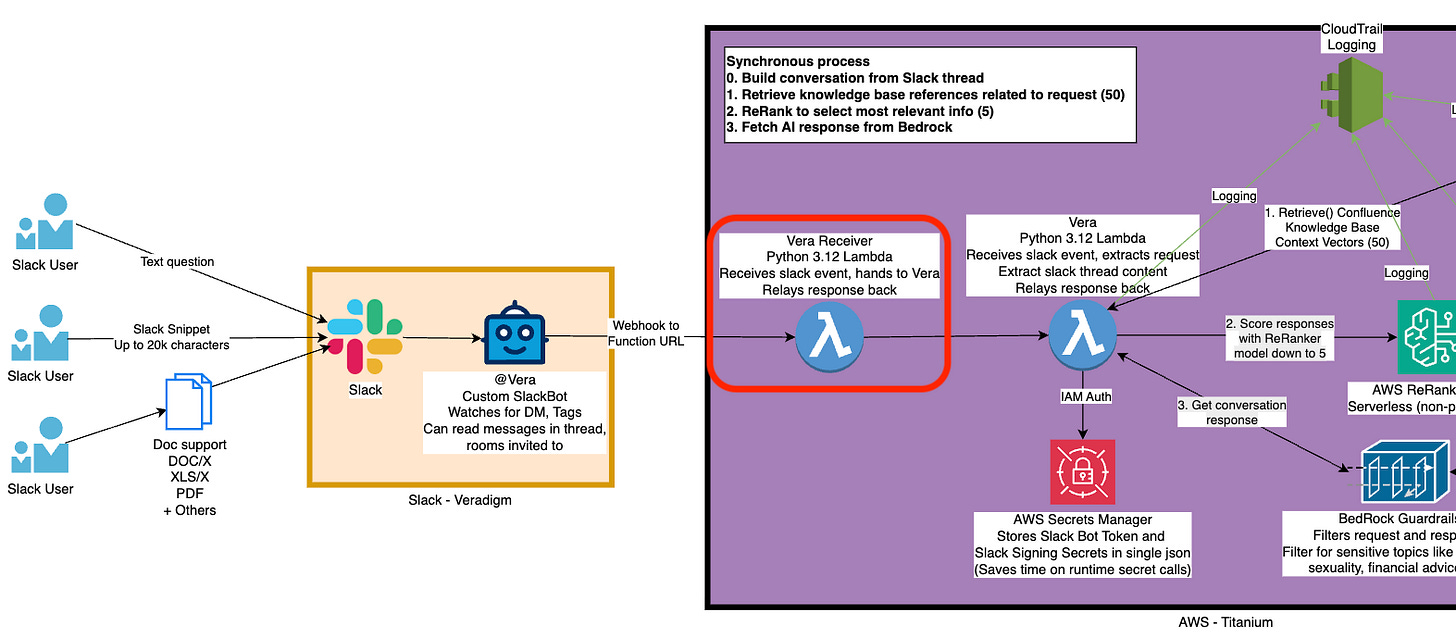

First, let’s go over what we’ve covered so far. We established how to build a Slack bot, set its permissions in Slack, and trigger it. Then we talked about what a Slack webhook payload looks like, how to read it, and how to walk over a Slack thread to construct a conversation. We do that with Lambda, then we convert it all to the .converse() standard API at AWS that fronts all of their models.

We put it all in Lambda, then we added a Confluence knowledge base, re-ranking (with .rerank()), guardrails for security and standardization, and got it all working and logging.

That put all of that logic into a single Lambda, which of course takes longer than 3 seconds to run, and Slack’s API considers that a failure - it’s just too long! There’s no good way to get around that inside one function - async() methods aren’t reliable, and we can’t respond to the Slack HTTP session without closing it, which ends our Lambda’s run.

So we establish an asynchronous pattern - we’ll receive the Slack webhook on the front-end with a stand-alone Lambda called a Receiver. Its only job is to hand off the webhook payload to the real worker Lambda, respond to Slack with an A-OK (actually HTTP 200), and then shut down.

That way our worker Lambda can run and take all the time it needs, and Slack doesn’t think we’ve failed to receive the webhook.
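To make the pattern concrete, here is a minimal sketch of what a Receiver could look like. It is not the exact code from this series: the `build_handler` helper, the `WORKER_FUNCTION_NAME` environment variable, and the `slack-bot-worker` default are all hypothetical names I'm using for illustration. The one real AWS call is boto3's Lambda `invoke()` with `InvocationType="Event"`, which queues the worker and returns right away instead of waiting for it to finish.

```python
import json
import os

# Hypothetical setting: the name of the worker Lambda to hand off to.
WORKER_FUNCTION_NAME = os.environ.get("WORKER_FUNCTION_NAME", "slack-bot-worker")


def build_handler(lambda_client, worker_name=WORKER_FUNCTION_NAME):
    """Build a Receiver handler around any client that has an invoke() method."""

    def handler(event, context):
        # Forward the whole incoming event untouched so the worker sees
        # exactly what Slack sent (headers and body).
        lambda_client.invoke(
            FunctionName=worker_name,
            InvocationType="Event",  # asynchronous: do not wait for the worker
            Payload=json.dumps(event).encode("utf-8"),
        )
        # Tell Slack we received the webhook, then we're done.
        return {"statusCode": 200, "body": "received"}

    return handler


def lambda_handler(event, context):
    # Entry point for Lambda. boto3 is imported here so the helper above
    # can be exercised locally with a fake client.
    import boto3

    return build_handler(boto3.client("lambda"))(event, context)
```

Passing the client in keeps the hand-off logic easy to test without touching AWS, and the Receiver's IAM role would need permission to invoke the worker function for the real call to succeed.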

And it’s surprisingly easy to implement! Let’s walk through what our new Lambda looks like, and what we can remove from our worker Lambda because of that separation of duties.
