🔥Let’s Do DevOps: Auto-Approve Safe Terraform Apply in Azure DevOps CI/CD

This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

Hey all!

I’ve written a series of blogs about running an Azure DevOps Terraform CI/CD in an enterprise environment (for more info please see my profile). One item my business very much wanted, and which CI/CDs twist themselves up in knots to support, is manual approvals for particular stages or steps.

For instance, say we want terraform plan to run automatically, but before terraform apply runs we want the environment owner to approve the run. I worked hard on that, achieved it with Azure DevOps environments, and walk through the steps here:

Azure DevOps YML Terraform Pipeline and Pre-Merge Pull Request Validation


However, I had a recent addendum to that request: Is it possible for us to require environment approval only if destructive changes are being made? This request is basically a conditional approver, which is a weakly supported feature in the CI/CDs I’m familiar with.

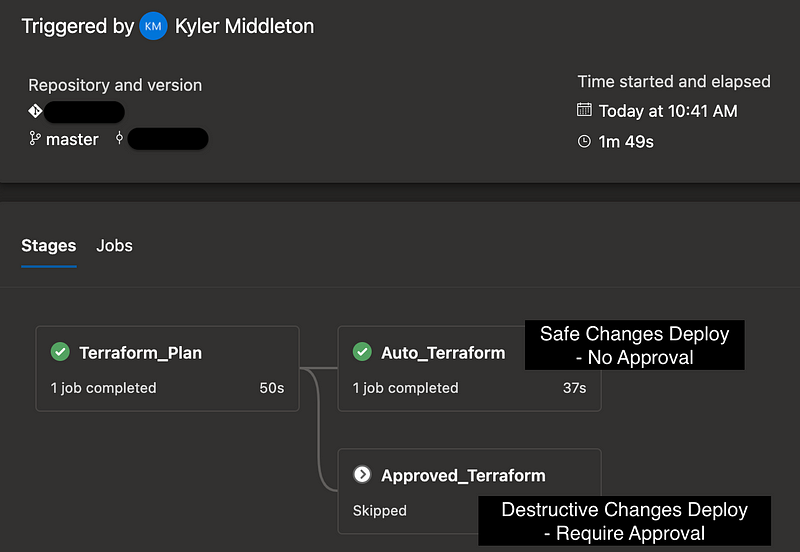

Environments (the method we use for manual approval in Azure DevOps) can only be attached at the stage or job level, not to an individual task, which makes this more complicated than it seems. I ended up duplicating the apply stage exactly, with one copy referencing a gated approval environment (for potentially destructive changes) and one copy requiring no gating approval (for changes deemed safe).

This change would establish real Configuration Deployment where PR merge == Terraform deployment

Given the focus on identifying and reducing friction for safe changes, I also added CI/CD triggers so that when code is merged into our master branch, the deploy is automatically queued, and if the change is classified as safe, automatically deployed without any further interaction.

This pattern will reduce manual approvals by around 80% and permits large-scale concurrent deployment of safe changes across many environments, so we can manage environments at scale with little friction. This helps our ops team manage environments, and helps our app teams manage their apps.

Let’s talk concepts and how we’re using them here.

Concepts & Execution

Environments & Approvals

Azure DevOps uses environments to track runs. Pipeline stages can reference a specific environment where the job is run. This can be a literal environment, such as a K8s stack, or a “logical environment”, such as a specific group’s owned resources.

Approvals can be set on environments, which means the CI/CD run will pause until an approval is met. These jobs won’t queue, so if 10 jobs are waiting, and job number 8 receives an approval, it will be worked immediately. This makes it a great fit for controlling and authorizing risky workflows, like IaC approval flows.

In my previous blogs I talked about using a gating “approved” environment for all terraform apply. In this blog we’ll update our logic:

Deployment pipeline runs automatically at merge time.

terraform plan is generated and saved.

Read the plan document, use grep to identify the “safe” pattern, and set a variable.

If the “safe” variable is set, deploy without approval.

If the “safe” variable is not set, use the stage with the approved environment. Pause until approval is granted, then proceed.

Azure DevOps Work Units: Stages, Jobs, Tasks

Stages are utilized only when pipeline work needs to be grouped into units and executed in a controlled way. For instance, stages can be targeted at different runners, or executed at different times. Stages are a grouping of jobs.

Jobs are a group of work to be run on a single host, at a single time. Once a job is triggered, it will run as a unit. Jobs are composed of ordered tasks.

Steps or Tasks are individual instructions. For instance, download this file, run this command, execute this discrete work unit. They are specific and prescriptive.

    stages:
    - stage: Terraform_Plan  # <-- This is a stage definition
      jobs:
      - deployment: Terraform_Plan  # <-- This is a job definition
        displayName: Terraform_Plan
        pool: $(pool)
        workspace:
          clean: all
        continueOnError: 'false'
        environment: 'TerraformPlan_Environment'
        strategy:
          runOnce:
            deploy:
              steps:
              - checkout: self  # <-- This is a task definition

These groups of work are important to understand because of Azure DevOps limitations on some of them. For instance, the environments we talked about above are powerful, but can only be targeted at stages or jobs. Which is a huge bummer: if we could target environments at tasks, I could create two tasks, like this:

    - task: Bash@3
      displayName: 'Terraform Auto Apply'
      condition: eq(dependencies.Terraform_Plan.outputs['Terraform_Plan.Terraform_Plan.AutoApprovalTest.approvalRequired'], 'false')
      inputs:
        targetType: 'inline'
        script: 'terraform apply $(tf_apply_command_options)'
        workingDirectory: $(System.DefaultWorkingDirectory)/$(tf_directory)
        failOnStderr: true
    - task: Bash@3
      displayName: 'Terraform Apply with Approval'
      condition: eq(dependencies.Terraform_Plan.outputs['Terraform_Plan.Terraform_Plan.AutoApprovalTest.approvalRequired'], 'true')
      environment: TFApprovalEnvironment  # hypothetical: tasks do not accept an environment
      inputs:
        targetType: 'inline'
        script: 'terraform apply $(tf_apply_command_options)'
        workingDirectory: $(System.DefaultWorkingDirectory)/$(tf_directory)
        failOnStderr: true

However, since environments can only target stages or jobs, we’re left to duplicate the entire job definition, which contains all the “install this tool” and “download the code” steps.

If this isn’t the case, please message me how you’ve got this working! I’d love to fix it in Azure DevOps. And I imagine at least some of the other CI/CDs are capable of this task-level targeting, which will make your pipelines cleaner and easier to read and maintain.

CI Triggers

CI triggers are (confusingly) enabled by default on pipelines, so you’ll likely have been affected by them if you’ve built any pipelines. They control if and how pipelines are triggered automatically by repo actions.

If you don’t configure this at all on an Azure DevOps YAML pipeline, CI triggers will cause the pipeline to execute any time any change is made to the repo, which is very likely not what you expect (or want).

A great idea when you’re first building these is to set this block. This will disable any CI triggers. You’ll still be able to run the pipeline manually, but it won’t trigger automatically and potentially break things.

    # Disable CI Triggers
    trigger: none

You can use this to your advantage though. We can add filters so that our pipeline is kicked off automatically on specific git branches, or with specific paths. If you do, it’ll look like this:

    trigger:
      branches:
        include:
        - master
      paths:
        include:
        - path/to/terraform/files

This would cause the pipeline to execute automatically only when code is committed to the master branch (via direct push or, more likely, PR merge) and only when files in the tree path/to/terraform/files/** recursively are updated. This gives us a ton of control over how our pipelines run and when.

Test if Terraform Change is Safe

This whole pattern of selectively automatically deploying terraform requires that we somehow programmatically figure out if a terraform change is safe. This can get quite complicated, but there are some simple options. Terraform itself can set its exit code to different values depending on whether the change set is empty or will modify resources.
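For reference, the exit-code behavior comes from the `-detailed-exitcode` flag on `terraform plan`, which returns 0 for an empty diff, 1 for an error, and 2 when changes are present. Here is a minimal sketch of interpreting it; the function name `describe_plan_exit` and its labels are my own illustration, not part of the pipeline in this post:

```shell
#!/usr/bin/env bash
# Map the exit status of `terraform plan -detailed-exitcode` to a label.
# Documented codes: 0 = empty diff, 1 = error, 2 = changes present.
# describe_plan_exit is a hypothetical helper name used for illustration.
describe_plan_exit() {
  case "$1" in
    0) echo "no-changes" ;;
    1) echo "error" ;;
    2) echo "changes-present" ;;
    *) echo "unknown" ;;
  esac
}

# Example usage in a pipeline step (not executed here):
#   rc=0
#   terraform plan -detailed-exitcode -out plan.out || rc=$?
#   describe_plan_exit "$rc"
```

Note that the exit code is captured with `|| rc=$?` in the usage comment. A non-zero exit would otherwise fail the step, and code 2 is a normal result here rather than an error.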

However, that isn’t specific enough for us — we want to figure out if a change is safe, not just if a change of any kind will be made. I couldn’t find a good way to do this with existing tooling so I wrote some bash (I’m a huge fan of bash utility scripts).

Immediately before this, we run a terraform plan -out plan.out, which seeds this file that we can read. Terraform “show” has a few responses depending on whether a change is detected:

“ 0 to add, 0 to change, 0 to destroy” — Note the leading space, that’s intentional. If we didn’t have the space we’d pick up “10 to add” as a no-op change.

“to change, 0 to destroy” — Is checked next. If any number of changes, but no destroys, we set a variable to trigger the auto-approval stage without any approvals.

Any other result means we’re destroying at least 1 resource, and the environment owner should be required to approve, so we set approvalRequired to true.
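The leading-space detail is easy to check against a canned summary line. This is a small sketch, assuming the summary has the usual "Plan: N to add, N to change, N to destroy." shape; the function names and the sample string are illustrative only:

```shell
#!/usr/bin/env bash
# Strict check: requires the leading space, as in the first pattern above.
is_noop() {
  printf '%s\n' "$1" | grep -q " 0 to add, 0 to change, 0 to destroy"
}

# Naive check: no leading space, so "10 to add" also matches.
is_noop_naive() {
  printf '%s\n' "$1" | grep -q "0 to add, 0 to change, 0 to destroy"
}

# Illustrative summary line for a plan that adds ten resources.
sample="Plan: 10 to add, 0 to change, 0 to destroy."

if is_noop_naive "$sample"; then
  echo "naive pattern: treated as a no-op (wrong)"
fi
if ! is_noop "$sample"; then
  echo "strict pattern: treated as a real change"
fi
```

The naive pattern matches the tail of "10 to add", so a ten-resource plan would be skipped as a no-op. The strict pattern does not match it.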

    # If no changes, no-op and don't continue
    if terraform show plan.out | grep -q " 0 to add, 0 to change, 0 to destroy"; then
      echo "##[section]No changes, terraform apply will not run"
    # Check if resources destroyed. If no, don't require approval
    elif terraform show plan.out | grep -q "to change, 0 to destroy"; then
      echo "##[section]Approval not required"
      echo "##[section]Automatic terraform apply triggered"
      echo "##vso[task.setvariable variable=approvalRequired;isOutput=true]false"
    # Check if resources destroyed. If yes, require approvals
    else
      echo "##[section]Terraform apply requires manual approval"
      echo "##vso[task.setvariable variable=approvalRequired;isOutput=true]true"
    fi

This method is custom, but that means it’s flexible. If there’s a certain number of changes you’re comfortable with, you can allow exactly that. If you want to grep the file for specific resources that you want to permit changes to, go for it. The end result just has to set a variable here so the next stage’s “condition” block can read it and trigger on it.
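As one example of that flexibility, here is a sketch that parses the counts out of the summary line and auto-approves only when nothing is destroyed and the total number of adds and changes stays under a threshold. It is a variation on the grep approach, not the script used in this post. `classify_plan` and `MAX_AUTO_CHANGES` are hypothetical names, and the default of 5 is arbitrary:

```shell
#!/usr/bin/env bash
# Read plan text on stdin and print one of:
#   noop  - nothing to do
#   false - approval not required
#   true  - approval required
# MAX_AUTO_CHANGES is a hypothetical knob, not part of the original pipeline.
classify_plan() {
  local max_changes="${MAX_AUTO_CHANGES:-5}"
  local re='([0-9]+) to add, ([0-9]+) to change, ([0-9]+) to destroy'
  local line add change destroy
  while IFS= read -r line; do
    if [[ $line =~ $re ]]; then
      add="${BASH_REMATCH[1]}"
      change="${BASH_REMATCH[2]}"
      destroy="${BASH_REMATCH[3]}"
      if (( add == 0 && change == 0 && destroy == 0 )); then
        echo "noop"
      elif (( destroy == 0 && add + change <= max_changes )); then
        echo "false"
      else
        echo "true"
      fi
      return 0
    fi
  done
  # No summary line found: fail closed and require approval.
  echo "true"
}

# Example usage in the plan step (not executed here):
#   decision="$(terraform show -no-color plan.out | classify_plan)"
#   if [ "$decision" != "noop" ]; then
#     echo "##vso[task.setvariable variable=approvalRequired;isOutput=true]$decision"
#   fi
```

Failing closed when no summary line is found is a deliberate choice: if the plan output ever changes shape, the pipeline asks for approval instead of deploying silently.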

Stage Conditionals

Azure DevOps allows us to configure Release Pipelines in a GUI, which is a wonderful way to get started. However, when you want to start doing cool and enterprise-level stuff, you’re probably going to want to write your pipelines in YAML. This permits lots more cool stuff, including the conditionals we’ll talk about now.

Conditionals are a bit self-explanatory. They control whether a stage / job / task should run based on “eq” (Equal to) or “ne” (Not Equal to) tests.

They look like this:

    condition: |
      and
      (
        succeeded(),
        ne(variables['Build.Reason'], 'PullRequest')
      )

If the conditionals block is missing from a stage, it will run every time (as long as the preceding stage completed successfully).

For our specific use case, we want to set a variable in stageA (Terraform Plan) and use the output of that variable to trigger one of two terraform apply stages. One of those stages doesn’t have an “environment”, so it doesn’t require approvals. The other does have an environment, so it requires approvals.

We’ll use these two latter stages in this way:

For changes that are safe (additions or changes only), we’ll run the stage without approvals, i.e. automatically, without pausing.

For changes that are destructive (destroy at least one resource), we’ll run the stage with approvals, i.e. pause and wait for environment approval.

This maps very well to business processes generally, where safe changes should be rolled out right away, and unsafe changes should be scheduled, with environment owners aware of them and specifically authorizing them to roll out. Here’s how the conditional looks for stageB, the one that rolls out automatically:

    condition: |
      and
      (
        succeeded(),
        ne(variables['Build.Reason'], 'PullRequest'),
        eq(dependencies.Terraform_Plan.outputs['Terraform_Plan.Terraform_Plan.AutoApprovalTest.approvalRequired'], 'false')
      )

Summary

And that’s it! When we tie all those together, we have automatic deployment of Terraform based on whether a change is classified as safe or not. If you’d like to go build it, here is a full code copy of what we’re doing, and you should be able to use this to roll it out in your own env.

KyMidd/AzureDevOps_TF_AutomatedTestAndDeploy


UPDATE: I had several folks ask if they could add exception logic for specific resource types, and I have added it. More details here:

Let’s Do DevOps: Resource-Level Automated Terraform CI/CD Approvals


Please go forth and build cool DevOps stuff. Let’s do this.

Good luck out there!

kyler