🔥Let's Do DevOps: Azure Dynamic Scopes for Maintenance Configs Across Subscriptions🚀

aka, updating all the VMs in `n` subscriptions from a single pane of glass

This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

Hey all!

When you’re talking about patching your (particularly Windows) virtual machines in Azure, all roads lead to the Azure Update Manager, the Azure-native tooling that runs an agent on your machines, reports on update status, and permits deploying updates from the console in an automated way.

The Azure Update Manager, in many ways, rules. It’s flexible, it’s powerful, it’s easy to use - at least in the GUI. And that’s the biggest problem, for this and many other Azure functionalities - they’re built for GUI management in the web portal. Management via the CLI, or via API-driven tools like Terraform, is an afterthought at best.

And so, let me take you back to a few weeks ago, when I offered to build Terraform to automate the Maintenance Configs and Dynamic Scopes across a half dozen subscriptions for one of our teams, something I’d never done before. I’m pretty sure I said I’d get it done in an hour. How wrong I was 😂.

Before we get too far, let’s define some terms:

Terraform/Tofu (TF) Provider - An API instruction book, basically. Tells the TF core binary how to manage resources for a specific platform, in this case, Azure.

Maintenance Configuration - Controls everything about the patches to install on the OS. When to install them, which ones to include vs exclude, whether to reboot, stuff like that.

Maintenance Dynamic Scope - Controls which servers get added to a particular maintenance configuration. Servers can be assigned individually (serverA, serverB, etc.) or via a dynamic scope, which is a filter against a subscription by tags or other attributes.

Okay, let’s build some cool stuff. Scroll to the very end for a link to the gist with all code.

Terraform Across Subscriptions

Azure has some wonky behaviors for resources across subscriptions - for instance, when a Firewall wants to use a Firewall Policy, you’d think you’d just need the ability to Read the Firewall Policy from Azure so it can find the ID and instructions in the Policy. Nope, it needs the ability to Write to the policy so it can associate the resources together. That’s very different from how AWS works.

Generally in AWS, the portal works the *exact* same way the CLI works. (I love AWS, can you tell?). In this case, the way the resource works from the CLI/Terraform is very different from the portal.

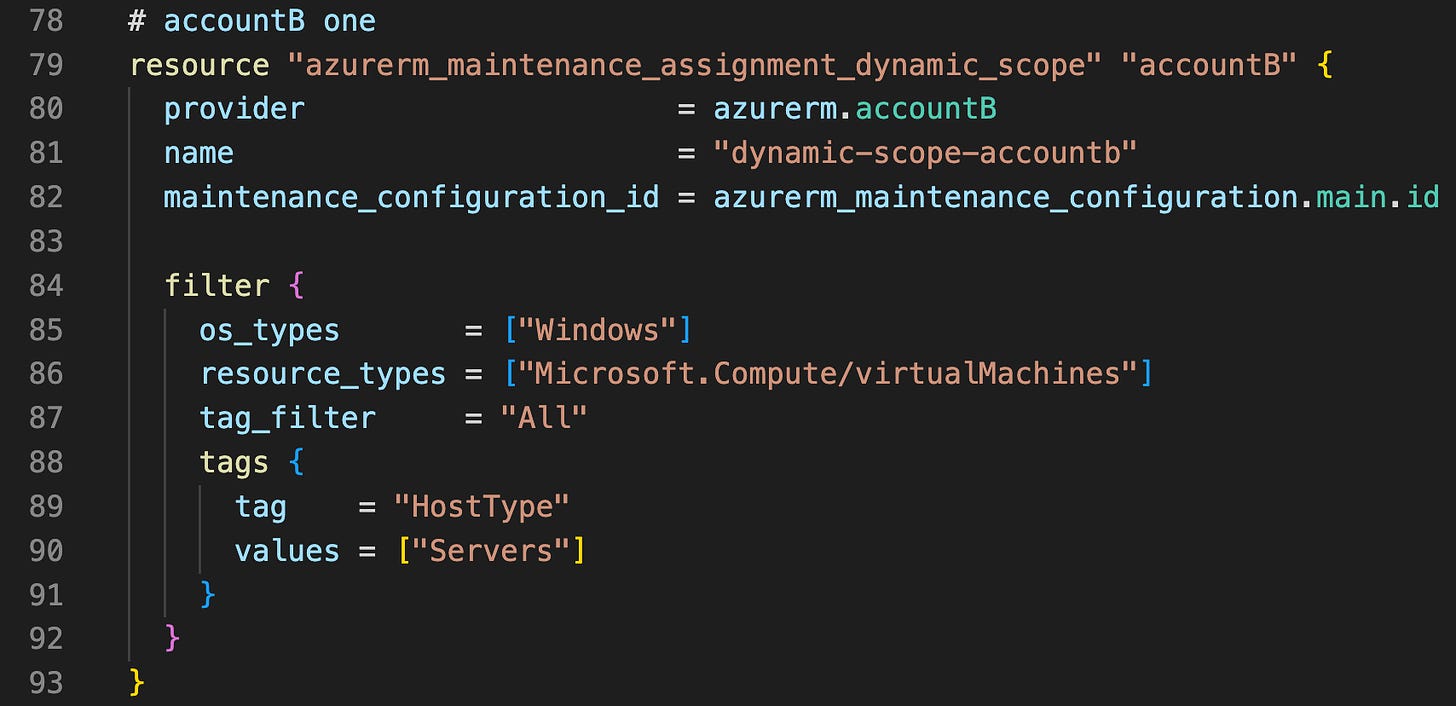

Here’s what a dynamic scope looks like in the Azure Portal - you’d assume that there will be a single Terraform resource and you can pass it a list of subscription IDs and maybe a tag to filter by, right?

You’d be wrong. The GUI is doing a ton of work for us - when you check the box next to a subscription, it’s building a resource in that subscription to find servers based on the Filter. Which is really cool, but means we have to do some extra work on the CLI/Terraform side.

Let’s start with our global provider config - we tell TF to install the AzureRM provider. Simple. Heck yeah.

```hcl
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
    }
  }
}
```

Next we register our Providers (and yes, that plural is right). If you’ve only ever done TF pointed at a single Subscription, this will feel super weird. We have the normal registration on line 2, which is pointed at the Default Subscription for the Managed Identity (MI) or other Service Principal (SP).

Pro tip: Any resources in this terraform workspace that start with “azurerm” will by default use the “azurerm” provider that doesn’t use an alias. The AzureRM Provider with an alias is only used when explicitly stated.

Then on line 7 we register another instance of the AzureRM provider, except this time we point it at a specific subscription. This will still use the MI/SP’s identity, but it will attempt to do things in that other subscription when you tell it to.

```hcl
# Main
provider "azurerm" {
  features {}
}

# accountB
provider "azurerm" {
  features {}
  alias           = "accountB"
  subscription_id = "xxxxx-yyyyy-zzzzzz-00000000"
}
```
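If you’d rather not hardcode the subscription ID, one option is to pass it in as a variable. This is a minimal sketch rather than what’s in the gist: the variable name `account_b_subscription_id` is made up for illustration, and this block would replace the hardcoded accountB provider block, not sit next to it.

```hcl
# Hypothetical variable name, not part of the original gist
variable "account_b_subscription_id" {
  description = "Subscription ID that the accountB provider alias should target"
  type        = string
}

# Same aliased provider as before, but the ID now comes from the variable
provider "azurerm" {
  features {}
  alias           = "accountB"
  subscription_id = var.account_b_subscription_id
}
```

The value can then come from a `.tfvars` file or a `TF_VAR_account_b_subscription_id` environment variable, which keeps subscription IDs out of the code itself.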

Which Patches? When Patches? Why Patches?

Then we build a resource group (boring).

```hcl
resource "azurerm_resource_group" "rg" {
  name     = "rg_name"
  location = "East US"
}
```

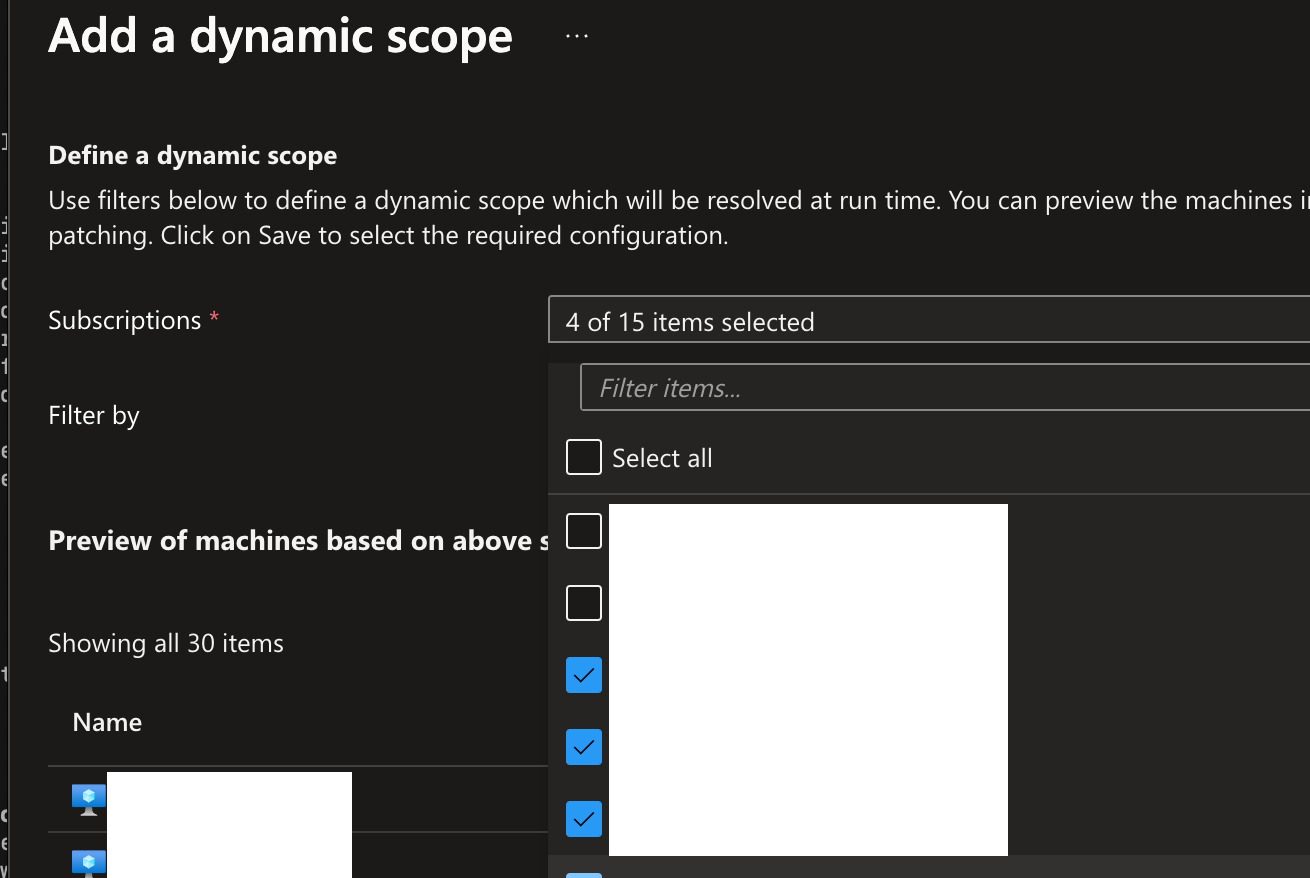

Next up gets really interesting - the Maintenance Config, in its entirety. Normal resource stuff until line 12 - we can specify 0 or more windows, which specify when patches should be installed on our host. On line 13 we specify a start date, and since we want the patches to start rolling out the next time the window rolls around, we specify the current time as the “start date”. Note this isn’t to say patches will start rolling out now (unless the window you specify does indeed fall when you deploy).

Then on lines 14 and 15, we specify the timezone and duration of the window. Any patches not able to be installed within that window will be marked as pending in the console.

Line 16 isn’t specified with much detail in the docs page. I initially tried to build this in cron, and it kept failing with these vague error messages.

I eventually found some examples referenced in the VM PowerShell library. There are lots of ways to specify the recurrence, which is both flexible and frustratingly non-prescriptive about how you should be doing this.
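To give a feel for the format, here is a rough sketch of a few recurrence strings. The values follow the examples listed in the azurerm provider documentation for `recur_every` as best I understand them, so double check them against the current docs before relying on any of them. The `recur_examples` local is just a hypothetical holder for the strings.

```hcl
# Illustrative recur_every values (hypothetical local, for reference only)
locals {
  recur_examples = {
    daily            = "Day"                  # every day
    every_third_day  = "3Days"                # every 3 days
    weekends         = "Week Saturday,Sunday" # every week, on listed weekdays
    every_third_week = "3Weeks"               # every 3 weeks
    monthly_by_date  = "Month day23,day24"    # every month, on listed days
    last_sunday      = "Month Last Sunday"    # last Sunday of every month
    patch_tuesday    = "Month Second Tuesday" # the value used in this post
  }
}
```

The general shape is an optional frequency number, then the unit (Day, Week, Month), then an optional day selector.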

On line 20, we tell it to always reboot hosts to install patches (I don’t want to click into 1k+ machines to hit a reboot button, do you?), and on line 23 we tell it which patches to include. On line 32, we tell TF to ignore the start date so it doesn’t update that field on every run.

```hcl
resource "azurerm_maintenance_configuration" "main" {
  name                     = "main"
  resource_group_name      = azurerm_resource_group.rg.name
  location                 = azurerm_resource_group.rg.location
  scope                    = "InGuestPatch"
  in_guest_user_patch_mode = "User"

  tags = {
    GuiltyParty = "Kyler"
  }

  window {
    start_date_time = "${formatdate("YYYY-MM-DD", timestamp())} 23:00"
    time_zone       = "Eastern Standard Time"
    duration        = "03:55" # 3 hour, 55 min
    recur_every     = "Month Second Tuesday"
  }

  install_patches {
    reboot = "Always"

    windows {
      classifications_to_include = ["Critical", "Security", "UpdateRollup", "ServicePack", "Definition", "Updates"]
      kb_numbers_to_exclude      = []
      kb_numbers_to_include      = []
    }
  }

  # Add ignore for window.start_date_time
  lifecycle {
    ignore_changes = [
      window[0].start_date_time
    ]
  }
}
```
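To see why the `ignore_changes` entry matters, it helps to look at what the start date expression evaluates to. This small sketch swaps `timestamp()` for a fixed, made-up timestamp (`example_now` is a hypothetical local) so the result is predictable:

```hcl
locals {
  # Fixed stand-in for timestamp(), which returns a new value on every run
  example_now = "2024-06-01T15:04:05Z"

  # Same expression shape as the window's start_date_time
  example_start = "${formatdate("YYYY-MM-DD", local.example_now)} 23:00"
}

output "example_start_date_time" {
  value = local.example_start # "2024-06-01 23:00"
}
```

With the real `timestamp()`, the date portion changes every day, so without the lifecycle rule TF would want to update the window on nearly every run.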

Now we have a good selection of when patches will be installed, and what patches will be installed. But it notably doesn’t point at any servers. That’s what we build next.

Select a Server (Or All The Servers)

To pick out servers, we build an azurerm_maintenance_assignment_dynamic_scope resource. It’s a relatively recent addition via this PR on May 6, 2024. It permits building a query based on a whole bunch of information, see the pic.

The really interesting one here is the `tag_filter` attribute. You can set it to match servers that match Any (any single one) of the filters, or All (every single one) of the filters you define.

So you can set several different filter blocks, and then set what type of logic to use for combining them. Pretty powerful stuff.

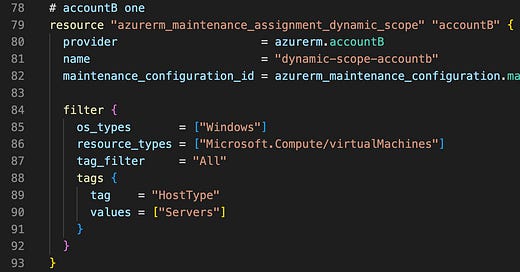

How it looks in terraform is pretty simple. On line 2 we define a name (notably not present in the Azure Portal), and on line 3 we link it to a particular maintenance configuration.

Then on line 5 we define our filters. In this example, in our filter block we specify that we just want Windows servers (line 6), of just type Virtual Machines (line 7), and that we want to match All the tag filters (line 8), of which there’s just one.

And then we define a tag filter on line 9, where we’re looking for a tag named HostType (line 10), with value “Servers” (line 11). Note that on line 11, `values` is a list, not a string. So you can provide a whole list of values that the tag can have and still match that particular filter. We’re not using it here, but it’s super cool stuff you can use in your own implementation.

This dynamic scope implicitly can only find servers in the subscription it’s built in, and since we didn’t specify any provider (linked to a subscription), it’s looking in the default subscription only.

```hcl
resource "azurerm_maintenance_assignment_dynamic_scope" "accountA" {
  name                         = "dynamic-scope-accounta"
  maintenance_configuration_id = azurerm_maintenance_configuration.main.id

  filter {
    os_types       = ["Windows"]
    resource_types = ["Microsoft.Compute/virtualMachines"]
    tag_filter     = "All"
    tags {
      tag    = "HostType"
      values = ["Servers"]
    }
  }
}
```
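As a variation on the filter above, here is a hedged sketch of the Any logic with more than one tag block and more than one value per tag. The resource label, the extra `PatchGroup` tag, and the `Jumpboxes` value are all made up for illustration, so swap in whatever tags your VMs actually carry.

```hcl
# Illustrative variation only: tag names and values below are hypothetical
resource "azurerm_maintenance_assignment_dynamic_scope" "any_match" {
  name                         = "dynamic-scope-any-match"
  maintenance_configuration_id = azurerm_maintenance_configuration.main.id

  filter {
    os_types       = ["Windows"]
    resource_types = ["Microsoft.Compute/virtualMachines"]
    tag_filter     = "Any" # a VM matching at least one tags block is included

    tags {
      tag    = "HostType"
      values = ["Servers", "Jumpboxes"] # either value satisfies this block
    }

    tags {
      tag    = "PatchGroup"
      values = ["Monthly"]
    }
  }
}
```

Flipping `tag_filter` to "All" would instead require a VM to satisfy both tag blocks.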

But we want to find servers that live in different subscriptions, which means we need to build the exact same resource, but this time specify the non-default provider, so that it can find VMs in a different subscription.

Pro Tip: This (annoyingly) has to be explicitly defined, and not iterated over with a for_each because Terraform doesn’t yet allow you to interpolate provider names. If you’re using Tofu (and you should be, it’s amazing), you can interpolate provider names, and you could build this resource using iterative patterns.

To build a resource in a different subscription, you need to tell the resource to use a provider registered to that subscription. On line 2, we set the “provider” meta-argument (it works on every resource, so you won’t find it on the resource’s docs page) to the “azurerm” provider with alias “accountB”, written as the unquoted reference azurerm.accountB.

The rest of the configuration is entirely the same.

```hcl
resource "azurerm_maintenance_assignment_dynamic_scope" "accountB" {
  provider                     = azurerm.accountB
  name                         = "dynamic-scope-accountb"
  maintenance_configuration_id = azurerm_maintenance_configuration.main.id

  filter {
    os_types       = ["Windows"]
    resource_types = ["Microsoft.Compute/virtualMachines"]
    tag_filter     = "All"
    tags {
      tag    = "HostType"
      values = ["Servers"]
    }
  }
}
```

And boom, we’ve got a single Maintenance Config in our default subscription, and it’s applying its policies to VMs across several different subscriptions.
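For Tofu users, the iterative pattern mentioned in the pro tip might look roughly like the sketch below. It assumes an OpenTofu release that supports `for_each` on provider blocks (1.9 or newer, as far as I know), and the `subscriptions` variable, the `by_sub` alias, and the resource label are hypothetical names. Treat it as a starting point and check the OpenTofu docs for the current rules.

```hcl
# Hypothetical map of friendly name => subscription ID
variable "subscriptions" {
  type = map(string)
  default = {
    accountB = "xxxxx-yyyyy-zzzzzz-00000000"
  }
}

# One aliased provider instance per subscription (OpenTofu only)
provider "azurerm" {
  features {}
  alias           = "by_sub"
  for_each        = var.subscriptions
  subscription_id = each.value
}

# One dynamic scope per subscription, each using its matching provider instance
resource "azurerm_maintenance_assignment_dynamic_scope" "per_sub" {
  for_each = var.subscriptions
  provider = azurerm.by_sub[each.key]

  name                         = "dynamic-scope-${lower(each.key)}"
  maintenance_configuration_id = azurerm_maintenance_configuration.main.id

  filter {
    os_types       = ["Windows"]
    resource_types = ["Microsoft.Compute/virtualMachines"]
    tag_filter     = "All"
    tags {
      tag    = "HostType"
      values = ["Servers"]
    }
  }
}
```

Adding a subscription then becomes a one-line change to the map instead of a copy-pasted resource block.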

Summary

In this article we walked through how provider registration works, and how to register providers in different subscriptions. We talked about the behavior of providers with and without aliases. We also built a Maintenance Config to control patching in a single location, and linked it to Dynamic Scopes that are built in different subscriptions, all linking back to the single Maintenance Config.

A complete gist of all the code we worked on is here:

https://gist.github.com/KyMidd/f39cc443af05f613d6f45fecf2e69372

Hope it helps you build some incredible stuff!

Good luck out there.

kyler