🔥An Intro to Bootstrapping AWS to Your Terraform CI/CD

This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

Pairing Terraform with a CI/CD platform like Azure DevOps, Terraform Cloud, or GitHub Actions can be incredibly empowering. Your team can work on code simultaneously and check it into a central repo, and once the code is approved, your CI/CD pushes it out and turns it into resources in the cloud.

When you start rolling this out, you run into an immediate catch-22: Terraform needs an S3 bucket for state storage and a DynamoDB table for state locking before it can run, but you need to run Terraform in order to build those resources.

The best method I’ve found to get around this problem is something I call “pivoting”. The basic order is:

1. Run Terraform from your local machine to build the S3 bucket, DynamoDB table, and any other bootstrap items you need.
2. Tell Terraform to use the S3 bucket and DynamoDB table, and push your local .tfstate to the remote storage.
3. Upload your Terraform code to the CI/CD, where it can access its state file and start building other cool things.

Let’s walk through the steps, and you’ll have an AWS account bootstrapped into your CI/CD before you can say “terraform can do that?”

AWS IAM User — Authorization

Before we can do anything with Terraform, we need to authenticate. The simplest way to do that is to build an IAM user in AWS.

First, jump into the AWS console, type “IAM” into the search bar on the main page, then click on IAM in the dropdown.

Click on “Users” in the left column, then click on “Add user” in the top left.

Name your user. It can be anything, but it’s helpful to pick a name you’ll recognize as a service account used by Terraform for this CI/CD setup. Terraform can only do what the IAM user can do, and we’re going to make this user an administrator of the whole account, so I like to include “Admin” in the name as a reminder not to share its access credentials.

Also, check the “Programmatic access” checkbox to build an Access Key and Secret Access Key that we’ll use to link TF to AWS, then hit “Next: Permissions” in the far bottom right.

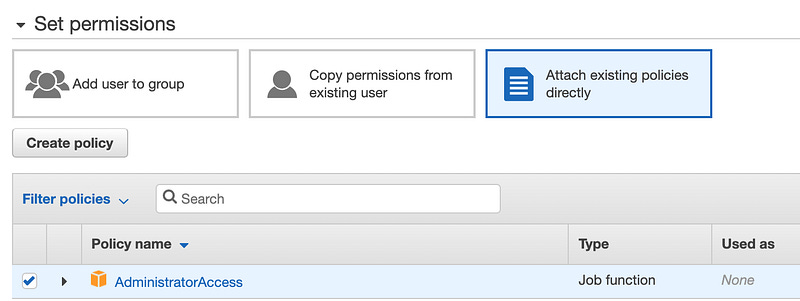

This user now exists, but can’t do anything yet. For a more in-depth look at IAM (and it does go deep), refer to my earlier blog about assuming an IAM role. To grant the user permissions, we can either create a custom policy with specific, limited permissions, or attach existing policies. For the sake of this demo, we’ll attach the existing “AdministratorAccess” policy, but remember this step as another opportunity for extra security in a real enterprise environment.
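As an example of what a tighter custom policy could look like, here is a sketch of an IAM policy scoped to just the Terraform state backend, written in Terraform so it can live next to the bootstrap code. It assumes the bucket name, the “terraform.tfstate” key, and the “aws-locks” table used later in this post; the policy name “TerraformStateBackendAccess” is hypothetical. Note that this only covers reading and writing state and taking the lock. It is not enough for the bootstrap user itself, which also has to create the bucket and table, so it fits better on the identity your CI/CD uses after the bootstrap is done.

```hcl
# Sketch only: least-privilege access to the Terraform state backend.
# Assumption: bucket, key, region, and table names match the examples in this post.
data "aws_caller_identity" "current" {}

data "aws_iam_policy_document" "state_backend" {
  # Let Terraform list the bucket to check whether the state object exists
  statement {
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::your_globally_unique_bucket_name"]
  }

  # Read and write the state file itself
  statement {
    actions   = ["s3:GetObject", "s3:PutObject"]
    resources = ["arn:aws:s3:::your_globally_unique_bucket_name/terraform.tfstate"]
  }

  # Take and release the state lock in DynamoDB
  statement {
    actions = [
      "dynamodb:DescribeTable",
      "dynamodb:GetItem",
      "dynamodb:PutItem",
      "dynamodb:DeleteItem",
    ]
    resources = ["arn:aws:dynamodb:us-east-1:${data.aws_caller_identity.current.account_id}:table/aws-locks"]
  }
}

# Hypothetical policy name; attach it to whichever user or role your CI/CD runs as
resource "aws_iam_policy" "state_backend" {
  name   = "TerraformStateBackendAccess"
  policy = data.aws_iam_policy_document.state_backend.json
}
```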

Click “Next: Tags” in the bottom right, then “Next: Review”. If everything on the Review page looks correct, click “Create user” in the bottom right, and we’re in business.

You’re presented with an Access Key ID and a Secret Access Key (behind the “Show” link). You’ll need both, and AWS won’t display the secret again once you leave this page, so don’t close it yet.

Export both keys, plus a default region, as environment variables in your terminal:

```bash
export AWS_ACCESS_KEY_ID=your_access_key
export AWS_SECRET_ACCESS_KEY=your_secret_access_key
export AWS_DEFAULT_REGION="us-east-1"
```

Now your local terminal can run Terraform with full administrative permissions in your account, which is exactly what we need for bootstrapping.
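The AWS provider reads these environment variables on its own, so the provider block doesn’t need any credentials written into it. If you want to double-check which identity Terraform will act as before building anything, a small sketch like the one below (the “whoami”, “caller_arn”, and “account_id” names are just illustrative) can sit next to a provider block like the one in the next section. After “terraform init”, a “terraform apply” prints the caller’s ARN and account ID; it only reads data, so it creates no AWS resources.

```hcl
# Sketch: show which AWS identity Terraform picked up from the
# AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY environment variables.
data "aws_caller_identity" "whoami" {}

# ARN of the IAM user (or role) Terraform is authenticated as
output "caller_arn" {
  value = data.aws_caller_identity.whoami.arn
}

# The AWS account Terraform will build resources in
output "account_id" {
  value = data.aws_caller_identity.whoami.account_id
}
```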

Terraform Bootstrap Code

Now that our terminal is ready to run Terraform code, we need some code to run. Remember, what we’re hoping to do is build an S3 bucket (for TF state storage) and a DynamoDB table (for TF state locking).

To make things super easy, I wrote a bootstrap Terraform script and stashed it here: https://github.com/KyMidd/Terraform_CI-CD_Bootstrap

First, we’ll set up the Terraform settings block and the AWS provider. Note that the backend is currently commented out. That’s by design: we need to build the backend before we can enable it! But we’ll turn it on soon enough.

```hcl
# Require TF version to be same as or greater than 0.12.13
terraform {
  required_version = ">=0.12.13"
  #backend "s3" {
  #  bucket         = "your_globally_unique_bucket_name"
  #  key            = "terraform.tfstate"
  #  region         = "us-east-1"
  #  dynamodb_table = "aws-locks"
  #  encrypt        = true
  #}
}

# Download any stable version in AWS provider of 2.36.0 or higher in 2.36 train
provider "aws" {
  region  = "us-east-1"
  version = "~> 2.36.0"
}
```

Then we call the bootstrap module. The resources live in a module rather than directly in main.tf by design, so you can add your own modules alongside it in the future if you’d like. The values on the right are strings; feel free to change them. The one you definitely need to change is “your_globally_unique_bucket_name”. That one has to be… you guessed it, globally unique.

```hcl
module "bootstrap" {
  source                      = "./modules/bootstrap"
  name_of_s3_bucket           = "your_globally_unique_bucket_name"
  dynamo_db_table_name        = "aws-locks"
  iam_user_name               = "IamUser"
  ado_iam_role_name           = "IamRole"
  aws_iam_policy_permits_name = "IamPolicyPermits"
  aws_iam_policy_assume_name  = "IamPolicyAssume"
}
```
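Those strings arrive inside the module as input variables. The repo has its own definitions, so treat this as a rough sketch of the receiving side: a “variables.tf” in “./modules/bootstrap” declares one variable per argument in the module block. Terraform rejects an argument the module doesn’t declare, and it also errors if a declared variable without a default is left out, so the two lists have to match.

```hcl
# modules/bootstrap/variables.tf (sketch; the repo's actual file may differ)
# Each variable receives one of the string values passed in the module block.
variable "name_of_s3_bucket" {
  description = "Globally unique name for the Terraform state bucket"
  type        = string
}

variable "dynamo_db_table_name" {
  description = "Name of the DynamoDB table used for state locking"
  type        = string
}

variable "iam_user_name" {
  type = string
}

variable "ado_iam_role_name" {
  type = string
}

variable "aws_iam_policy_permits_name" {
  type = string
}

variable "aws_iam_policy_assume_name" {
  type = string
}
```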

We build an S3 bucket first:

```hcl
resource "aws_s3_bucket" "state_bucket" {
  bucket = var.name_of_s3_bucket

  # Tells AWS to encrypt the S3 bucket at rest by default
  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm = "AES256"
      }
    }
  }

  # Prevents Terraform from destroying or replacing this object - a great safety mechanism
  lifecycle {
    prevent_destroy = true
  }

  # Tells AWS to keep a version history of the state file
  versioning {
    enabled = true
  }

  tags = {
    Terraform = "true"
  }
}
```
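The inline “versioning” and “server_side_encryption_configuration” blocks work with the 2.36 provider pinned above, but version 4.0 of the AWS provider deprecated them in favor of standalone resources. If you move to a 4.x or later provider, a sketch of the equivalent would replace (not sit alongside) the bucket resource above; reusing “state_bucket” as the name of every resource is just for readability.

```hcl
# Sketch for AWS provider 4.x and later, where versioning and encryption
# are configured with their own resources instead of inline blocks.
resource "aws_s3_bucket" "state_bucket" {
  bucket = var.name_of_s3_bucket

  # Prevents Terraform from destroying or replacing this bucket
  lifecycle {
    prevent_destroy = true
  }

  tags = {
    Terraform = "true"
  }
}

# Keep a version history of the state file
resource "aws_s3_bucket_versioning" "state_bucket" {
  bucket = aws_s3_bucket.state_bucket.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt objects in the bucket at rest by default
resource "aws_s3_bucket_server_side_encryption_configuration" "state_bucket" {
  bucket = aws_s3_bucket.state_bucket.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}
```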

Then we build the DynamoDB table:

```hcl
resource "aws_dynamodb_table" "tf_lock_state" {
  name = var.dynamo_db_table_name

  # Pay per request is cheaper for low-i/o applications, like our TF lock state
  billing_mode = "PAY_PER_REQUEST"

  # Hash key is required, and must be an attribute
  hash_key = "LockID"

  # Attribute LockID is required for TF to use this table for lock state
  attribute {
    name = "LockID"
    type = "S"
  }

  tags = {
    Name    = var.dynamo_db_table_name
    BuiltBy = "Terraform"
  }
}
```

Copy that code (cloning the repo is the easiest method), then change into that directory in your terminal. Run “terraform init” to initialize the working directory and download the AWS provider.

Then run “terraform apply”. The plan should show Terraform creating only the new bootstrap resources, such as the S3 bucket and the DynamoDB table, and changing nothing else. If it all looks good, type “yes” at the prompt and Terraform will build our resources.
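Before editing the backend, it helps to have Terraform print the exact bucket and table names it just built. If the module doesn’t already expose them, here is a minimal sketch; the output names are hypothetical, while the resource names come from the module code above. Once you apply again, “terraform output” shows both values.

```hcl
# modules/bootstrap/outputs.tf (hypothetical file): expose the names
# you'll need when filling in the backend block.
output "state_bucket_name" {
  value = aws_s3_bucket.state_bucket.id # for S3 buckets, id is the bucket name
}

output "lock_table_name" {
  value = aws_dynamodb_table.tf_lock_state.name
}

# Root main.tf: re-export the module outputs so "terraform output" can print them
output "state_bucket_name" {
  value = module.bootstrap.state_bucket_name
}

output "lock_table_name" {
  value = module.bootstrap.lock_table_name
}
```

The module outputs and the root outputs live in different files, so reusing the same names in both is fine.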

Now that our bootstrap is ready, let’s push our local .tfstate file to it. The first step is to uncomment the remote state backend in our main.tf file. It’ll look like this, but with your bucket name:

```hcl
terraform {
  required_version = ">=0.12.13"
  backend "s3" {
    bucket         = "kyler-github-actions-demo-terraform-tfstate"
    key            = "terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "aws-locks"
    encrypt        = true
  }
}
```
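One variation worth knowing about is Terraform’s partial backend configuration. The “backend "s3"” block stays empty in main.tf, and the settings live in a separate file that you pass at init time with “terraform init -backend-config=backend.hcl”. That can make it easier for a CI/CD pipeline to supply its own values. Here is a sketch with two files shown in one block; “backend.hcl” is an arbitrary file name and the bucket name is the placeholder from earlier.

```hcl
# main.tf: the backend type is declared, but its settings are left out
terraform {
  required_version = ">=0.12.13"
  backend "s3" {}
}

# backend.hcl: plain key = value pairs, passed in with
#   terraform init -backend-config=backend.hcl
bucket         = "your_globally_unique_bucket_name"
key            = "terraform.tfstate"
region         = "us-east-1"
dynamodb_table = "aws-locks"
encrypt        = true
```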

Then run “terraform init” again. This time the output looks a little different, because Terraform notices that a remote backend has been added. Enter “yes” to copy your local .tfstate file to the remote backend.

Now for the REALLY cool stuff

Great, we now have everything we need to build our CI/CD and integrate it with AWS.

For specifics on how to build them out, check out some of these blogs:

Good luck out there!

kyler