🔥Let’s do DevOps: Build an Azure DevOps Terraform Pipeline Part 2/2

This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

Hey all!

In part 1 of this series, we:

Learned several DevOps and Azure Cloud terms

Signed up for an Azure Cloud and Azure DevOps (ADO) account

Created an Azure Cloud Service Connection to connect Azure Cloud and ADO

Initialized a new git repo in ADO

Installed git on our machine (if it didn’t have it already)

Created an SSH key and associated it with our user account

Cloned our (mostly empty) git repo to our computers

Phew! That was a heck of a post. In this post, we’ll get to do all the cool stuff our prep work from last time enabled. We’re going to create a build and release terraform pipeline, check in code, permit staged deployments to validate what steps are going to be taken and approve them, then push real resources into our Azure Cloud from our terraform scripts. Let’s get started.

New Scary Terms Part Deux

Before we walk the walk, let’s learn to talk the talk.

Azure DevOps (ADO): A Continuous Integration / Continuous Deployment tool, it will be the tool which executes our automation and actually “runs” the Terraform code.

Git terminology

Master branch: The shared source of truth branch where finished code is committed. Usually code is iterated on in branches, and only “merged” into the master when it is ready.

Branch: When you want to make changes to a repo, you can push changes directly to the master. But you probably need some time to iterate on your code, and if many people are doing this simultaneously, the result is… chaos. To solve this problem, git has the concept of “branches” which is your own private workspace. You make any changes you want, push the code to the server as much as you like, and you won’t affect others until it’s time to merge the code.

Commit: Each time you make some changes to your code in a branch and want to save the code back to the server, you create a “commit”. Generally descriptive notes are attached to commits so other team members know what you changed in each commit.

Pull Request: When a branch is ready to be merged into the master branch, we create a “pull request.” This is a collection of commits that generally notifies the rest of your team that your changes are ready to be evaluated, commented on, and ultimately approved. Once approved, the code is ready to be merged.

Merge: When a pull request is ready, the code is “merged” into the master. Others will “pull” the code to sync their local repo to the master, and work from there. This keeps all code in sync.

ADO Build Pipeline: ADO has several different automation pipelines. A build pipeline fetches code from wherever it is stored and stages it where it can be consumed by “deployment” pipelines. This sounds (and is) boring when the code is stored locally in ADO, but build pipelines are capable of fetching code from all sorts of unlikely places, including GitHub, private git repos, public repos, etc.

Artifacts: When ADO runs a build pipeline to collect code, it puts it in a staging area and calls it an “artifact.”

ADO Release Pipeline: These automation pipelines run the code that is staged as artifacts and can execute it in containers, and do just about any other automated action you can think of. These actions are arranged as pre-built tiles that can be dragged into sequence for an easy and graphical automation build experience.

Finally, Let’s See Some Terraform

Terraform is a declarative language built on HashiCorp Configuration Language (HCL), and it is intended to describe the end state of your infrastructure. You say, “I want a server that looks like this” and Terraform’s job is to work with a cloud API to make it happen. Terraform supports many different integrations, which the Terraform docs call “providers”. These include ones you’d expect, like AWS, Azure, and Google Cloud Platform, but also others you might not expect, like MySQL, F5, and VMware.

Here’s a sample script provided by HashiCorp to get Terraform started:

    provider "azurerm" {
    }

    terraform {
      backend "azurerm" {}
    }

    resource "azurerm_resource_group" "rg" {
      name     = "testResourceGroup"
      location = "westus"
    }

Find the location on your computer where you synced the git repo to. Create a folder called “terraform” and save the above script into it as main.tf.

It’s also a good idea to create a “.gitignore” (including the leading period) file in your repo. The recommended one is stored here, and looks like this:

    # Local .terraform directories
    **/.terraform/*

    # .tfstate files
    *.tfstate
    *.tfstate.*

    # Crash log files
    crash.log

    # Ignore any .tfvars files that are generated automatically for each Terraform run. Most
    # .tfvars files are managed as part of configuration and so should be included in
    # version control.
    #
    # example.tfvars

    # Ignore override files as they are usually used to override resources locally and so
    # are not checked in
    override.tf
    override.tf.json
    *_override.tf
    *_override.tf.json

    # Include override files you do wish to add to version control using negated pattern
    #
    # !example_override.tf

    # Include tfplan files to ignore the plan output of command: terraform plan -out=tfplan
    # example: *tfplan*

Time to Git Moving
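If you’d like to confirm the ignore rules behave the way we expect before staging anything, here’s a minimal sketch. It assumes git is installed, builds a throwaway repo in a temp folder (so your real repo is untouched), and uses a trimmed-down copy of the rules. The file names are just examples.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Throwaway repo so nothing in the real project is touched.
sandbox="$(mktemp -d)"
git init --quiet "$sandbox"
cd "$sandbox"

# A trimmed-down copy of the recommended ignore rules.
cat > .gitignore <<'EOF'
**/.terraform/*
*.tfstate
*.tfstate.*
crash.log
EOF

# Example files: one we want tracked, one we want ignored.
mkdir -p terraform
touch terraform/main.tf terraform/terraform.tfstate

# check-ignore exits 0 when a path is ignored and 1 when it is not.
if git check-ignore --quiet terraform/terraform.tfstate; then
  state_ignored="yes"
else
  state_ignored="no"
fi

if git check-ignore --quiet terraform/main.tf; then
  main_ignored="yes"
else
  main_ignored="no"
fi

echo "terraform.tfstate ignored: $state_ignored"
echo "main.tf ignored: $main_ignored"
```

Git should report the state file as ignored and main.tf as not ignored, which is exactly what we want checked in (and kept out).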

Once saved, go to the location of your repo in your command line and type “git status”. You’ll see a few interesting items:

1. We’re on branch master

2. Git recognizes there’s a new “untracked” file we can add

So let’s add it. Type “git add .” (git add period) and then “git status” again, and let’s see if it’s changed. Yeah, it has! It now shows the folder and file in green, and it’s under the “changes to be committed” section. That’s great.

However, we’re still on the “master” branch. You can technically commit and push this change from here, but best practice is to spin up a branch and push it from there. To do that, type “git checkout -b NewBranchName” and hit enter. Then hit “git status” again and we’ll check how things look.

Great, the file is added, and we now see we are on branch “NewBranchName”.

Okay, we have changes staged, and we’re on a branch. Let’s “commit” the staged changes to this branch so they’re all packaged up and ready to be pushed to our server. To do that, type git commit -m “Initial main.tf commit”.

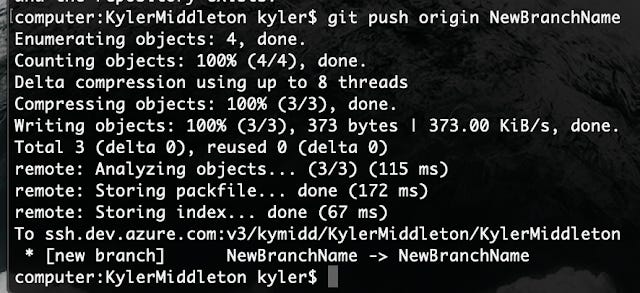

Up until this point, all changes have been only on our machine, but we’re ready to do some cloud DevOps, so let’s push these files to our git server, Azure DevOps. To do that, type “git push origin NewBranchName”. The “NewBranchName” is the name of our local branch. You should see results like the below.
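To recap the whole local flow in one place, here’s a sketch you can run safely without touching your real repo. It’s an illustration under a few assumptions: git is installed, a local bare repo stands in for the Azure DevOps remote, and the name and email are placeholders.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Throwaway sandbox; a local bare repo plays the role of the ADO remote (assumption).
sandbox="$(mktemp -d)"
git init --quiet --bare "$sandbox/origin.git"
git init --quiet "$sandbox/repo"
cd "$sandbox/repo"
git config user.email "you@example.com"   # placeholder identity
git config user.name "Example User"
git remote add origin "$sandbox/origin.git"

# Stand-in for the main.tf we saved earlier.
mkdir terraform
printf 'provider "azurerm" {\n}\n' > terraform/main.tf

git status --short                         # lists terraform/ as untracked
git add .                                  # stage everything
git checkout --quiet -b NewBranchName      # move the staged work onto a branch
git commit --quiet -m "Initial main.tf commit"
git push --quiet origin NewBranchName      # publish the branch to the remote

# Ask the remote which branches it now knows about.
pushed_branches="$(git ls-remote --heads origin)"
echo "$pushed_branches"
```

The last command asks the remote for its branch list, which is a handy way to double-check that the push landed.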

Now we’re done on our computer! Let’s switch to Azure DevOps to check the file.

Azure DevOps Pull Request and Merge

Head to ADO — https://dev.azure.com/ and get into your project. Then click on Repos → Branches. You should see the master branch, as well as your new shiny branch! Others are now able to see your code, but you haven’t opened a PR yet to get them to approve your new code so it can be used. Let’s do that.

Click into the new branch we just built, and you can see Azure DevOps is prompting us for our next step, so let’s take it. Click on “Create a pull request” at the top.

Here you can fill out all sorts of information about your proposed change. DevOps teams (and software engineering teams) do all sorts of cool stuff with git, but we’ll keep it simple.

At the top, ADO wants to know where we want to propose our code get merged into. We want to put it into the master. The title and description are free-form. For this exercise, you know exactly what you’re doing, but imagine if there’s 20 people (or way, way more) working concurrently — descriptions and titles are helpful stuff.

There aren’t any reviewers to approve our change, so we can leave that blank. Work items are automations in ADO that we won’t use (yet!). Click “Create”

Now the PR is opened, woot! Any reviewers added would be notified to review your code. In this case, we’re confident in our change, so let’s click “Complete”. If this were someone else’s change, or if a change required multiple approvers, we’d only be able to click “Approve”. But our policies are pretty lax in our lab environment, so we can skip a step and jump right to “Complete”. Click it! Your code is now merged into the master branch.

Click on Repos → Files and you’ll be able to see your code in the repo.
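If you’re curious what “Complete” roughly amounts to in plain git terms, here’s a local sketch. It assumes the pull request is completed with a merge commit (ADO offers other merge types too), and it runs entirely in a throwaway repo with placeholder names.

```shell
#!/usr/bin/env bash
set -euo pipefail

sandbox="$(mktemp -d)"
git init --quiet "$sandbox"
cd "$sandbox"
git config user.email "you@example.com"   # placeholder identity
git config user.name "Example User"

# Make sure the first branch is called master, then give it a first commit.
git symbolic-ref HEAD refs/heads/master
echo "demo repo" > README.md
git add README.md
git commit --quiet -m "Initial commit"

# The feature branch carries the Terraform file.
git checkout --quiet -b NewBranchName
mkdir terraform
echo '# placeholder Terraform config' > terraform/main.tf
git add terraform
git commit --quiet -m "Initial main.tf commit"

# Roughly what completing the PR does: merge the branch into master with a merge commit.
git checkout --quiet master
git merge --quiet --no-ff -m "Merged PR: Initial main.tf commit" NewBranchName

# List every file that now lives on master.
merged_files="$(git ls-tree -r --name-only master)"
echo "$merged_files"
```

After the merge, the file list for master includes terraform/main.tf, which matches what Repos → Files shows in ADO.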

Create an Azure DevOps Build Pipeline

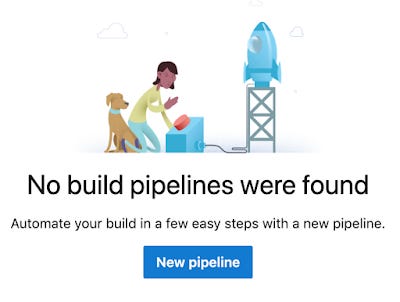

All this cool new code can’t be ingested by a release pipeline until it’s built into an “artifact”, and placed in a staging area. To do this, we need to create a “build” pipeline. Click on Pipelines → Builds and then click on “New Pipeline”.

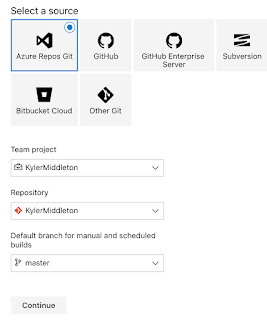

$Msft is pushing for these build pipelines to be built via code, which isn’t terribly intuitive. What’s more intuitive are draggable tiles to build actions. To do that, click on “Use the classic editor” at the bottom of the list.

Everything here looks fine — we want to pull code from the local Azure Repo git, we’re in our project, in the default repo (same name as the project), and we want to grab code from the master branch. Click on “Continue”.

We’re going to create our own build pipeline, so click “Empty job” and we’ll be dropped into a drag-and-drop environment to add actions to our build pipeline.

The pipeline we have pulled up is empty. It shows an “Agent job 1” which means a Linux container will spin up and do… nothing. It’s up to us to add some actions to our Linux builder. We’re only going to add two actions: a “Copy files” action and a “Publish build artifacts” action.

Click the plus sign on “Agent job 1” and find each of these actions in the right column. Make sure the names match, or your configuration for each will be different than what we’ll walk through.

Click on the “Copy files to:” task, and you’ll see some settings appear on the right side. Set the source folder to “terraform”. Contents can stay as the two asterisks, which copies all files. The target folder should have this string: “$(build.artifactstagingdirectory)/terraform”. That’ll recursively copy the terraform folder from the master branch into the staging area, where our release pipeline will pick it up.

Also expand the Advanced options at the bottom and check both “Clean Target Folder” and “Overwrite”. This’ll keep the artifact staging directory from getting jumbled with all the artifacts we’ll push in there in the future.
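If it helps to picture what this step does, here’s a rough local equivalent in shell. It’s only a sketch: temp folders stand in for the repo checkout and the artifact staging directory, and the file names are made up for the demo.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Local stand-ins (assumptions): a fake repo checkout and a fake artifact staging directory.
workdir="$(mktemp -d)"
source_dir="$workdir/repo/terraform"
staging_dir="$workdir/a"               # plays the role of the artifact staging directory
target_dir="$staging_dir/terraform"

mkdir -p "$source_dir" "$target_dir"
echo '# placeholder Terraform config' > "$source_dir/main.tf"
echo 'left over from an earlier build' > "$target_dir/stale.txt"

# "Clean Target Folder": empty the target before copying.
rm -rf "$target_dir"
mkdir -p "$target_dir"

# Contents "**": copy everything under the source folder, recursively.
cp -R "$source_dir/." "$target_dir/"

# Show what ended up staged.
staged_files="$(cd "$target_dir" && find . -type f | sort)"
echo "$staged_files"
```

Only main.tf ends up in the target folder. The stale file is gone, which is the whole point of cleaning the target first.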

The Publish Artifacts step can stay as it is. At the top, hit “save and queue”, and then “save and queue” again on the pop-up that appears. The build will start running in the background.

Click on “Builds” under pipelines in the left column to jump back to our list of builds. Under the History tab, you’ll see the build. It only takes a few seconds, so it’s probably completed. Hopefully you see the green check box as shown in this snapshot below.

Click on the build if you’d like to see the steps that you configured being run. It’ll look something like this:

Release Pipeline — Let’s run some Terraform!

Now that code is staged as artifacts, it can be consumed by our release pipelines. Let’s get a new pipeline started and give it some terraform commands. In the left column, click on Pipelines → Releases. Then click on the blue button that says “New Pipeline”.

ADO will offer to help us build it, but we’re going to build it ourselves. At the very top, click on “Empty job” to start with an entirely empty release pipeline.

Release pipelines have two phases. The first phase is gathering — it needs to know which files to operate on. We just created a build pipeline which staged some artifacts, so let’s select it. Click on “Add an artifact”.

Under “Source (build pipeline)”, select our build pipeline in the dropdown. Mine was named after my project, followed by “-CI”. Then click on “Add”.

The second phase actually spins up containers, or runs code, or does all sorts of other cool stuff. These jobs are called “tasks” and they exist within stages. For now, let’s click on “1 job, 0 task” — the blue underlined words there.

Let’s name our pipeline — simply click in the name area at the top and type a new name. I called mine “Terraform”. Then save your pipeline by clicking the save button (floppy disk icon) in the top right.

Just as with the build pipelines, click the plus sign on the “Agent job”. Search for terraform in the jobs at the right and find “Terraform Build and Release Tasks”, an entry by Charles Zipp. Click “Get it Free”.

It’ll pop you into a new window in the Azure Marketplace to accept this tool. Click “Get it free”, then follow the workflow to sign up. It’s free, and no information or money changes hands. At the end, it’ll pop you back into Azure DevOps.

Go find your project again — click “edit” in the top right to jump back into our build mode — then click the plus sign on our agent to add some jobs. This time when you type “Terraform”, you’ll have a few new options!

Add a “terraform installer” step first, then three “terraform cli” steps — 1 more step than I included in the picture below.

Click through the terraform steps. The first step, Terraform Installer, shows “Use Terraform” followed by a version. It defaults to 0.11.11, which is an older version of Terraform. It’s a good idea to update it to the most recent release. You can find that on HashiCorp’s main terraform page: https://www.terraform.io/

Looks like the most recent version is 0.12.3.

Update the version string on the terraform installer to 0.12.3 and then click on the first terraform validate CLI step, called “terraform validate”. Terraform wouldn’t actually let us do a validate yet — the first step in any terraform deployment is to do an “init”. So let’s change the command to that.

The defaults work for the first few sections. The important one to change is the “backend type”. The default, “local”, stores the state on the container, which is destroyed at the end of the step, so it’s not a great place to keep our .tfstate. Change it to “azurerm” and we’ll get a whole new section to configure. With that setting, the .tfstate file is stored in an Azure Cloud storage blob, where it can be referenced later.

For the “Configuration Directory”, click on the three dots and expand the folders — what you’re viewing is the staged artifacts. You should see the “drop” folder where artifacts are built and stored.

There’s lots to configure in the AzureRM Backend Configuration, but we’ll work through it together. The first step is the Backend Azure Subscription, which is the Service Connection we created in part 1. If you don’t see anything here, try clicking the refresh circle to the right of the drop-down. Select your Azure Cloud subscription.

Check the box to build a backend if it doesn’t exist — it doesn’t, so we’ll need ADO to build this storage blob for us.

Use anything for the resource name — I used “azurerm_remote_storage”. Set the other values as shown below except for the “Storage Account Name”. That must be globally unique. I’d recommend throwing in some numbers or using your own name — remember that string must be all lower-case, no hyphens or underscores, 3–24 characters.
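Those naming rules are easy to trip over, so here’s a small sketch that checks a candidate name locally before you paste it in. The function name and the sample names are made up for illustration, and it only checks the format. Global uniqueness can only be confirmed by Azure itself.

```shell
#!/usr/bin/env bash

# Returns 0 when the candidate fits the rule described above:
# 3-24 characters, lowercase letters and digits only.
is_valid_storage_account_name() {
  local LC_ALL=C            # keep [a-z] strictly ASCII lowercase
  local name="$1"
  [[ "$name" =~ ^[a-z0-9]{3,24}$ ]]
}

# Hypothetical sample names: one that fits the rule, two that break it.
for candidate in "kylertfstate001" "My-Storage" "ab"; do
  if is_valid_storage_account_name "$candidate"; then
    echo "$candidate: ok"
  else
    echo "$candidate: rejected"
  fi
done
```

The first sample passes, while the one with capitals and a hyphen and the two-character one are rejected.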

Now click to the next Terraform CLI step — it still shows “Terraform Validate”, and that’s where we’re going to leave it. The only option that needs to change here is the Configuration Directory — use the same value you used in the “terraform init” step.

Click on the third step and let’s update it to “Terraform plan”. It’s a good idea to have this step in your pipelines before any “terraform apply” so you can make sure everything looks good before continuing.

Make sure to set the same configuration directory as the last two steps, and to set your Azure cloud subscription again. Once complete, hit “save” at the top.
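For reference, the three steps we just configured map roughly onto the following Terraform commands. This is a sketch, not what the marketplace task literally runs. The storage account, container, and key values are placeholders I picked for illustration, and by default the script only prints the commands. Running them for real would also need Terraform installed and Azure credentials available.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical values for illustration; substitute the ones from your own backend settings.
resource_group="azurerm_remote_storage"
storage_account="examplestorage123"
container="tfstate"
state_key="terraform.tfstate"

# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

terraform_steps() {
  # init: point Terraform at the Azure storage backend for the .tfstate file.
  run terraform init -input=false \
    -backend-config="resource_group_name=$resource_group" \
    -backend-config="storage_account_name=$storage_account" \
    -backend-config="container_name=$container" \
    -backend-config="key=$state_key"
  # validate: check the configuration for errors.
  run terraform validate
  # plan: show what would change, and save the plan to a file.
  run terraform plan -input=false -out=tfplan
}

terraform_steps
```

The order matters: init has to come first, because validate and plan both depend on the providers and backend that init sets up.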

Create Release!

We’re ready to create our first release. This will run our release pipeline and test our steps. Expect a few things to be broken at first — that’s normal for anything new! You can work through the descriptive error messages and fix it all.

Click “Create Release” in the top right — the rocket ship, then click “Create” and our release will start running in the background.

At the top, click on “Release-1” to jump to the release page, where we can view the results of our testing and see the response to our commands.

Hover over “Stage 1” and click on “Logs” to view all the steps and watch them go through.

Once it’s complete, you’ll see status. Here’s what it looks like if everything went well. If you see any errors, click on the step to see the logs.

Let’s click on the “terraform plan” step so we can see terraform’s output:

That looks about like what we expected, so let’s roll it out. Let’s go back into our release pipeline and click edit, and add one more “terraform CLI” step to do a “terraform apply”.

Make sure to set the same configuration directory as the other steps, and to set your subscription again.
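If you ever script this outside of ADO, one way to decide whether an apply is worth running is the exit code from “terraform plan -detailed-exitcode”. The helper below is a hypothetical sketch (the function name is mine, and it is not something the pipeline task exposes). It just translates the documented exit codes into plain language.

```shell
#!/usr/bin/env bash

# Translate the exit code of `terraform plan -detailed-exitcode` into a decision.
# 0 = succeeded with no changes, 1 = error, 2 = succeeded with changes present.
describe_plan_exit_code() {
  case "$1" in
    0) echo "no changes; nothing to apply" ;;
    2) echo "changes pending; review, then apply" ;;
    *) echo "plan failed; do not apply" ;;
  esac
}

# Example usage inside an initialized Terraform directory (not executed here):
#   terraform plan -input=false -detailed-exitcode -out=tfplan; code=$?
#   describe_plan_exit_code "$code"
#   [ "$code" -eq 2 ] && terraform apply -input=false tfplan

describe_plan_exit_code 2
```

Applying the saved plan file means Terraform applies exactly what the plan showed, rather than re-planning at apply time.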

Hit save, then re-run your release (remember the rocket in the top right?)

This time our release continues right on past the “terraform plan” step right to a “terraform apply” step, and builds us some resources.

Here’s the output of the build command.

BOOM

What’s Next?

Now that all our machinery is built and confirmed working, we can start iterating on our terraform codebase. We can add some modules, define subnets, VPNs, servers, storage, security policies, and on and on.

Each time you commit code, merge it into master via a PR (or commit directly to master), run a build, then run your release, and your resources will be built via Terraform in a cloud environment.

There’s lots more cool stuff to do — we can build in some safety checks where a “terraform plan” is executed, then waits for permission to continue to a “terraform apply”, we can do automatic terraform builds and validations on PR opening, we can give you 3 magic wishes… Okay, maybe not that last one, but lots of cool stuff is coming. Check out future blog posts for more cool stuff.

EDIT: And it’s been posted, check it out here!

And if you’d like to check out how some other CI/CDs work, check out other blogs I’ve written here:

Good luck out there!

kyler