🔥Building a Slack Bot with AI Capabilities - From Scratch! Part 2: AWS Bedrock and Python🔥

aka, "oh hey there world-eating AI, can you do a small task for me, as a favor?"

This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

These articles are supported by readers, so please consider subscribing to support me writing more of them <3 :)

This article is part of a series of articles, because 1 article would be absolutely massive.

Part 1: Covers how to build a slack bot in websocket mode and connect to it with python

Part 2 (this article): How to deploy an AWS Bedrock AI resource and connect to it to send a request locally from your terminal with python3

Part 4: How to convert your local script to an event-driven serverless, cloud-based app in AWS Lambda

Part 7: Streaming token responses from AWS Bedrock to your AI Slack bot using converse_stream()

Part 8: ReRanking knowledge base responses to improve AI model response efficacy

Part 9: Adding a Lambda Receiver tier to reduce cost and improve Slack response time

Hey all!

In the last article, we went over how to build a slack app that can listen for tags in shared rooms, or for DMs from your users. We connected it with websocket mode, which is great for testing, but less so for enterprise consumption.

However, we didn’t see it really *do* anything - we got a json packaged “ok” that our slack app is connected, but we’re not posting anything back to slack. That’ll change in this article.

We’re going to get our AI ready inside AWS Bedrock, where we’ll enable Anthropic’s Claude v3.5 Sonnet. Then we’ll update our python script to connect to it and serve queries.

THEN we’ll stitch the two together so you can locally run your python script and it’ll receive queries from slack, then relay those requests to Bedrock, then get responses and post them back to Slack. This will create a fully functional Bedrock AI chat bot.

That’s not quite going to get us to enterprise quality - running any bridge script forever on a single machine is going to break a lot. Like, your machine will hibernate or reboot sometimes, right? So in a later article we’ll talk about how we can embed this script in an AWS Lambda that’ll receive webhooks from the slack app and do all the stitching work of connecting it to Bedrock. That’ll run forever in the cloud and never require us to touch it again: lazy (over?-)engineering at its finest!

Without further ado, let’s dive in!

(Lack of?) AWS Bedrock Resource

In AzureAI, you select an AI model, and you do a Deployment of that model onto a particular resource. That resource runs the model and has ingress addresses specific to the resource, and the Deployment of the model can match it to Content Filters and other linked resources.

So I was surprised to find that AWS has nothing of the sort. Almost every AI model supports what they’re calling “serverless” mode, which means that it’s available on-demand, without doing any type of Deployment or anything.

So the resources that we’ll deploy to actually run the thing are… none. Well, we have to get it approved in the console, which is something. And we have to create a Guardrail, which we’ll do with terraform.

Let’s go build them.

The very first thing I had to do was head over to the Oregon (us-west-2) region. I tested Claude v3.5 Sonnet out in us-east-1, and I was sure my code was bad, because even though my examples appeared to match AWS’s example code, it wouldn’t work. Nope, wrong region.

Even our AWS TAM says we should use us-west-2: it’s faster and has more models available.

Find the Amazon Bedrock service. There’s a lot going on with AI in AWS, and that’ll be true for many years (maybe forever…), so there are lots of confusing signposts pointing you over to SageMaker or “AI Operations”. Trust me when I say, this is where we want to be.
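Since the region turned out to matter so much, here’s a minimal sketch for checking from your terminal which Anthropic models Bedrock offers in a given region. It assumes boto3’s `bedrock` control-plane client (not `bedrock-runtime`) and its `list_foundation_models` call; `anthropic_model_ids` is a hypothetical helper name. Note that this only shows what a region offers, not whether your account has been granted access to a model.

```python
def anthropic_model_ids(bedrock_control_client):
    """Return the IDs of the Anthropic models Bedrock lists in the client's region.

    `bedrock_control_client` is assumed to be boto3.client("bedrock", ...),
    the control-plane client, not the "bedrock-runtime" client used for inference.
    """
    response = bedrock_control_client.list_foundation_models(byProvider="Anthropic")
    return [summary["modelId"] for summary in response.get("modelSummaries", [])]


# Usage (needs AWS credentials exported in your terminal):
#   import boto3
#   for region in ("us-east-1", "us-west-2"):
#       client = boto3.client("bedrock", region_name=region)
#       print(region, anthropic_model_ids(client))
```

Running it against both regions is a quick way to spot a “wrong region” problem before you start doubting your code.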

Allllll the way down in the bottom right, you’ll find the “Model access” button. Before we can use any of the AI models, we have to accept their EULAs and be granted access (which is an automated process that takes ~1 minute).

Scroll down a bit to find the Anthropic section. We’re going to use the Claude 3.5 Sonnet v2 model in this guide, but there are TONS of models from all different providers. I wish there was more information about how fast they are, or what they’re best at, but there is a very easy way for you to find that out yourself - the Playground!

That’s out of scope for this article series, but over on the left you can find the “Playground” section with “Chat / Text”, where you can test out models to see how they do, or run up to 3 models concurrently to compare how fast they are and the quality of the information they return.

On the model you’re looking for, click on “Available to request” and then on “Request model access”.

You’ll be taken to yet another page to request models, and you can check multiple models here if you’d like, to request them all at once. It doesn’t take any longer to get multiple models, even if they’re from different companies.

Then click on Next in the far bottom right (you’ll have to scroll down).

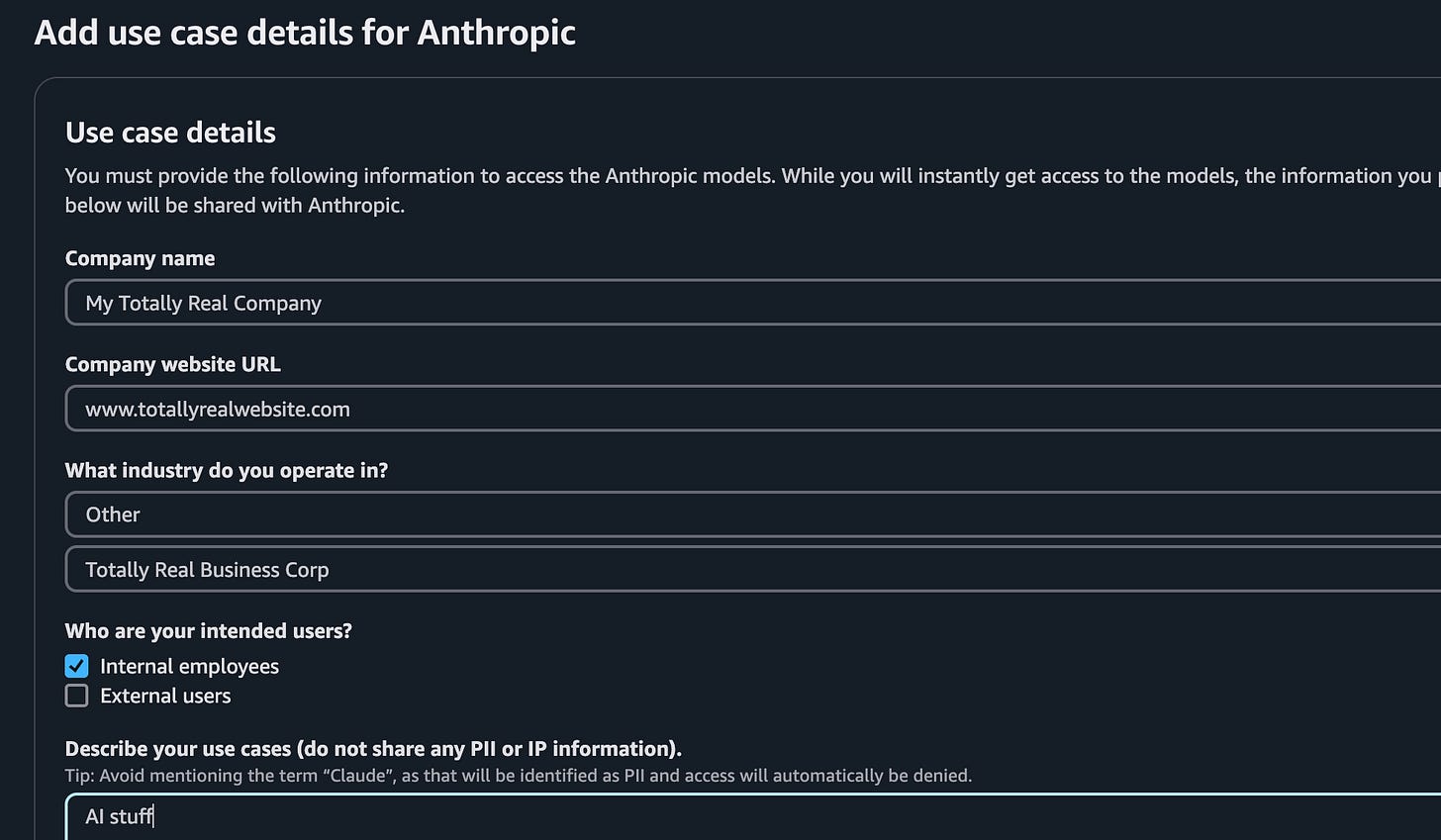

Anthropic interestingly makes you fill out a form with what you’re doing with the model. I have no idea why. For my real use case I put real data, but here I put some fun stuff to make you laugh. When it’s all filled out, hit Next in the bottom right.

In a very “no part of this makes sense” moment, read the note under “Describe your use case”: a PII filter will catch it if you say the word “Claude” (you know, the name of the model you’re requesting) and deny you automatically. I have no idea why ¯\_(ツ)_/¯

On the next page, hit Submit.

For a few minutes you’ll see that your access to this model is “In Progress”.

After a minute or two, it’ll swap to “Access granted”.

Let’s go talk to our AI!

Simple Local AI Request

We have big plans here - we’re going to have our slack bot extract conversations and feed them over to our AI, all automatic, serverless, and idempotent. That’s a ton of complexity! And complexity is solved by breaking it down into really simple building blocks.

So let’s start simple - let’s send a request to our AI manually, from our computer, using a local script, authenticated as a user with broad permissions.

First, let’s make sure we have Python 3.12 installed.

These instructions are for mac/linux. If you’re on windows, your installation and virtual environment steps will be different.

```
> python3.12 --version
Python 3.12.8
```

Sweet. Most development happens within virtual environments, so we don’t pollute all our other projects with specific versions and packages they probably don’t need (or are outright incompatible with). Let’s create one.

Navigate in your terminal to wherever you’ll be working on your python code, and run this command to create a virtual environment in that directory:

```
> python3.12 -m venv .
```

Then activate the virtual env:

```
> source ./bin/activate
```

Then you can install the dependencies we’re going to need:

```
> python3 -m pip install --upgrade pip  # Upgrade pip in the venv
> python3 -m pip install boto3          # For talking to AWS
> python3 -m pip install slack_bolt     # For our slack bot
```

Let’s switch to our IDE to write some python. We’re going to be walking through this file (`bedrock_claude_single_request.py`, which we’ll run later).

Let’s start, as in most projects, with imports. We need boto3 to interact with AWS services, and json to build the request body we send to AWS Bedrock and to decode the response we get back.

```python
###
# Imports
###

# Global
import boto3
import json
```

Then we’ll set some AWS-specific stuff, like the region we’ll be running in. You could totally look this up dynamically if you want, but hard-coding it works for our purposes today.

```python
###
# AWS Stuff
###

# Specify the AWS region
region_name = "us-west-2"
```
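If you’d rather not hard-code the region, a small sketch like this reads it from an environment variable and falls back to us-west-2. The choice of `AWS_REGION` is an assumption on my part (it happens to be one of the variables AWS Lambda sets for you, which will matter later in the series), and `resolve_region` is a hypothetical helper.

```python
import os


def resolve_region(default="us-west-2"):
    """Return $AWS_REGION if it's set and non-empty, otherwise the default."""
    # AWS_REGION is an assumed variable name for this sketch.
    return os.environ.get("AWS_REGION") or default


# Specify the AWS region
region_name = resolve_region()
```

Locally, you’d run `export AWS_REGION=us-west-2` (or just rely on the default).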

Now let’s set some global vars that we’ll reference throughout this script. These are the major levers and knobs we’ll touch to make our bot work differently.

`model_id` is the exact name of the model we’re going to call. This very long string comes from the AWS Bedrock page for this model. The date in this model ID (20241022) was only a few weeks old at the time of writing, so there are surely going to be new versions of the model that we’ll need to update to.

`anthropic_version` is the version of the Anthropic (the makers of the Claude Sonnet AI model we’re going to use) Messages API format that Bedrock expects in the request body.

`temperature` controls how much randomness goes into the AI’s responses. For Claude, the range is 0.0 to 1.0: values toward 1.0 make the model more creative (and more willing to make up anything it doesn’t know), while 0.0 makes its answers close to deterministic. For a technical bot, you’ll generally set this to a low value.

`model_guidance` is our system prompt. It’s sent with every request and grounds the model with foundational rules for how it should operate. You can tell it things that matter to you, like the current date, the company you work for, or any lingo or rules for speech you care about.

This is very much a “try and see” type of config, even for large companies building models. The AWS Bedrock Playground is very useful here: you can compare model performance in your browser with different instructions to see their quality, shape, and speed.

```python
###
# Constants
###

# Replace with your desired model ID
model_id = 'anthropic.claude-3-5-sonnet-20241022-v2:0'
anthropic_version = "bedrock-2023-05-31"
temperature = 0.2
model_guidance = """Assistant is a large language model trained to provide the best possible experience for developers and operations teams.
Assistant is designed to provide accurate and helpful responses to a wide range of questions.
Assistant answers should be short and to the point.
Assistant uses Markdown formatting. When using Markdown, Assistant always follows best practices for clarity and consistency.
Assistant always uses a single space after hash symbols for headers (e.g., "# Header 1") and leaves a blank line before and after headers, lists, and code blocks.
For emphasis, Assistant uses asterisks or underscores consistently (e.g., *italic* or **bold**).
When creating lists, Assistant aligns items properly and uses a single space after the list marker. For nested bullets in bullet point lists, Assistant uses two spaces before the asterisk (*) or hyphen (-) for each level of nesting.
For nested bullets in numbered lists, Assistant uses three spaces before the number and period (e.g., "1.") for each level of nesting.
"""
```
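Since the guidance is a good place to tell the model things like the current date, here’s a minimal sketch that appends today’s date to `model_guidance` at request time instead of hard-coding it. `build_system_prompt` is a hypothetical helper, and the exact wording of the date line is just an example.

```python
from datetime import date


def build_system_prompt(base_guidance, today=None):
    """Append a line with the current date to the system prompt.

    `today` can be passed in explicitly (handy for tests); it defaults to
    the local date on the machine running the script.
    """
    if today is None:
        today = date.today()
    return f"{base_guidance.rstrip()}\n\nThe current date is {today.isoformat()}.\n"


# e.g. send build_system_prompt(model_guidance) as "system" in the request body
```

Computing it per request (rather than once at import time) matters more once this runs as a long-lived service.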

Next up, we create a Bedrock client that we’ll use to talk to Bedrock. We tell it to use the `bedrock-runtime` service (the API used to actually invoke models) and to operate in the us-west-2 region via `region_name`.

Then we set a static `prompt` to ask the AI: “Write a short story about a cat”. You can put literally anything here. Of course, editing your python file every time you want to ask something new isn’t a very intuitive experience for you or your developers, but it’ll help prove out this simple building block, so we’ll leave it.

```python
# Create a Bedrock client
bedrock_client = boto3.client(
    'bedrock-runtime',
    region_name=region_name
)

# Static prompt
prompt = "Write a short story about a cat"
```
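To avoid editing the file for every new question, here’s a minimal sketch that takes the prompt from the command line and falls back to the cat story when no arguments are given. `prompt_from_argv` is a hypothetical helper; Python’s `argparse` would work just as well.

```python
import sys

DEFAULT_PROMPT = "Write a short story about a cat"


def prompt_from_argv(argv, default=DEFAULT_PROMPT):
    """Join all command-line arguments after the script name into one prompt."""
    words = argv[1:]
    return " ".join(words) if words else default


# Static prompt, unless one was passed on the command line
prompt = prompt_from_argv(sys.argv)
```

With that in place, `python3.12 bedrock_claude_single_request.py "Write a haiku about DNS"` sends your own prompt, and running it with no arguments behaves exactly like before.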

Let’s send our request and get a response! We reference the bedrock client that we just built to invoke our model, passing a lot of the variables we set above to this request.

`modelId` tells it which model to send the request to.

The `guardrailIdentifier` and `guardrailVersion` lines are commented out for now. We’ll add the Guardrail config once we confirm this is working.

`body` is the JSON package for the request, with all the inner (Claude-facing) settings: the `anthropic_version`, a `max_tokens` of 1024, the `temperature`, and the model guidance as the `system` prompt.

The `messages` list is where we start doing something cool. We’re building out a “conversation” of messages. This is critical for our Slack bot to understand a whole conversation and respond to all of it coherently, but we mostly don’t use it for this simple example.

Here, we just say that we are the user (`"role": "user"`) and that we’re sending text content (`"type": "text"`) whose `text` is our “tell us a cat story” prompt.

```python
response = bedrock_client.invoke_model(
    modelId=model_id,
    # guardrailIdentifier="xxxxxxxxxxxx",
    # guardrailVersion="DRAFT",
    body=json.dumps(
        {
            "anthropic_version": anthropic_version,
            "max_tokens": 1024,
            "temperature": temperature,
            "system": model_guidance,
            "messages": [
                {
                    "role": "user",
                    "content": [
                        {
                            "type": "text",
                            "text": prompt,
                        }
                    ]
                }
            ],
        }
    ),
)
```
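Once this works, it’s worth wrapping the call in a function so the Slack side of the bot can reuse it later. Here’s a minimal sketch under a few assumptions: `build_claude_body` and `ask_claude` are hypothetical names, `client` is anything with an `invoke_model` method (like the `bedrock_client` above), and any extra keyword arguments, such as the guardrail settings, are passed straight through to `invoke_model`. It also rejects temperatures outside Claude’s 0.0 to 1.0 range before making a call.

```python
import json


def build_claude_body(messages, system, anthropic_version="bedrock-2023-05-31",
                      max_tokens=1024, temperature=0.2):
    """Serialize an Anthropic Messages request body in the shape Bedrock expects."""
    # Claude accepts temperatures from 0.0 to 1.0; fail fast on anything else.
    if not 0.0 <= temperature <= 1.0:
        raise ValueError(f"temperature must be between 0.0 and 1.0, got {temperature}")
    return json.dumps({
        "anthropic_version": anthropic_version,
        "max_tokens": max_tokens,
        "temperature": temperature,
        "system": system,
        "messages": messages,
    })


def ask_claude(client, model_id, prompt, system, **invoke_kwargs):
    """Send one user prompt to `model_id` and return the raw invoke_model response.

    Extra keyword arguments (e.g. guardrailIdentifier / guardrailVersion)
    are passed straight through to client.invoke_model.
    """
    messages = [{"role": "user", "content": [{"type": "text", "text": prompt}]}]
    return client.invoke_model(
        modelId=model_id,
        body=build_claude_body(messages, system),
        **invoke_kwargs,
    )
```

In our script, `response = ask_claude(bedrock_client, model_id, prompt, model_guidance)` would produce the same kind of `response` object that the parsing code expects.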

Then, to finish the script, we massage the response a bit. The response body comes back as a stream of bytes, so first we `read()` it and decode it from UTF-8 into a string.

Then we load that string as JSON.

Then we dig into the parsed JSON to pull out the text of the first content block of the AI’s reply.

Finally, we print it.

```python
# Read the response body
response_body = response['body'].read().decode('utf-8')
response_json = json.loads(response_body)
# Text of the first content block (empty string if there isn't one)
response_text = (response_json.get("content") or [{}])[0].get("text", "")
print(response_text)
```
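That parsing only looks at the first content block. A slightly more defensive sketch joins every text block and also returns `stop_reason`, which in the Anthropic response format should read `max_tokens` if the reply was cut off by our 1024-token limit. `parse_claude_response` is a hypothetical helper name, and like the original code it reads the body stream, which can only be read once.

```python
import json


def parse_claude_response(response):
    """Return (text, stop_reason) from a Bedrock invoke_model response for Claude."""
    # response["body"] is a stream; json.loads accepts the UTF-8 bytes directly.
    payload = json.loads(response["body"].read())
    # Join every text block rather than assuming there's exactly one.
    text = "".join(
        block.get("text", "")
        for block in payload.get("content", [])
        if block.get("type") == "text"
    )
    return text, payload.get("stop_reason")
```

If `stop_reason == "max_tokens"`, you can bump `max_tokens` or ask the model for a shorter answer.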

It all looks good to me, let’s run it! If you get a giant error message, check whether you’ve exported AWS authentication information into your terminal.

```
> python3.12 bedrock_claude_single_request.py
# The Curious Cat

A small orange tabby named Whiskers sat perched on the windowsill, watching raindrops race down the glass. His tail twitched with excitement as a bird landed on the nearby branch. Determined to investigate, he pressed his pink nose against the cold window. The bird, unaware of its feline audience, hopped closer. Whiskers' whiskers quivered with anticipation. *Just one more step*, he thought. But as he shifted his weight, he slipped off the sill with an undignified "meow," landing on the carpet below. The bird flew away, and Whiskers pretended he meant to jump down all along. After all, it was nearly dinner time anyway.
```

Well that’s a lovely story.

Guardrails to Keep Those Tokens Clean

Sweet, we have an AI! That’s awesome. Since we’re building this for an enterprise, we also need some guardrails around questions and answers. Azure calls this a Content Filter, and AWS calls it, well, Guardrails.

As with most AWS resources, this one can be built with Terraform. Let’s go through it line by line.

First, we declare an `aws_bedrock_guardrail` resource named `guardrail`. Resources that are used together are usually tied to each other with a reference (that’s how AzureAI links a Content Filter to a Deployment!), but not in AWS. Instead, we’ll reference this guardrail from python on each request (which is kind of an odd architectural choice by AWS, in my opinion).

Next, we assign a `provider`. This isn’t required, but I’m building resources in several regions, so I need to tell the guardrail to build in the same region where we’re calling our AI model (us-west-2).

Then we set a `name`, and `blocked_input_messaging`, the message returned when the content filter blocks an inbound request, from the user -> AI. Users say all sorts of silly stuff, so you can block it before it ever gets to your AI.

`blocked_outputs_messaging` sets the outbound filter message, from the AI -> user. This is particularly useful, because an AI can appear to represent an official business position and that can get you in trouble; we’ll talk through some examples below.

```hcl
resource "aws_bedrock_guardrail" "guardrail" {
  provider                  = aws.west2
  name                      = "AiBotGuardrail"
  blocked_input_messaging   = "Your input has been blocked by our content filter. Please try again. If this is an error, discuss with the DevOps team."
  blocked_outputs_messaging = "The output generated by our system has been blocked by our content filter. Please try again. If this is an error, discuss with the DevOps team."
  description               = "DevOpsBot Guardrail"
```

Within the same guardrail resource, we can set content filter policies and their strengths. I’m leaving the default of MEDIUM for most of them; `PROMPT_ATTACK` is set to NONE here, which effectively turns that filter off. More info on what those levels (low, medium, high) mean is here. I haven’t found an exhaustive list of the filters available, and I doubt it stays the same for long; we’ll see AWS keep adding different types of filters for us to use.

```hcl
  content_policy_config {
    filters_config {
      input_strength  = "MEDIUM"
      output_strength = "MEDIUM"
      type            = "INSULTS"
    }
    filters_config {
      input_strength  = "MEDIUM"
      output_strength = "MEDIUM"
      type            = "MISCONDUCT"
    }
    filters_config {
      input_strength  = "MEDIUM"
      output_strength = "MEDIUM"
      type            = "SEXUAL"
    }
    filters_config {
      input_strength  = "MEDIUM"
      output_strength = "MEDIUM"
      type            = "VIOLENCE"
    }
    filters_config {
      input_strength  = "NONE"
      output_strength = "NONE"
      type            = "PROMPT_ATTACK"
    }
    filters_config {
      input_strength  = "MEDIUM"
      output_strength = "MEDIUM"
      type            = "HATE"
    }
  }
```

There are a few sections here, and all of them are fascinating. All of this belongs to the same Guardrail resource configuration.

First up is `sensitive_information_policy_config`. This lets you anonymize (mask the detected value with a placeholder) or block any request that runs afoul of this filter. The list of entity types appears to cover all the different kinds of PII, or Personally Identifiable Information. The Terraform example for this resource includes a filter that BLOCKs on NAME, so even when I requested innocuous things, like “tell me a story about a dog”, I’d get blocked, because the AI would name the dog and this filter would block the response.

PII filtering is powerful, but don’t copy the default example from Terraform - it’ll break a ton of use cases.

Next, `topic_policy_config` lets you define specific topics this bot isn’t permitted to discuss. Financial advice is provided as an example, and it’s one you should use. Your bot should never advise anyone to buy or sell any asset. If it has access to any internal company data, its advice could be construed as insider trading, and even if it doesn’t, it looks like insider trading. Just stay away from that. Interestingly, the topic is described with a human-language definition and example questions, which are likely used to build a specific, computationally efficient filter on the back-end.

Finally, `word_policy_config` is a word list policy, which can be used to block profanity (via the managed `PROFANITY` list), or really any other word you deem objectionable (custom words go in a `words_config` block). If you want to block folks from discussing the Packers (American Football), you 100% can with this config.

```hcl
  sensitive_information_policy_config {
    pii_entities_config {
      action = "ANONYMIZE"
      type   = "US_SOCIAL_SECURITY_NUMBER"
    }
  }

  topic_policy_config {
    topics_config {
      name       = "investment_topic"
      examples   = ["Where should I invest my money ?"]
      type       = "DENY"
      definition = "Investment advice refers to inquiries, guidance, or recommendations regarding the management or allocation of funds or assets with the goal of generating returns."
    }
  }

  word_policy_config {
    managed_word_lists_config {
      type = "PROFANITY"
    }
  }
}
```
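Before wiring the guardrail into our model call, you can test it on its own. Here’s a sketch, assuming the `bedrock-runtime` client’s `apply_guardrail` call (the ApplyGuardrail API), which checks a piece of text against a guardrail without invoking a model. `guardrail_blocks` is a hypothetical helper, and you’ll need the guardrail’s ID, which we find in the console below.

```python
def guardrail_blocks(client, text, guardrail_id, version="DRAFT", source="INPUT"):
    """Check `text` against a guardrail without calling a model.

    `client` is assumed to be boto3.client("bedrock-runtime", ...).
    `source` is "INPUT" for user text or "OUTPUT" for model text.
    Returns (blocked, message); as I understand it, message is our
    blocked_*_messaging string when the guardrail blocks the text.
    """
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=version,
        source=source,
        content=[{"text": {"text": text}}],
    )
    blocked = response.get("action") == "GUARDRAIL_INTERVENED"
    outputs = response.get("outputs", [])
    message = outputs[0].get("text", "") if outputs else ""
    return blocked, message


# Usage (needs AWS credentials; "xxxxxxxxxxxx" is a placeholder ID):
#   import boto3
#   runtime = boto3.client("bedrock-runtime", region_name="us-west-2")
#   print(guardrail_blocks(runtime, "What stock should I buy today?", "xxxxxxxxxxxx"))
```

This is handy for tuning filter strengths, since you can throw a batch of sample questions at the guardrail without generating any model output.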

Okay, our guardrail is ready. Let’s update our python script to reference it.

Adding Some GuardRails (It’s Easier Than You Think)

It’s incredibly easy to add a guardrail to our API calls, but we’ll need some information:

The Guardrail ID

The Guardrail version we want to reference - the working version you make changes to by default is called DRAFT, and then you can publish particular versions for use. We’ll just reference DRAFT for ease.

Let’s go find our Guardrail in the Bedrock console. We need a short alphanumeric string called the “ID”. Copy that down.

Now let’s switch back to our simple python request code and add the Guardrail config to the call. Uncomment the `guardrailIdentifier` and `guardrailVersion` lines, and make sure to set `guardrailIdentifier` to the actual ID of the guardrail you created.

```python
# Create a request to the model
response = bedrock_client.invoke_model(
    modelId=model_id,
    guardrailIdentifier="xxxxxxxxxxxx",
    guardrailVersion="DRAFT",
    body=json.dumps(
        # ...the rest of the request is unchanged
```
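Hard-coding the ID works, but here’s a minimal sketch that reads the guardrail settings from environment variables instead and skips the guardrail entirely when none is configured. The variable names `BEDROCK_GUARDRAIL_ID` and `BEDROCK_GUARDRAIL_VERSION` are made up for this example, as is the `guardrail_kwargs` helper.

```python
import os


def guardrail_kwargs(environ=os.environ):
    """Build guardrail arguments for invoke_model from environment variables.

    BEDROCK_GUARDRAIL_ID / BEDROCK_GUARDRAIL_VERSION are made-up names for
    this sketch. Returns {} when no guardrail ID is configured.
    """
    guardrail_id = environ.get("BEDROCK_GUARDRAIL_ID")
    if not guardrail_id:
        return {}
    return {
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": environ.get("BEDROCK_GUARDRAIL_VERSION", "DRAFT"),
    }


# Usage with the request above:
#   response = bedrock_client.invoke_model(modelId=model_id, body=..., **guardrail_kwargs())
```

Keeping the ID out of the code also means the same script can point at a published guardrail version in production and DRAFT while you experiment.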

Let’s update our static request to one that clearly violates our guardrail rules: asking what stock we should buy. We specifically told our guardrail to deny requests like this.

```python
# Static prompt
prompt = "What stock should I buy today?"
```

Let’s run it and see what happens.

```
> python3.12 bedrock_claude_single_request.py
Your input has been blocked by our content filter. Please try again. If this is an error, discuss with the DevOps team.
```

Perfect - we were denied, just like we should be. Let’s revert our prompt to a cute story request, this time about a puppy, to make sure the guardrail isn’t blocking valid prompts.

```python
# Static prompt
prompt = "Write a short story about a puppy"
```

And run it again:

```
> python3.12 bedrock_claude_single_request.py
# The Curious Puppy

Max, a golden retriever puppy, bounced through his backyard on a sunny morning. His floppy ears perked up at every new sound, and his tail wagged with endless enthusiasm. He discovered a colorful butterfly and chased it around the garden, tripping over his own paws. The butterfly landed on his nose, making him cross-eyed for a moment before it fluttered away. Tired from his adventure, Max curled up under a shady tree, dreaming of more butterflies and belly rubs, his tiny snores bringing smiles to his family watching from the porch.
```

Well that’s adorable, but more than that, it worked. Our guardrail is now in place.

Notably, I can’t find a way to require that every request use a guardrail. That’s much easier on the AzureAI side than here. If you know a way, please comment and teach me!
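Comparing the printed text against our blocked message works, but there may be a more direct signal. My reading of the Bedrock docs is that when a guardrail is attached, the `invoke_model` response body includes an `amazon-bedrock-guardrailAction` field set to `INTERVENED` or `NONE`; treat that as an assumption and print the raw JSON once to confirm. Here’s a minimal sketch that uses it, with `summarize_reply` as a hypothetical helper that takes the parsed `response_json` from our script.

```python
def summarize_reply(response_json):
    """Return (text, intervened) from a parsed invoke_model response body.

    Assumes the body carries "amazon-bedrock-guardrailAction" ("INTERVENED"
    or "NONE") when a guardrail is attached; verify against your raw output.
    """
    text = "".join(
        block.get("text", "")
        for block in response_json.get("content", [])
        if block.get("type") == "text"
    )
    intervened = response_json.get("amazon-bedrock-guardrailAction") == "INTERVENED"
    return text, intervened


# Usage in our script, after response_json = json.loads(response_body):
#   text, intervened = summarize_reply(response_json)
#   if intervened:
#       print("(guardrail stepped in)")
#   print(text)
```

Having an explicit flag will be useful once Slack is in the loop, for example to log blocked requests separately.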

But What About a Conversation?

That works for a single request, which is great, but what about when we need to provide the AI further guidance? After all, humans are conversational creatures, and they might want to ask clarifying questions.

Here’s how we don’t want this to go:

Human: Do cats come in brown?

AI: They do

Human: What about dogs?

AI: ??

Clearly, we need the AI to get the context of a request, not just the most recent request. Thankfully, the APIs for these AIs support that (woot).

Let’s switch over to the python/bedrock_claude_conversation.py file for a working example of how we can pass a conversation to Claude.

The format of the file is very similar, except instead of sending just a single message in the `messages` array of our request, we send several, in order. This is intended to represent a “conversation” between a human and the AI model.

Note that messages from the human are marked `"role": "user"`, and responses from the AI model are marked `"role": "assistant"`.

The conversation is intended to read like this:

Human: Write a short story about a cat

AI: There once was a cat named whiskers…

Human: Now make it about a dog

AI: (let’s find out)

```python
response = bedrock_client.invoke_model(
    modelId=model_id,
    body=json.dumps(
        {
            "anthropic_version": anthropic_version,
            "max_tokens": 1024,
            "temperature": temperature,
            "system": model_guidance,
            "messages": [
                {
                    "role": "user",
                    "content": [
                        {
                            "type": "text",
                            "text": "Write a short story about a cat",
                        }
                    ]
                },
                {
                    "role": "assistant",
                    "content": [
                        {
                            "type": "text",
                            "text": "There once was a cat named Whiskers. Whiskers was a very curious cat who loved to explore the world around him. One day, Whiskers found a mysterious door in the garden. He pushed it open with his nose and found himself in a magical world full of talking animals and enchanted forests. Whiskers had many adventures in this new world and made many new friends. He learned that sometimes, the most magical things can be found in the most unexpected places.",
                        }
                    ]
                },
                {
                    "role": "user",
                    "content": [
                        {
                            "type": "text",
                            "text": "Now make it about a dog",
                        }
                    ]
                },
            ],
        }
    ),
)
```

Here are the results. Note that the AI model understood the context of the request, because we included it. Even though the prompt was just “Now make it about a dog”, the model understood that we had just asked it for a story, and that we now wanted one about a dog.

This is a silly example, but in real life it permits exploratory, exacting questions and a conversational back-and-forth between the person asking and the model answering.

```
> python3 bedrock_claude_conversation.py
There once was a dog named Scout. Scout was a playful golden retriever who lived for adventure and belly rubs. One rainy afternoon, Scout discovered a rainbow in his backyard. Following his instincts, he chased it all the way to the park, where he found not a pot of gold, but a group of puppies having the time of their lives in a puddle. Scout joined in their splashing and playing, making new friends and getting thoroughly muddy. He learned that sometimes the best treasures aren't things you can keep, but the joy of making new friends and having fun.
```

It’s interesting to note that the roles are either “user” or “assistant” - there’s no way to mark “speaker1” vs “speaker2”. So the model will basically assume that everyone who isn’t the AI model is a single speaker.

That’s probably not a huge deal, but it’s a limitation of the state of the art today.

If you know a way to disambiguate different speakers in these API requests, please comment to let me know!
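One common workaround, not a feature of the API itself: keep the roles as `user` and `assistant`, but prefix each human line with the speaker’s name and merge back-to-back human turns into a single `user` message, since (as I understand it) the API expects the two roles to alternate. Here’s a minimal sketch, assuming a hypothetical list of `(speaker, text, is_bot)` tuples, roughly what we could build from a Slack thread later in the series; `build_messages` is a hypothetical helper.

```python
def build_messages(turns):
    """Convert (speaker, text, is_bot) tuples into alternating Claude messages.

    Human lines are prefixed with "speaker: " so the model can tell people
    apart; consecutive turns with the same role are merged into one message.
    """
    messages = []
    for speaker, text, is_bot in turns:
        role = "assistant" if is_bot else "user"
        line = text if is_bot else f"{speaker}: {text}"
        if messages and messages[-1]["role"] == role:
            # Same role twice in a row: append to the previous message.
            messages[-1]["content"][0]["text"] += "\n" + line
        else:
            messages.append({"role": role, "content": [{"type": "text", "text": line}]})
    return messages
```

The model then sees `alice:` and `bob:` in the text and can often tell them apart, though nothing in the API forces it to.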

Summary

This is getting long, so let’s break this into two parts - that’ll make this a 4-part series, a personal best for me.

In this article, we talked about how AWS Bedrock works, and went and got access to a foundation model from Anthropic called Claude v3.5 Sonnet. We sent a simple, single request to it via Python and got a response.

We built a Guardrail resource using terraform, and talked about all the ways we can secure our AI inputs and outputs via native AWS tooling, and then connected our API request to the Guardrail resource and tested it with sample inputs.

Then we talked about how we can pass an entire conversation to the AI model so it can understand and respond to an entire conversation’s worth of context.

In the next article we’re going to stitch article 1 (slack bot) and article 2 (AI via Python) together with our python script so our users in Slack can send requests to our AI model and get responses in Slack. In article 4, we’ll deploy the whole thing to AWS Lambda so it’s serverless, self-healing, and we don’t have to keep our cool python script running on our computer.

Hope this has been a fun ride so far. Lots of cool stuff coming.

Good luck out there!

kyler