🔥Let’s Do DevOps: Writing a New Terraform/Tofu AzureRM Data Source (All Steps!) 🚀


This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

Note, I’ve also presented this content as a video at this link if that’s how you’d prefer to learn :) ❤

Hey y’all!

I’ve spent a ton of time writing Terraform code itself, as well as the pipelines that wrap around it to automate tasks. I like to think I’m pretty good at it. But I’ve always wanted to move one rung up the toolchain and write some code for Terraform itself.

Note: I use Terraform and Tofu interchangeably throughout this doc. I disagree with Hashi moving Terraform to a source-available license and away from truly open source.

Rather than the relatively intuitive HCL language that Terraform/Tofu use, the internals of Terraform providers are written in Go/Golang, a relatively low-level language (at least compared to HCL) that spun out of Google.

It’s a compiled language, which (for me, at least) makes it quite a bit more difficult to troubleshoot and work with than any language I’ve used previously.

So I had a goal! And that goal sat idle for a few years, because all the things I wanted to do in AWS were already pretty well documented and achievable. However, then I switched to Azure. $MSFT hasn’t embraced Terraform/Tofu nearly as much as AWS has, instead propping up their (clearly quite a bit worse, sorry $MSFT) ARM and Bicep languages.

Which is a lot of words to say, I found a lot of problems… er, opportunities over on the Azure side of the house! Things weren’t broken (well, some things were), but more often what I wanted to do wasn’t possible in the current version of the provider. For instance, data sources exist for a single instance of a resource, but not for finding several resources that match search parameters.

And so when an internal project at my company required finding any number of IP Group resources in Azure (built in a different Terraform layer/state), I decided it was finally time to add that functionality to the provider myself.

If you want to jump to the conclusion, here’s the PR that was finally merged, and here is the documentation page for the ip_groups data source. It was released in AzureRM provider version v3.89.0.

Here is, as well as I can remember, everything I did to contribute to the provider and to finally get my contributions accepted and rolled out, so all of Terraform/Tofu’s userbase can benefit from this code.

Confirm It’s Not Available

First of all, let’s confirm we have to do any work at all. The AzureRM Provider website docs are excellent, and we can see there is a data source for a single IP group here, but it’s only capable of finding a single exact-match IP group in a resource group.

```hcl
data "azurerm_ip_group" "example" {
  name                = "example1-ipgroup"
  resource_group_name = "example-rg"
}

output "cidrs" {
  value = data.azurerm_ip_group.example.cidrs
}
```

That’s great, but I need to find any number (n) of IP Groups. There’s an architectural reason for this. Each IP Group can contain up to 5k CIDRs, which is a lot of IPs! But I want to list out all the IPs for entire countries (several of them, actually), and it takes way more than 5k CIDRs to represent either the US or India, both of which I want to grab. Because these IP allocations constantly change, the number of IP Groups we need will change too. Right now we’re at 22 to represent the US.
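To make the "n IP Groups" math concrete, here’s a minimal Go sketch (an illustration, not code from the provider) that splits a list of CIDRs into batches of at most 5,000, one batch per IP Group. The `chunkCIDRs` helper and `maxCIDRsPerGroup` constant are hypothetical names; the 5,000 figure is the per-group limit described above.

```go
package main

import "fmt"

// maxCIDRsPerGroup is the per-IP-Group limit described above (hypothetical constant name).
const maxCIDRsPerGroup = 5000

// chunkCIDRs splits cidrs into consecutive batches of at most size entries.
// Each batch would become one IP Group, so len(result) is the number of groups needed.
func chunkCIDRs(cidrs []string, size int) [][]string {
	var batches [][]string
	for start := 0; start < len(cidrs); start += size {
		end := start + size
		if end > len(cidrs) {
			end = len(cidrs)
		}
		batches = append(batches, cidrs[start:end])
	}
	return batches
}

func main() {
	// Fake data: 12,000 placeholder CIDRs standing in for a country's allocations.
	cidrs := make([]string, 12000)
	for i := range cidrs {
		cidrs[i] = fmt.Sprintf("10.%d.%d.0/24", i/256, i%256)
	}

	batches := chunkCIDRs(cidrs, maxCIDRsPerGroup)
	fmt.Println("IP Groups needed:", len(batches))
	for i, b := range batches {
		fmt.Printf("group %02d: %d CIDRs\n", i+1, len(b))
	}
}
```

Because the batch count grows and shrinks with the CIDR list, the consuming config can’t hard-code a fixed set of group names, which is exactly why a multi-group data source helps.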

So really I’d like something like this: match n IP Groups by a name substring, and then pull out their IDs or names (or whatever) as a list.

```hcl
data "azurerm_ip_groups" "example" {
  name                = "existing" # <-- This is a substring match, not an exact match
  resource_group_name = "existing"
}

output "ids" {
  value = data.azurerm_ip_groups.example.ids
}

output "names" {
  value = data.azurerm_ip_groups.example.names
}
```

However, that doesn’t exist. I wonder how hard it can be to add it? Let’s go find out.

Find the Code

It’s oddly hard to find the actual code that builds resources and data sources. It’d be great if Hashi/the Tofu group added a link from those very accessible web pages to the code behind each resource. Instead, let’s go spelunking.

Eventually I found the data source file for a single ip_group in the path:

internal/services/network/ip_group_data_source.go

The source is here, and interestingly it has absolutely no comments. Why aren’t there comments? Maybe everyone who works on this code is an expert at Go? I certainly am not, so I extensively used GitHub Copilot to explain things to me.

Let’s walk through what you’ll find in these files.

Break Down an Existing Data Source

First, there is a package name, which is a grouping of code. This grouping indicates to the Go language which code belongs together.

```go
package network
```

Then there are the imports. These are code libraries used by the code in this file. There’s some very cool IDE support (at least in Visual Studio Code) that automatically updates this list as you write code. This isn’t a new concept to me, but it isn’t something I’ve worked with extensively; usually I write my own tools entirely, because what I’m doing is simple. This is a more complex use case, so it makes sense that it’d be a more complex implementation.

```go
import (
	"fmt"
	"time"

	"github.com/hashicorp/go-azure-helpers/resourcemanager/commonschema"
	"github.com/hashicorp/go-azure-helpers/resourcemanager/location"
	"github.com/hashicorp/terraform-provider-azurerm/internal/clients"
	"github.com/hashicorp/terraform-provider-azurerm/internal/services/network/parse"
	"github.com/hashicorp/terraform-provider-azurerm/internal/tags"
	"github.com/hashicorp/terraform-provider-azurerm/internal/tf/pluginsdk"
	"github.com/hashicorp/terraform-provider-azurerm/internal/timeouts"
	"github.com/hashicorp/terraform-provider-azurerm/utils"
)
```

Then there’s a primary function. This is a term I made up: it’s the function that backs azurerm_ip_group when your Terraform code calls it and passes it info. It returns the data source’s definition, which includes the schema the plugin SDK uses to validate your input and, in the Read field, the child function (dataSourceIpGroupRead) that receives all the data and does the actual work. Note that name is marked Required: true. That’s what triggers the familiar error telling you a resource or data source requires a field you forgot to populate; if you don’t pass in a required field, Terraform errors and stops.

Also see cidrs in the schema, which is marked Computed: true. That means you can’t set it from your Terraform config; instead, it’s populated by the provider’s code and API calls. If someone tries to set that field in their config, Terraform exits with an error.

There’s other interesting stuff here that I won’t dive into fully. Ask Copilot for more info!

```go
func dataSourceIpGroup() *pluginsdk.Resource {
	return &pluginsdk.Resource{
		Read: dataSourceIpGroupRead,
		Timeouts: &pluginsdk.ResourceTimeout{
			Read: pluginsdk.DefaultTimeout(5 * time.Minute),
		},
		Schema: map[string]*pluginsdk.Schema{
			"name": {
				Type:     pluginsdk.TypeString,
				Required: true,
			},
			"resource_group_name": commonschema.ResourceGroupNameForDataSource(),
			"location":            commonschema.LocationComputed(),
			"cidrs": {
				Type:     pluginsdk.TypeSet,
				Computed: true,
				Elem:     &pluginsdk.Schema{Type: pluginsdk.TypeString},
				Set:      pluginsdk.HashString,
			},
			"tags": tags.SchemaDataSource(),
		},
	}
}
```
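To make Required and Computed concrete, here’s a toy model in plain Go. It is NOT how the plugin SDK is implemented; the `field` type and `validateConfig` function are hypothetical, written only to mirror the two rules described above: required fields must be set, and computed-only fields can’t be.

```go
package main

import (
	"fmt"
	"sort"
)

// field is a hypothetical, stripped-down stand-in for a schema entry (not the SDK's type).
type field struct {
	Required bool
	Computed bool
}

// validateConfig applies two toy rules: required fields must be set,
// and computed-only fields must not be set. Messages are sorted for stable output.
func validateConfig(schema map[string]field, config map[string]string) []string {
	var problems []string
	for name, f := range schema {
		_, set := config[name]
		switch {
		case f.Required && !set:
			problems = append(problems, fmt.Sprintf("%q is required", name))
		case f.Computed && set:
			problems = append(problems, fmt.Sprintf("%q is computed and cannot be set", name))
		}
	}
	sort.Strings(problems)
	return problems
}

func main() {
	schema := map[string]field{
		"name":                {Required: true},
		"resource_group_name": {Required: true},
		"cidrs":               {Computed: true},
	}
	// Forgot "name", and tried to set the computed "cidrs" field.
	config := map[string]string{
		"resource_group_name": "example-rg",
		"cidrs":               "10.0.0.0/24",
	}
	for _, p := range validateConfig(schema, config) {
		fmt.Println("Error:", p)
	}
}
```

The real SDK does far more (types, nested blocks, Optional+Computed fields), but the two checks above are the behavior the paragraph describes.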

Then there’s the child function that does the work of looking up the information, erroring if it can’t be found, sorting out the info, etc. This is only a small snippet of what the ip_group data source code does.

```go
func dataSourceIpGroupRead(d *pluginsdk.ResourceData, meta interface{}) error {
	client := meta.(*clients.Client).Network.IPGroupsClient
	subscriptionId := meta.(*clients.Client).Account.SubscriptionId
	ctx, cancel := timeouts.ForRead(meta.(*clients.Client).StopContext, d)
	defer cancel()

	// Lots more
}
```

Suffice it to say, I’m in over my head. Awesome! (For real, it’s fun to learn new stuff.) There is, however, a lot going for me:

This is a data source, not a resource. Those are a lot simpler: I don’t have to handle lifecycle events, like checking whether a resource exists or which fields trigger a rebuild. I just have to find and output data.

There is an existing single-group data source, with tests, that works. Maybe I can just add an s so all the calls say ip_groups instead! (Read: it was way more than that.)

Well, let’s start!

Clone the Repo and Start Fiddling

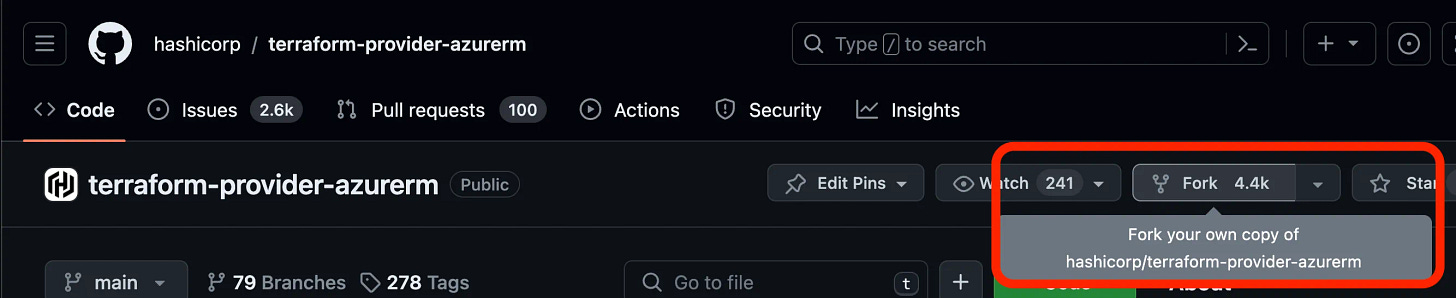

The repo for the provider is here, but I don’t have the ability to create branches and stuff directly there. For most open source projects, you instead fork the repo to a repo you own. That way you have write access, and can mess around and test stuff on your own until you’re ready. When you are finally ready, you can do a PR from your fork to the real target repo just like normal.

So first, let’s create a fork!

Click the Fork button, and we’re done! Well, at least we’re done creating a fork. Now clone that repo to your computer.

I have a VSC (Visual Studio Code) workspace with several hundred repos all open within it. That works for me, but it doesn’t work for Go: in my experience, the Go tooling in VSC needs the repo folder opened on its own, in its own VSC window. So go do that!


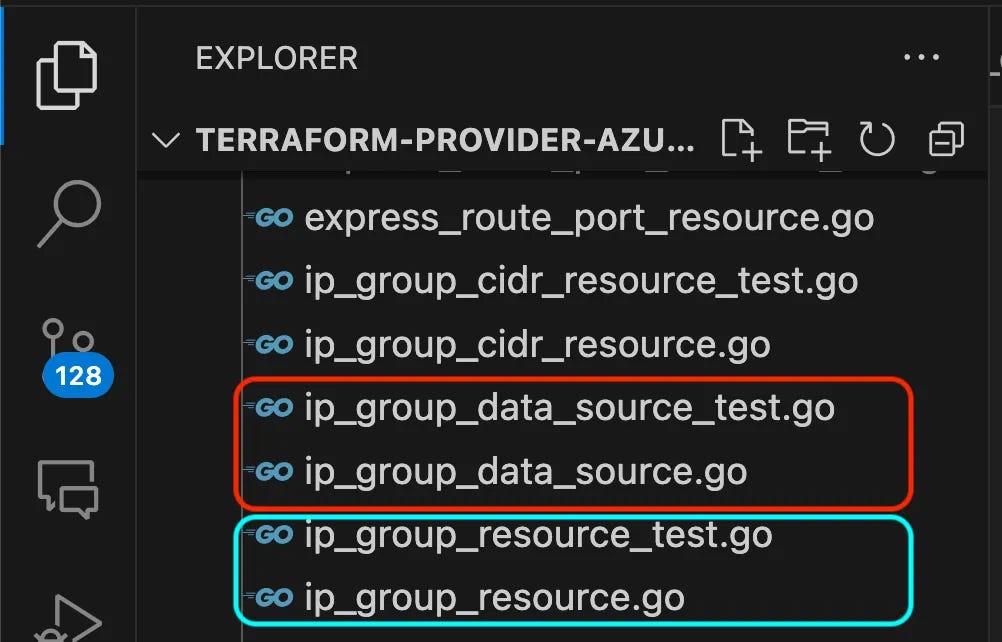

Once we find our way to the code, we can see that there are several files we need to pay attention to. In red 🔴, the IP Group data source itself (ip_group_data_source.go) and its acceptance tests (ip_group_data_source_test.go); in blue 🔵, the IP Group resource (ip_group_resource.go) and its acceptance tests (ip_group_resource_test.go).

Interestingly, the data source tests reuse the resource tests’ configurations to build and test resources. We’ll dig into that more soon.

I immediately copied the existing ip_group data source files and added an s to the file names so they were unique. I started walking through the sections just like I shared above, having GitHub Copilot explain what each section of code was for, and then what each command did.

Go is Dense! (And sometimes, me too!)

Go is a dense language! There are quite a few code blocks like this one. It’s only a few lines, but it’s doing SO MUCH!

On the first line, we declare the variables resp and err and assign them (:=) the first and second return values of the client.Get call, passing in the context, resource group, and name. The client.Get code doesn’t live in this file; you have to find where client was established, and then follow the path to the source to find the Get function and see what we’re passing the data to.

For any object-oriented programmer, I know you’re laughing a bit; this is basic stuff. But it was new(-ish) to me!

Then on the second line, we check whether err is something other than nil (Go’s null). If it is, we return an error. A Go function exits at the first return statement it hits, whether that return signals success or failure. Here, any err value other than nil is treated as a failure, so we return a new error saying the IP Group wasn’t found, and that error bubbles up to the main Terraform output.

```go
resp, err := client.Get(ctx, id.ResourceGroup, id.Name, "")
if err != nil {
	return fmt.Errorf("%s was not found", id)
}
```

One of my first clues that this might work is right here. Do you see it? The client variable is set to the IPGroupsClient hanging off the meta object, and Groups is plural!

```go
func dataSourceIpGroupRead(d *pluginsdk.ResourceData, meta interface{}) error {
	client := meta.(*clients.Client).Network.IPGroupsClient
	// ...
}
```
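The meta.(*clients.Client) part is a Go type assertion: meta arrives as an empty interface (interface{}), and the assertion recovers the concrete client type so we can reach its fields. Below is a self-contained sketch of that pattern. The `Client`, `NetworkClient`, and `FakeIPGroupsClient` types are hypothetical stand-ins for the provider’s real ones, and the comma-ok form shown here avoids a panic when the type doesn’t match (the provider code uses the single-value form, which panics on a mismatch).

```go
package main

import "fmt"

// Hypothetical stand-ins for the provider's client structs.
type FakeIPGroupsClient struct{ Name string }

type NetworkClient struct {
	IPGroupsClient *FakeIPGroupsClient
}

type Client struct {
	Network *NetworkClient
}

// readWithMeta receives meta as an empty interface, like a data source Read function does.
func readWithMeta(meta interface{}) (string, error) {
	// Comma-ok type assertion: ok is false (instead of a panic) if meta isn't a *Client.
	c, ok := meta.(*Client)
	if !ok {
		return "", fmt.Errorf("unexpected meta type %T", meta)
	}
	client := c.Network.IPGroupsClient
	return client.Name, nil
}

func main() {
	meta := &Client{Network: &NetworkClient{IPGroupsClient: &FakeIPGroupsClient{Name: "ip-groups-client"}}}

	name, err := readWithMeta(meta)
	fmt.Println(name, err) // ip-groups-client <nil>

	_, err = readWithMeta("not a client")
	fmt.Println(err) // unexpected meta type string
}
```

The same syntax shows up again in d.Get("resource_group_name").(string), since d.Get returns an interface{} value.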

I dug into this, hoping to find some function that could give me several IP Groups somehow. I found the AzureRM provider clients reference here, which points at the Network object here, which points to the IPGroupsClient object here, which is maintained by TomBuildsStuff, one of the maintainers of the AzureRM Provider code.

There I found a function called IPGroupsClient.ListByResourceGroup (ref) that lists the IP Groups in a resource group! That’s huge, and the first hint of hope I needed that I could actually do this. If someone smart already wrote a function that does what I need, I can use it.

```go
func (client IPGroupsClient) ListByResourceGroup(ctx context.Context, resourceGroupName string) (result IPGroupListResultPage, err error)
```

Writing the New IP Groups Data Source

Let’s start writing our new data source, based on the single IP group one. I’ll skip the package and import sections, and we’ll even skip the primary function call. Let’s focus just on the logic of reading and outputting the info we need.

First we define the child function, dataSourceIpGroupsRead. It takes d, a pointer to the ResourceData for this data source, and meta, an empty interface (interface{}) that carries the provider’s configured clients. The end of the function signature shows that it returns an error; by convention, a nil error means the call succeeded.

Next, we pull the IP Groups client out of meta.

Then we call timeouts.ForRead to create a context for our API calls, derived from the provider’s StopContext and bounded by this data source’s read timeout. The client uses that context for every request it makes.

Finally, we defer cancel(), so the context is cleaned up when the function returns.

```go
// Find IDs and names of multiple IP Groups, filtered by name substring
func dataSourceIpGroupsRead(d *pluginsdk.ResourceData, meta interface{}) error {
	// Establish a client to handle i/o operations against the API
	client := meta.(*clients.Client).Network.IPGroupsClient

	// Create a context for the request and defer cancellation
	ctx, cancel := timeouts.ForRead(meta.(*clients.Client).StopContext, d)
	defer cancel()
```

Then we need to find the resource group name — remember that’s a required input for the ListByResourceGroup function call that returns all the groups? On line 2 we take the d object, which contains all the resource data sent to us by the primary terraform parent, and do a Get call to find the string called resource_group_name, and convert it to a string.

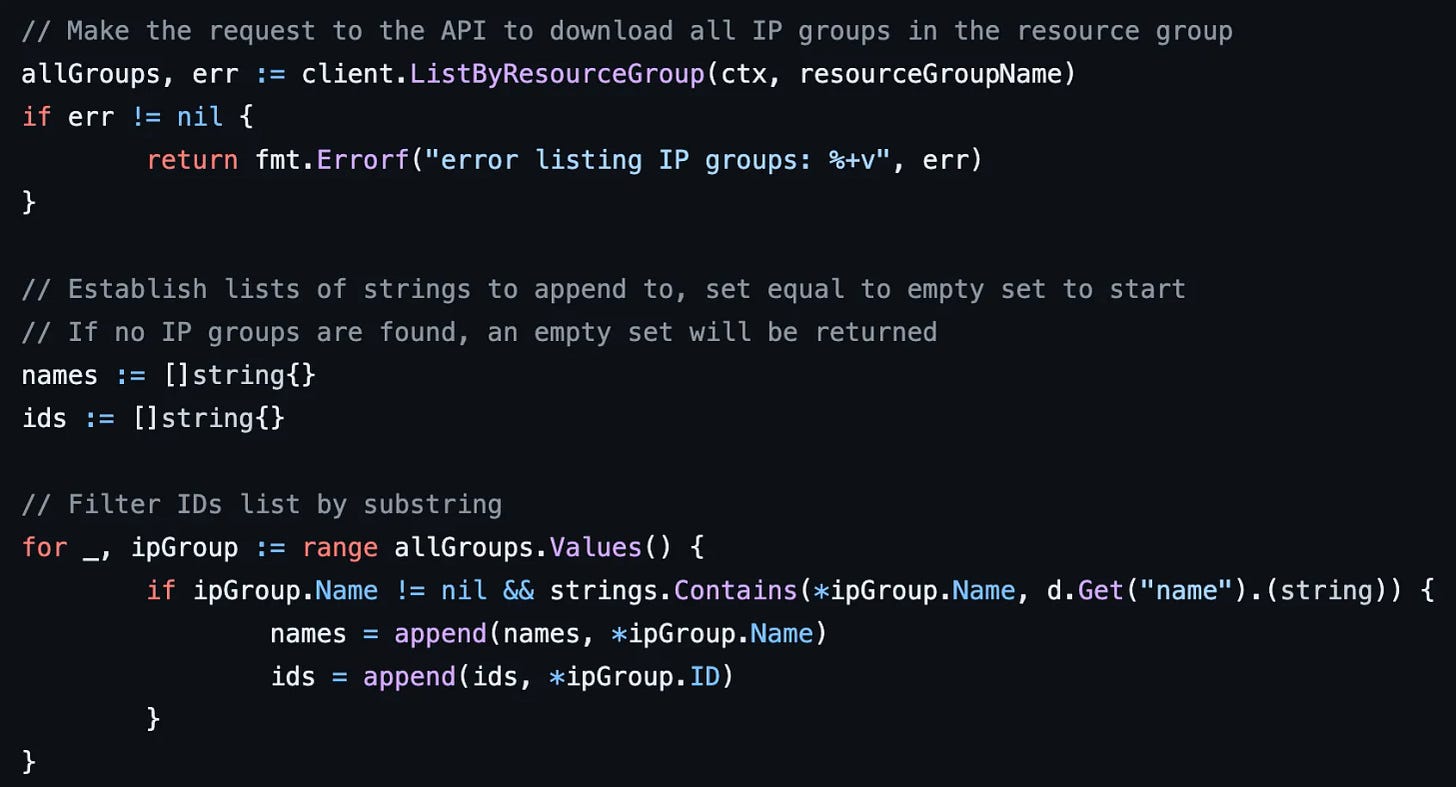

Then on line 5 we do something really cool. We establish a var called allGroups and set it equal to the response from the client.ListByResourceGroup call. We tell it which context to use, and we send in the resourceGroupName string.

The on line 6 we check to see if err has been populated with an error. If not, we assume our call worked, and we now have a list of all IP Groups in that resource group.

```go
	// Get resource group name from data source
	resourceGroupName := d.Get("resource_group_name").(string)

	// Make the request to the API to download all IP groups in the resource group
	allGroups, err := client.ListByResourceGroup(ctx, resourceGroupName)
	if err != nil {
		return fmt.Errorf("error listing IP groups: %+v", err)
	}
```

Next we declare two variables, names and ids, both set to an empty slice of strings. A slice is Go’s growable list type. Starting from empty slices means that if no IP Groups match, the data source returns empty lists.

Then we iterate through allGroups.Values(), our list of IP Groups, and filter it. Remember, the goal isn’t to always return ALL IP Groups in a resource group; we only want the ones whose names contain the substring sent from the parent function. So for each group, we check that its name isn’t nil and contains the substring (note that strings.Contains is case-sensitive), and if it matches, we append its name and its ID.

```go
	// Establish lists of strings to append to, set equal to empty set to start
	// If no IP groups are found, an empty set will be returned
	names := []string{}
	ids := []string{}

	// Filter IDs list by substring
	for _, ipGroup := range allGroups.Values() {
		if ipGroup.Name != nil && strings.Contains(*ipGroup.Name, d.Get("name").(string)) {
			names = append(names, *ipGroup.Name)
			ids = append(ids, *ipGroup.ID)
		}
	}
```
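Here’s a standalone version of that filter loop you can run without Azure. The `ipGroup` struct is a hypothetical, trimmed-down stand-in for the SDK’s IP Group model, which (like the snippet above) uses *string pointers for Name and ID; that’s why the nil check comes before dereferencing. This sketch also checks ID for nil, which the provider snippet doesn’t.

```go
package main

import (
	"fmt"
	"strings"
)

// ipGroup is a hypothetical stand-in for the SDK model; optional fields are pointers.
type ipGroup struct {
	Name *string
	ID   *string
}

// strPtr is a small helper to build *string values for the fake data.
func strPtr(s string) *string { return &s }

// filterBySubstring returns names and IDs of groups whose name contains substr.
// strings.Contains is case-sensitive, so "allow" would not match "Allow_IPs_01".
func filterBySubstring(groups []ipGroup, substr string) (names, ids []string) {
	names, ids = []string{}, []string{} // empty (not nil), so "no matches" is an empty list
	for _, g := range groups {
		if g.Name != nil && g.ID != nil && strings.Contains(*g.Name, substr) {
			names = append(names, *g.Name)
			ids = append(ids, *g.ID)
		}
	}
	return names, ids
}

func main() {
	prefix := "/subscriptions/0000/resourceGroups/net-rg/providers/Microsoft.Network/ipGroups/"
	groups := []ipGroup{
		{Name: strPtr("Allow_IPs_02"), ID: strPtr(prefix + "Allow_IPs_02")},
		{Name: strPtr("Deny_IPs_01"), ID: strPtr(prefix + "Deny_IPs_01")},
		{Name: nil, ID: strPtr(prefix + "unnamed")}, // skipped by the nil check
		{Name: strPtr("Allow_IPs_01"), ID: strPtr(prefix + "Allow_IPs_01")},
	}

	names, _ := filterBySubstring(groups, "Allow_IPs_")
	fmt.Println(names) // [Allow_IPs_02 Allow_IPs_01]
}
```

Note the output is still in API order, which is why the sorting step comes next.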

I found that the lists aren’t ordered, which makes me crazy, so we sort them. I hope everyone using this data source appreciates my organizational quirks 😆

Next, we look up the subscription ID from the client’s account info.

Resources, even data sources, aren’t tracked in the Terraform state (an absolute requirement for this data to be used at all) unless they have an ID. Since this data call should only ever be called once against each resource group, we use the ID of the resource group as the ID of this data call. Then we use d.SetId to set the ID of the data source. This stores the ID on the d object, which is what we received with all our info from the Terraform parent call, and which we’re now populating as we run.

```go
	// Sort lists of strings alphabetically
	slices.Sort(names)
	slices.Sort(ids)

	// Set resource ID, required for Terraform state
	// Since this is a multi-resource data source, we need to create a unique ID
	// Using the ID of the resource group
	subscriptionId := meta.(*clients.Client).Account.SubscriptionId
	id := commonids.NewResourceGroupID(subscriptionId, resourceGroupName)
	d.SetId(id.ID())
```
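One thing worth double-checking is that sorting names and ids separately doesn’t scramble which ID belongs to which name. It works here because every ID from a single ListByResourceGroup call shares the same subscription/resource group/provider prefix, so ordering the IDs is the same as ordering by name. Here’s a small sketch that checks that property; `sortAndCheck` and the sample data are hypothetical, and slices.Sort requires Go 1.21+.

```go
package main

import (
	"fmt"
	"slices"
	"strings"
)

// sortAndCheck sorts both slices in place (like the data source does) and reports
// whether ids[i] still ends with "/"+names[i] for every i.
func sortAndCheck(names, ids []string) bool {
	slices.Sort(names)
	slices.Sort(ids)
	for i := range names {
		if !strings.HasSuffix(ids[i], "/"+names[i]) {
			return false
		}
	}
	return true
}

func main() {
	prefix := "/subscriptions/0000/resourceGroups/net-rg/providers/Microsoft.Network/ipGroups/"

	// Paired but unordered, like the output of the append loop.
	names := []string{"Allow_IPs_03", "Allow_IPs_01", "Allow_IPs_02"}
	ids := make([]string, len(names))
	for i, n := range names {
		ids[i] = prefix + n
	}

	fmt.Println(sortAndCheck(names, ids)) // true
	fmt.Println(names)                    // [Allow_IPs_01 Allow_IPs_02 Allow_IPs_03]
}
```

If the data source ever returned IDs from more than one resource group, this shortcut would stop holding, and you’d want to sort name/ID pairs together instead.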

Then we set the names and IDs, and if setting either one fails, we return the error. This is probably overkill, but I wanted as much debugging as I could imagine.

Finally, we return nil, which both ends the function and tells the parent that there was no issue running: we exited in a happy state (the error return is nil, so no error).

```go
	// Set names
	err = d.Set("names", names)
	if err != nil {
		return fmt.Errorf("error setting names: %+v", err)
	}

	// Set IDs
	err = d.Set("ids", ids)
	if err != nil {
		return fmt.Errorf("error setting ids: %+v", err)
	}

	// Return nil error
	return nil
}
```

Now let’s go to the primary ip_groups data source function. This one is basically a switchboard. It doesn’t fetch anything itself: it tells the plugin SDK which fields the data source accepts and returns, and which child function to call. The SDK hands the d object to that child function (the one we just wrote), which fills in the data before it goes back to Terraform.

In the Read field, you can see we point at dataSourceIpGroupsRead, the function we just wrote.

In Timeouts, we set the default read timeout to 10 minutes. Users can override it with a timeouts block in their data source configuration.

And in Schema we see the data schema for this data source. It lists every field that can be filled and what type of data it expects. You can see the name and resource_group_name fields that are received from Terraform, and the computed ids and names fields that are populated by the child function. (The location and tags fields carried over from the single-group version aren’t set by our read function, so they stay empty.)

```go
func dataSourceIpGroups() *pluginsdk.Resource {
	return &pluginsdk.Resource{
		Read: dataSourceIpGroupsRead,
		Timeouts: &pluginsdk.ResourceTimeout{
			Read: pluginsdk.DefaultTimeout(10 * time.Minute),
		},
		Schema: map[string]*pluginsdk.Schema{
			"name": {
				Type:     pluginsdk.TypeString,
				Required: true,
			},
			"resource_group_name": commonschema.ResourceGroupNameForDataSource(),
			"location":            commonschema.LocationComputed(),
			"ids": {
				Type:     pluginsdk.TypeList,
				Computed: true,
				Elem:     &pluginsdk.Schema{Type: pluginsdk.TypeString},
			},
			"names": {
				Type:     pluginsdk.TypeList,
				Computed: true,
				Elem:     &pluginsdk.Schema{Type: pluginsdk.TypeString},
			},
			"tags": tags.SchemaDataSource(),
		},
	}
}
```

When you save this file, your editor will populate the import block at the top with any libraries your code requires. If any are underlined in red, your local environment doesn’t have them downloaded yet. To fetch them, open a terminal in your workspace (which, again, must have only this repo open) and run:

```shell
go get
```

And it’ll fetch and cache all dependencies of your project.

Does It Work? AKA How Do We Test This?

Well, does it actually work? Normally, for the non-compiled programs I write, I just… run them and print stuff as I go. However, Go is a compiled language, so we’d have to compile the whole provider before we could run it that way.

Which works, but it’s slow! At least on my computer, compiling the entire Terraform provider takes 10–15 minutes. That’s a really slow turnaround time, particularly with me being so new/n00by to Go. I’ll walk through those steps later, but first we have to start understanding some testing methodology.

Thankfully, Go has a robust testing toolkit. After all, it came out of Google, which builds and tests far more complex things than Terraform. go test can compile and run just the tests we target; we just have to know how to do it.

First, let’s find the tests. Open up the ip_groups_data_source_test.go file and let’s start breaking it down. We’ll read it from the bottom up, just like the resource. First of all, there’s a config function for an acceptance test, which returns the Terraform configuration the test builds and then tears down. Everything inside the backticks is plain Terraform text held in a Go string: the %s is a placeholder that fmt.Sprintf fills in with more configuration, and the data block does a lookup using the new azurerm_ip_groups functionality we’re building.

The goal here is to identify any possible use cases and test for them. I was genuinely curious what would happen if there was no match, so let’s test for that.

```go
func (IPGroupsDataSource) noResults(data acceptance.TestData) string {
	return fmt.Sprintf(`
%s

data "azurerm_ip_groups" "test" {
  name                = "doesNotExist"
  resource_group_name = azurerm_resource_group.test.name
  depends_on = [
    azurerm_ip_group.test,
  ]
}
`, IPGroupResource{}.basic(data))
}
```

You can see in the final argument to Sprintf that it loads even more configuration by calling the basic method on IPGroupResource, and that configuration is inserted at the %s. This code already exists for my use case, because some smart person already wrote the IP Group resource, and as part of that there’s some testing config to build the resource. So let’s use it. Here’s what we’re calling (link).

This is a Go function defined in another file, but you can see some simple Terraform syntax here. It configures the provider and builds a resource group. Note that the location is %s, an argument passed into this Terraform via Go, and that the resource group’s name contains a %d, which is replaced with a random integer before the config is passed to Terraform. That means this config can be built many times concurrently if needed (which is sometimes required when lots of tests use the same config primitive).

It also builds an ip_group named acceptanceTestIpGroup1. Note that my test above looks for a different name; that’s intended, since the test searches for an IP Group that doesn’t exist, i.e. one that doesn’t match any of the IP Groups that are built.

Expert tip: when Terraform runs inside these tests, it doesn’t print the normal error and debug messages you’re used to. For example, if you leave out the depends_on argument in the config above, the data lookup can run before the IP Group is built, and you can waste many hours (or days) figuring out what’s going wrong. Instead, copy the Terraform code into a regular terraform run and look at the errors it spits out. Ask me how I know 🙃

```go
func (IPGroupResource) basic(data acceptance.TestData) string {
	return fmt.Sprintf(`
provider "azurerm" {
  features {}
}

resource "azurerm_resource_group" "test" {
  name     = "acctestRG-network-%d"
  location = "%s"
}

resource "azurerm_ip_group" "test" {
  name                = "acceptanceTestIpGroup1"
  location            = azurerm_resource_group.test.location
  resource_group_name = azurerm_resource_group.test.name
}
`, data.RandomInteger, data.Locations.Primary)
}
```

Okay, now we’ve seen the whole config. Let’s look at what we’re actually testing. The code is here. This is a single Go test: it creates an IPGroupsDataSource value and runs a test step using its noResults config. Running it actually builds resources in Azure, which is super cool, really.

It also lists, in the Check block, which values mean the test is a success 💚. I haven’t been able to find any formal docs (neither could Copilot), but the checks confirm that the data source in state has ids and names attributes, each with a count of 0. The # suffix is how Terraform’s flattened state format expresses the number of elements in a list or set, and the % suffix (which I also see in other tests) expresses the number of entries in a map.

If anyone has more info on what validation checks are available, please leave that info in the comments!

```go
func TestAccDataSourceIPGroups_noResults(t *testing.T) {
	data := acceptance.BuildTestData(t, "data.azurerm_ip_groups", "test")
	r := IPGroupsDataSource{}

	data.DataSourceTest(t, []acceptance.TestStep{
		{
			Config: r.noResults(data),
			Check: acceptance.ComposeTestCheckFunc(
				check.That(data.ResourceName).Key("ids.#").HasValue("0"),
				check.That(data.ResourceName).Key("names.#").HasValue("0"),
			),
		},
	})
}
```
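To picture what ids.# and names.# refer to, here’s a simplified sketch of how a list attribute looks once it’s flattened into the key/value form those checks read. The `flattenList` helper is hypothetical and only approximates Terraform’s flattened state format for lists: the .# key holds the element count and each element gets an index key.

```go
package main

import (
	"fmt"
	"strconv"
)

// flattenList approximates how a list attribute appears to check.That(...).Key(...):
// "<attr>.#" holds the element count, "<attr>.<i>" holds each element.
func flattenList(attr string, values []string) map[string]string {
	flat := map[string]string{attr + ".#": strconv.Itoa(len(values))}
	for i, v := range values {
		flat[attr+"."+strconv.Itoa(i)] = v
	}
	return flat
}

func main() {
	empty := flattenList("ids", []string{})
	fmt.Println(empty["ids.#"]) // 0, which is what HasValue("0") asserts in the noResults test

	two := flattenList("names", []string{"Allow_IPs_01", "Allow_IPs_02"})
	fmt.Println(two["names.#"], two["names.1"]) // 2 Allow_IPs_02
}
```

So a single or multiple match test could assert names.# equals "1" or "3", and even check individual elements by index.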

In Visual Studio Code with the Go extension installed, you’ll see run test and debug test links above each test function, which is awesome, but we’re not quite ready yet. Remember, this test will build resources in Azure! But like, which Azure? With which permissions? We have to pass auth config to this test so it can do that.

You can optionally create a .env file in the root of the workspace (the local repo) that looks like the following. The repo’s .gitignore already ignores it, so it won’t be committed. This information should match an App Registration/Service Principal in a testing tenant, and that App Registration must be granted permissions on the subscription so it can create the required resources.

```shell
ARM_CLIENT_ID=6aa4935e-xxxxxx
ARM_CLIENT_SECRET='7iQ8Q~xxxxxxx'
ARM_SUBSCRIPTION_ID=ca578a05-xxxxx
ARM_TENANT_ID=722653a2-xxxxx
ARM_ENVIRONMENT=public
ARM_METADATA_HOST=
ARM_TEST_LOCATION="east us"
ARM_TEST_LOCATION_ALT="west us"
ARM_TEST_LOCATION_ALT2="central us"
```

Then you can click run test in VSC to run a single test and see the results. I’m much more a fan of running the tests from the terminal, so let’s walk through how that works. First, export the same information to your terminal.

```shell
export ARM_CLIENT_ID=6aa4935e-xxxxxx
export ARM_CLIENT_SECRET='7iQ8Q~xxxxxxx'
export ARM_SUBSCRIPTION_ID=ca578a05-xxxxx
export ARM_TENANT_ID=722653a2-xxxxx
export ARM_ENVIRONMENT=public
export ARM_METADATA_HOST=
export ARM_TEST_LOCATION="east us"
export ARM_TEST_LOCATION_ALT="west us"
export ARM_TEST_LOCATION_ALT2="central us"
```

And then you can run the test suite from the same terminal window. The -run=xxx value in TESTARGS is a regular expression matched against test names, so a plain string like the one below acts as a substring match. For instance, this command runs every test whose name contains TestAccDataSourceIPGroups_:

```shell
make acctests SERVICE='network' TESTARGS='-run=TestAccDataSourceIPGroups_' TESTTIMEOUT='10m'
```

Here are example results. You can see that it found 3 tests and ran them concurrently (if you refresh quickly, you’ll actually see 3 different resource groups get created in your tenant; they’re fast!), and then the resources automatically get destroyed.

Since all 3 tests pass, the ultimate result near the bottom is PASS. Notably, this is the information required to be provided in your Pull Request to confirm your new functionality actually works.
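Since -run takes a regular expression (go test matches it against each test name without anchoring), here’s a small sketch using the standard regexp package to show which test names a few patterns would select. The `selected` helper is hypothetical (go test does its own matching internally), and TestAccIpGroup_basic is a made-up name for an unrelated test.

```go
package main

import (
	"fmt"
	"regexp"
)

// selected returns the test names that an unanchored -run style pattern would match.
func selected(pattern string, tests []string) ([]string, error) {
	re, err := regexp.Compile(pattern)
	if err != nil {
		return nil, err
	}
	var out []string
	for _, name := range tests {
		if re.MatchString(name) {
			out = append(out, name)
		}
	}
	return out, nil
}

func main() {
	tests := []string{
		"TestAccDataSourceIPGroups_noResults",
		"TestAccDataSourceIPGroups_single",
		"TestAccDataSourceIPGroups_multiple",
		"TestAccIpGroup_basic", // hypothetical unrelated test
	}
	for _, p := range []string{"TestAccDataSourceIPGroups_", "_single$", "IPGroups_(single|multiple)"} {
		got, _ := selected(p, tests)
		fmt.Printf("%-28s -> %v\n", p, got)
	}
}
```

Anchors like $ and alternation like (a|b) are handy when you want to rerun just one or two tests after a fix.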

```shell
❯ make acctests SERVICE='network' TESTARGS='-run=TestAccDataSourceIPGroups_' TESTTIMEOUT='10m'
==> Checking that code complies with gofmt requirements...
==> Checking that Custom Timeouts are used...
==> Checking that acceptance test packages are used...
TF_ACC=1 go test -v ./internal/services/network -run=TestAccDataSourceIPGroups_ -timeout 10m -ldflags="-X=github.com/hashicorp/terraform-provider-azurerm/version.ProviderVersion=acc"
=== RUN TestAccDataSourceIPGroups_noResults
=== PAUSE TestAccDataSourceIPGroups_noResults
=== RUN TestAccDataSourceIPGroups_single
=== PAUSE TestAccDataSourceIPGroups_single
=== RUN TestAccDataSourceIPGroups_multiple
=== PAUSE TestAccDataSourceIPGroups_multiple
=== CONT TestAccDataSourceIPGroups_noResults
=== CONT TestAccDataSourceIPGroups_multiple
=== CONT TestAccDataSourceIPGroups_single
--- PASS: TestAccDataSourceIPGroups_single (68.40s)
--- PASS: TestAccDataSourceIPGroups_noResults (121.00s)
--- PASS: TestAccDataSourceIPGroups_multiple (122.55s)
PASS
ok github.com/hashicorp/terraform-provider-azurerm/internal/services/network 127.541s
```

Compile the Local Provider

But does it actually work? I mean, tests are all well and good, but like, I want to have a simple terraform config and see it actually do the thing! So let’s do that.

Building the whole provider is pretty simple: you run make build, and it compiles the whole provider and outputs an executable in the bin folder under your $GOPATH. If you haven’t set that variable in your terminal, it defaults to $HOME/go.

Remember, this will take a while! On my 2021 M1 Mac, it takes about 10–15 minutes.

```shell
make build
```

If there’s an error, it’ll print it and fail to create the binary. If it succeeds, it’ll output the binary here:

```shell
❯ ls -lah ~/go/bin/ | grep azurerm
-rwxr-xr-x 1 kyler staff 345M Jan 18 12:11 terraform-provider-azurerm
```

By default, Terraform doesn’t check this directory before running; it still goes to the HashiCorp public registry to download the provider, which doesn’t help us. We want it to use our new shiny one, so we have to tell it to. Create a file in your home directory called exactly .terraformrc and populate it with the following config. Of course, update the path to use your own username rather than kyler. Save it, and your local Terraform runs will use your local build of azurerm instead of downloading it from the registry.

```hcl
provider_installation {
  # Use /home/developer/go/bin as an overridden package directory
  # for the hashicorp/azurerm provider. This disables the version and checksum
  # verifications for this provider and forces Terraform to look for the
  # azurerm provider plugin in the given directory.
  dev_overrides {
    "hashicorp/azurerm" = "/Users/kyler/go/bin"
  }

  # For all other providers, install them directly from their origin provider
  # registries as normal. If you omit this, Terraform will _only_ use
  # the dev_overrides block, and so no other providers will be available.
  direct {}
}
```

Let’s create an example Terraform config that uses the new functionality. Note that we’re not pointing at any local binary; that’s all handled outside of our Terraform code.

```hcl
provider "azurerm" {
  features {}
}

data "azurerm_ip_groups" "ip_groups" {
  resource_group_name = "rg-name"
  name                = "IP_List_Number_" # Partial sub-string match
}

output "ip_group_ids" {
  value = data.azurerm_ip_groups.ip_groups.ids
}

output "ip_group_names" {
  value = data.azurerm_ip_groups.ip_groups.names
}
```

When we run terraform plan, it’s clearly using the local provider: first, because it doesn’t immediately exit to say we’re using a data source that doesn’t exist (the new one we built), and second, because of this great warning that we’re using a locally compiled provider.

And you can see it actually works! 🚀 🔥

```shell
❯ terraform plan
╷
│ Warning: Provider development overrides are in effect
│
│ The following provider development overrides are set in the CLI configuration:
│ - hashicorp/azurerm in /Users/kyler/go/bin
│
│ The behavior may therefore not match any released version of the provider and applying
│ changes may cause the state to become incompatible with published releases.
╵
data.azurerm_ip_groups.ip_groups: Reading...
data.azurerm_ip_groups.ip_groups: Read complete after 5s [id=/subscriptions/797446e8-xxxx/resourceGroups/xxxxx]

Changes to Outputs:
  + ip_group_ids   = [
      + "/subscriptions/797446e8-xxxx/resourceGroups/net-rg/providers/Microsoft.Network/ipGroups/Allow_IPs_01",
      + "/subscriptions/797446e8-xxxx/resourceGroups/net-rg/providers/Microsoft.Network/ipGroups/Allow_IPs_02",
      + "/subscriptions/797446e8-xxxx/resourceGroups/net-rg/providers/Microsoft.Network/ipGroups/Allow_IPs_03",
    ]
  + ip_group_names = [
      + "Allow_IPs_01",
      + "Allow_IPs_02",
      + "Allow_IPs_03",
    ]
```

The AzureRM v3.89.0 provider has now been released, so you can use this functionality without any of this adventuring. Please do :D

Summary

In this blog we walked through confirming that a functionality we wanted out of the AzureRM provider wasn’t present, even checking in the source code. We poked at the internals of the API code in the provider and saw that there was multi-group functionality present, which gave us hope.

We built the files and functionality to find IP Groups in Azure, then filter them based on a name substring — and we even sorted them!

We wrote tests for the new functionality and learned to run them and read the results, in both VSC and in the terminal.

Then we compiled the provider, told our Terraform to use it for local runs, and made sure it all worked properly.

I hope this was as much fun for you as it was for me. Please go forth and build cool stuff. Thanks, y’all.

Good luck out there.

kyler